Nginx (pronounced “engine-x”) is versatile, open-source software primarily functioning as a high-performance web server, reverse proxy, load balancer, and HTTP cache. It was initially created by Igor Sysoev to solve the challenge of handling massive numbers of simultaneous user connections reliably.

This guide delves into what Nginx is, exploring its core architecture and explaining how it achieves its impressive performance. We’ll uncover its main functions, outline the key benefits it offers, compare it briefly with alternatives like Apache, and give you a basic understanding to get started.

What is Nginx?

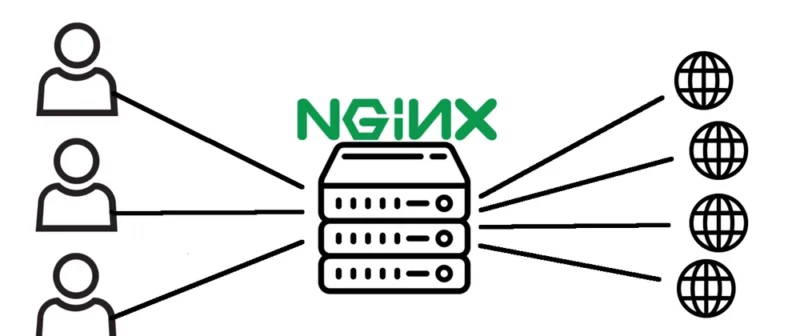

Nginx is a high-performance, open-source web server and reverse proxy server. It is designed to handle heavy traffic, serve static content quickly, and proxy and load-balance requests efficiently. It is commonly used to serve websites directly, to sit in front of application servers as a reverse proxy, and to distribute traffic across multiple servers as a load balancer for better performance and reliability.

Some of the core features of Nginx include:

- Web Server: It serves static files (such as HTML, CSS, and images) efficiently. It can handle thousands of simultaneous connections with low resource consumption.

- Reverse Proxy: Nginx can act as a reverse proxy, forwarding client requests to an upstream server such as Apache or an application server (for example, a Django or Node.js application). This improves security, load balancing, and scalability.

- Load Balancer: Nginx can distribute incoming traffic across multiple backend servers to balance the load and ensure that no single server is overwhelmed. It supports different load balancing algorithms like round-robin, least connections, and IP-hash.

- SSL/TLS Termination: Nginx can terminate SSL/TLS connections, offloading the computationally expensive task of encrypting and decrypting traffic from the backend servers.

- Caching: Nginx can cache responses from backend servers to speed up delivery of frequently requested content, reducing the load on those servers and improving response times.

- HTTP/2 Support: Nginx supports HTTP/2, a newer version of the HTTP protocol that improves performance, especially for websites with many assets like JavaScript, images, and CSS files.

- High Availability & Fault Tolerance: When several backend servers are configured, Nginx can stop routing requests to a server that fails and keep sending traffic to the healthy ones, limiting the impact of individual server failures.

How Nginx Works

The magic behind Nginx’s performance lies largely in its architecture. Unlike some traditional web servers, Nginx uses a highly efficient model for managing incoming requests and server resources, which lets it handle very heavy traffic gracefully.

The Event-Driven, Asynchronous Model: Nginx’s Secret Sauce

Nginx utilizes an event-driven, asynchronous, non-blocking architecture. This sounds complex, but think of it as handling tasks without waiting idly. Traditional models might dedicate one worker (process or thread) per connection, which can consume significant resources when many users connect simultaneously.

Instead, Nginx worker processes listen for ‘events’ on multiple connections at once. An event could be a new incoming connection, data arriving from a user, or readiness to send data back. This asynchronous approach means Nginx doesn’t get stuck waiting for one slow operation to complete.

It uses non-blocking Input/Output (I/O) operations. When a worker needs to read or write data, it initiates the operation and can immediately switch to handling other events if the I/O isn’t instantly ready. This avoids wasted time and resources tied up in waiting.

Operating systems provide mechanisms like epoll (on Linux) or kqueue (on FreeBSD/macOS) that efficiently notify Nginx about which connections have pending events. Nginx leverages these tools to manage thousands of connections per worker process with minimal overhead, contributing directly to its renowned performance.
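To make the event-driven idea concrete, here is a minimal Python sketch (not Nginx’s actual C code) of a single "worker" that watches several connections through one selector. Python’s selectors.DefaultSelector uses epoll on Linux and kqueue on BSD/macOS when available. The connections come from socket.socketpair() so the example runs without a network; the echo behaviour and the names add_connection and run_once are hypothetical.

```python
import selectors
import socket


def add_connection(sel: selectors.BaseSelector, conn: socket.socket) -> None:
    """Register a connection with the worker's event loop."""
    conn.setblocking(False)  # a slow peer must never stall the whole loop
    sel.register(conn, selectors.EVENT_READ)


def run_once(sel: selectors.BaseSelector, timeout: float = 1.0) -> int:
    """Handle every connection that is ready now; return how many events were handled."""
    handled = 0
    # select() asks the OS (epoll, kqueue, ...) which sockets have pending events,
    # so the worker only touches connections that can make progress right now.
    for key, _mask in sel.select(timeout):
        conn = key.fileobj
        data = conn.recv(4096)
        if data:
            # Assumes the small reply fits in the socket's send buffer.
            conn.send(b"echo:" + data)
        else:
            # An empty read means the peer closed its end of the connection.
            sel.unregister(conn)
            conn.close()
        handled += 1
    return handled


if __name__ == "__main__":
    # DefaultSelector picks epoll on Linux and kqueue on BSD/macOS when available.
    sel = selectors.DefaultSelector()
    pairs = [socket.socketpair() for _ in range(3)]  # three simulated "clients"
    for server_end, _client in pairs:
        add_connection(sel, server_end)
    for i, (_server_end, client) in enumerate(pairs):
        client.sendall(f"req{i}".encode())
    handled = 0
    while handled < len(pairs):
        handled += run_once(sel)
    for _server_end, client in pairs:
        print(client.recv(100))
```

One loop serves all three connections; none of them gets a dedicated thread or process, which is the property the paragraph above describes.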

Master and Worker Processes Explained

Nginx operates using a central master process and several worker processes. The master process typically runs with higher privileges (like the ‘root’ user). Its main jobs include reading and validating the configuration files, binding to network ports (like port 80 for HTTP), and managing the worker processes.

The master process spawns one or more worker processes. These workers run under a less privileged user account for enhanced security. It’s the worker processes that actually handle the incoming client connections and requests, doing the heavy lifting of serving content or proxying requests.

This separation allows for graceful operations. For instance, you can reload the Nginx configuration without dropping active connections: the master process validates the new configuration, starts new worker processes that use it, and signals the old workers to stop accepting new connections and exit once they finish serving their current requests.

This multi-process model, combined with the event-driven architecture within each worker, is key to Nginx’s low memory and CPU footprint, even when handling tens of thousands of concurrent connections. Because the number of workers is usually set to match the number of CPU cores (for example with worker_processes auto;), Nginx uses the hardware efficiently without the overhead of a thread or process per connection.
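The master/worker split can be sketched in Python on a Unix-like system (it relies on os.fork, so it will not run on Windows). This is a hypothetical illustration, not how Nginx is implemented: the "master" binds the listening socket once, then forks workers that inherit it and accept connections themselves. Privilege dropping is only noted in a comment, and each demo worker serves a single connection and exits so the example terminates.

```python
import os
import socket


def start_master(num_workers: int = 2) -> tuple[socket.socket, list[int]]:
    """Bind once in the 'master', then fork workers that share the listening socket."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port for the demo
    listener.listen()
    worker_pids = []
    for _ in range(num_workers):
        pid = os.fork()
        if pid == 0:
            # Worker process: it inherits the already-bound socket from the master.
            # A real server would drop privileges here (e.g. os.setuid) before serving.
            try:
                conn, _addr = listener.accept()
                with conn:
                    conn.sendall(str(os.getpid()).encode())
            finally:
                os._exit(0)  # each demo worker serves exactly one connection, then exits
        worker_pids.append(pid)
    return listener, worker_pids


def ask_worker(port: int) -> int:
    """Connect as a client and return the PID of the worker that answered."""
    with socket.create_connection(("127.0.0.1", port)) as client:
        chunks = []
        while chunk := client.recv(64):
            chunks.append(chunk)
    return int(b"".join(chunks))
```

Calling start_master(2) and then ask_worker twice yields two different worker PIDs, neither of which is the master’s: the master only sets things up, and the workers do the serving.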

What is Nginx Used For?

Nginx isn’t just one thing; its versatility is a major reason for its popularity. It can wear multiple hats within your web infrastructure, often simultaneously. Understanding these core functions helps explain why it’s deployed in so many different scenarios across the internet today.

As a High-Performance Web Server

In its most fundamental role, Nginx acts as a web server. This means it directly handles requests from users’ browsers and serves the requested files. It’s particularly adept at delivering static content – files like HTML pages, CSS stylesheets, JavaScript code, and images.

Its event-driven architecture makes it incredibly fast at serving these static assets, often significantly faster than older server models, especially under heavy load. This speed directly contributes to a quicker, more responsive experience for website visitors, enhancing user satisfaction and potentially improving SEO rankings.

While Nginx excels with static files, it also handles dynamic content. It doesn’t process dynamic languages like PHP or Python directly. Instead, it efficiently passes those requests to a separate backend application server (like PHP-FPM or a Python WSGI server) and then relays the generated response back to the client.

- Example: When you request a simple image (logo.png), Nginx might serve it directly from the server’s disk. If you request your blog feed (/blog), Nginx passes this to a PHP processor, gets the dynamically generated HTML, and sends that HTML back to your browser.
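The static-versus-dynamic decision in this example can be modeled as a small routing function. In Nginx the choice is expressed with location blocks rather than code, so treat this as a simplified Python sketch; the extension list, document root, and PHP backend address are hypothetical values chosen for illustration.

```python
from pathlib import PurePosixPath

# Hypothetical settings for illustration; real values come from your Nginx config.
STATIC_EXTENSIONS = {".html", ".css", ".js", ".png", ".jpg", ".svg"}
DOCUMENT_ROOT = "/var/www/html"
PHP_BACKEND = "127.0.0.1:9000"  # e.g. where a PHP-FPM pool might listen


def route(request_path: str) -> tuple[str, str]:
    """Decide whether a request is served from disk or handed to the backend."""
    path = PurePosixPath(request_path)
    if ".." in path.parts:
        # Refuse paths that try to escape the document root.
        return ("reject", "400 Bad Request")
    if path.suffix.lower() in STATIC_EXTENSIONS:
        # Static asset: map the URL path onto a file under the document root.
        return ("static", DOCUMENT_ROOT + request_path)
    # Everything else is treated as dynamic and passed to the application server.
    return ("backend", PHP_BACKEND)


print(route("/logo.png"))
print(route("/blog"))
```

The image request resolves to a file path, while /blog has no static extension and is handed to the backend, mirroring the two cases in the example.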

As a Powerful Reverse Proxy

One of Nginx’s most common and powerful applications is acting as a reverse proxy. A reverse proxy sits in front of one or more web or application servers, intercepting incoming client requests before they reach the backend. It acts as a gateway or intermediary for your servers.

Think of it like a receptionist for your web servers. Clients talk to the receptionist (Nginx), who then directs the request to the correct department (backend server) and relays the response. This provides numerous benefits for security, performance, and reliability in your setup.

A key benefit is enhanced security. The reverse proxy shields the identity and characteristics of your backend servers from direct exposure to the internet. Nginx can handle tasks like SSL/TLS termination, decrypting secure HTTPS requests so backend servers don’t need to spend resources on encryption.

It also enables performance optimizations like caching frequently accessed content and compressing data before sending it to users, reducing bandwidth usage and speeding up load times. Nginx can serve cached content directly without bothering the backend, significantly reducing server load.

- Example: A user visits https://mysecureapp.com. Nginx receives the request, handles the HTTPS decryption, perhaps checks for security threats, then forwards the plain HTTP request to an internal server like 10.0.0.5:8080. The internal server processes it and sends the response back to Nginx, which then encrypts it and sends it to the user.
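The proxy’s core job (receive a request, forward it to an internal server, relay the answer) can be sketched with Python’s standard library. This is a hypothetical toy, not production code: TLS termination, security checks, and backend error handling are omitted, and both servers listen on loopback ports picked by the OS. The X-Forwarded-For header is a common convention for passing the client address to the backend; Nginx does not add it by default, and it is typically set with proxy_set_header.

```python
import http.server
import threading
import urllib.request

# Opener that ignores any HTTP(S)_PROXY environment variables, so requests go direct.
DIRECT = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class BackendHandler(http.server.BaseHTTPRequestHandler):
    """Stand-in for the internal application server (10.0.0.5:8080 in the example)."""

    def do_GET(self):
        client = self.headers.get("X-Forwarded-For", "unknown")
        body = f"backend saw {self.path} for {client}".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):  # keep the demo quiet
        pass


class ReverseProxyHandler(http.server.BaseHTTPRequestHandler):
    """Forwards each GET to one upstream and relays the answer (no TLS, no error handling)."""

    upstream = "http://127.0.0.1:8080"  # hypothetical address; overridden in the demo

    def do_GET(self):
        request = urllib.request.Request(
            self.upstream + self.path,
            # Pass the real client address along, since the backend only sees the proxy.
            headers={"X-Forwarded-For": self.client_address[0]},
        )
        with DIRECT.open(request, timeout=5) as resp:
            status = resp.status
            content_type = resp.headers.get("Content-Type", "application/octet-stream")
            body = resp.read()
        self.send_response(status)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass


def serve_in_background(handler_class) -> http.server.HTTPServer:
    """Start an HTTP server on a free local port in a daemon thread."""
    server = http.server.HTTPServer(("127.0.0.1", 0), handler_class)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

To try it, start a BackendHandler server, point ReverseProxyHandler.upstream at its port, start the proxy, and request a path from the proxy: the response comes from the backend, which learns the client’s address only through the forwarded header.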

As an Efficient Load Balancer

Nginx functions superbly as a load balancer. In environments with multiple backend servers running the same application, a load balancer distributes incoming client requests intelligently across those servers. This prevents any single server from becoming overloaded and improves overall application responsiveness.

Imagine a popular online store during a major sale event. A single server might crash under the immense traffic. A load balancer like Nginx sits in front, spreading the incoming requests evenly across a pool of identical web servers (often called an upstream group or backend pool).

Nginx supports several load balancing methods (algorithms) to decide which backend server gets the next request. Common methods include:

- Round Robin: Distributes requests sequentially to each server in the list (Server 1, then Server 2, then Server 3, then back to 1). Simple and often the default.

- Least Connections: Sends the next request to the server currently handling the fewest active connections. Adapts better to varying request complexities.

- IP Hash: Ensures requests from the same client IP address consistently go to the same backend server (useful for maintaining user sessions).
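The three methods above can be sketched in Python as small selector classes. These are simplified models, not Nginx’s code, and the class and method names are hypothetical. For example, Nginx’s ip_hash uses only the first three octets of an IPv4 address as its key, whereas this sketch hashes the whole address string with SHA-256.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotation: S1, S2, S3, S1, ..."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Pick the server with the fewest active connections (ties go to the first listed)."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self, client_ip=None):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1  # the caller must call release() when the request ends
        return server

    def release(self, server):
        self.active[server] -= 1


class IPHash:
    """Map each client IP to a stable server so its session stays on one backend."""

    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.servers[int.from_bytes(digest[:4], "big") % len(self.servers)]
```

Round robin ignores the client entirely, least connections needs to be told when requests finish, and IP hash trades even distribution for stickiness: the same address always lands on the same server as long as the server list does not change.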

Using Nginx as a load balancer significantly boosts application scalability (you can add more backend servers easily) and high availability (if one backend server fails, Nginx directs traffic to the healthy ones). This results in a more robust and reliable service for end-users.

- Example: A website has three backend servers (S1, S2, S3). Nginx, using round-robin, sends the first user to S1, the second to S2, the third to S3, the fourth back to S1, and so on, ensuring traffic is spread evenly across the available servers.
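The high-availability point (skipping a backend that has failed) can be added to round robin with a little bookkeeping. This Python sketch is a hypothetical model: here servers are marked down and up by hand, while Nginx Open Source infers failures passively from failed requests, controlled by the max_fails and fail_timeout parameters of servers in an upstream block.

```python
class FailoverRoundRobin:
    """Round robin that skips servers currently marked as down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.down = set()
        self._next = 0  # index where the rotation continues

    def mark_down(self, server):
        self.down.add(server)

    def mark_up(self, server):
        self.down.discard(server)

    def pick(self):
        # Try each server at most once per call, starting where the rotation left off.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server not in self.down:
                return server
        # With no healthy backend left, a proxy would answer with an error (e.g. 502).
        raise RuntimeError("no healthy backend available")
```

With S1, S2, and S3 healthy, the picks follow the S1, S2, S3, S1 pattern from the example; after mark_down("S2"), traffic alternates between S3 and S1 until S2 is marked up again.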

As an HTTP Cache

Nginx incorporates powerful HTTP caching capabilities, often used when acting as a reverse proxy. Caching involves storing copies of frequently requested content closer to the user (in this case, on the Nginx server itself) rather than fetching it from the backend server every single time.

When a user requests a resource (like an image or even a whole web page) that Nginx has previously cached and still considers fresh, Nginx can serve the response directly from its cache. This is significantly faster than forwarding the request to the backend.

This caching dramatically reduces the latency experienced by the end-user, making the website feel much faster. It also substantially decreases the load on the backend application servers, allowing them to handle more unique requests or operate with fewer resources, saving costs and improving stability.

Nginx offers fine-grained control over caching behavior through configuration directives. You can specify what gets cached, for how long (the TTL, or time to live), under what conditions, and where the cache lives on disk (cached responses are stored as files, while their keys are kept in a shared memory zone), allowing optimization for specific application needs.

- Example: A news site’s homepage (/) is requested frequently. Nginx is configured to cache it for 60 seconds. The first request goes to the backend, and Nginx caches the response. For the next 60 seconds, subsequent requests for / are served instantly from Nginx’s cache, without hitting the backend application server.
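The 60-second homepage example can be modeled with a small time-to-live cache. This is a hypothetical Python sketch, not Nginx’s cache: entries live in a dictionary, the clock is injectable so the behaviour can be checked without waiting, and the HIT/MISS/EXPIRED labels are borrowed from the values Nginx reports in its $upstream_cache_status variable.

```python
import time
from typing import Callable


class TTLCache:
    """Cache responses by key and treat them as fresh for `ttl` seconds."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so tests can control time
        self._store = {}  # key -> (expires_at, response)

    def get_or_fetch(self, key: str, fetch: Callable[[], bytes]) -> tuple[bytes, str]:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now < entry[0]:
            return entry[1], "HIT"  # fresh copy: the backend is not contacted
        response = fetch()  # only misses and expired entries reach the backend
        self._store[key] = (now + self.ttl, response)
        return response, "MISS" if entry is None else "EXPIRED"
```

With ttl=60, the first request for / is a MISS that calls the backend, requests in the following 60 seconds are HITs, and the first request after that is EXPIRED and refreshes the stored copy.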

Other Capabilities (Briefly)

While web serving, reverse proxying, load balancing, and caching are its most famous roles, Nginx’s flexibility extends further. It can also function effectively as a high-performance mail proxy server, handling email protocols like IMAP, POP3, and SMTP.

Additionally, through its stream module, Nginx can perform generic TCP and UDP proxying and load balancing. This allows it to manage traffic for various network applications beyond the standard web protocols, making it a versatile tool for broader network infrastructure tasks when needed.
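Nginx’s stream module is driven by configuration rather than code, but the job it performs for TCP can be sketched in Python with asyncio: accept a connection, open one to an upstream service, and relay bytes in both directions without interpreting them. Everything here (the function names, the toy echo upstream, the loopback addresses) is a hypothetical illustration; UDP, balancing across several upstreams, and timeouts are left out.

```python
import asyncio


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction; when the sender is done, half-close the other side."""
    while data := await reader.read(4096):
        writer.write(data)
        await writer.drain()
    if writer.can_write_eof():
        writer.write_eof()


async def handle_client(client_reader, client_writer, upstream_host, upstream_port):
    # One upstream connection per client connection, like a plain TCP proxy.
    up_reader, up_writer = await asyncio.open_connection(upstream_host, upstream_port)
    try:
        # Relay both directions at once; the bytes are never parsed or modified.
        await asyncio.gather(pipe(client_reader, up_writer), pipe(up_reader, client_writer))
    finally:
        up_writer.close()
        client_writer.close()


async def start_tcp_proxy(port, upstream_host, upstream_port):
    return await asyncio.start_server(
        lambda r, w: handle_client(r, w, upstream_host, upstream_port), "127.0.0.1", port
    )


async def echo(reader, writer):
    """Toy upstream service: send back whatever arrives, then close."""
    writer.write(await reader.read())
    await writer.drain()
    writer.close()


async def demo() -> bytes:
    upstream = await asyncio.start_server(echo, "127.0.0.1", 0)
    upstream_port = upstream.sockets[0].getsockname()[1]
    proxy = await start_tcp_proxy(0, "127.0.0.1", upstream_port)
    proxy_port = proxy.sockets[0].getsockname()[1]
    reader, writer = await asyncio.open_connection("127.0.0.1", proxy_port)
    writer.write(b"ping")
    writer.write_eof()  # signal end of request so the echo service replies and closes
    reply = await asyncio.wait_for(reader.read(), timeout=5)
    writer.close()
    proxy.close()
    upstream.close()
    return reply


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Because the proxy never looks inside the byte stream, the same relay works for any TCP-based protocol, which is what makes this kind of proxying useful beyond HTTP.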

Top 5 Benefits: Why Choose Nginx?

Given its multiple functions, what makes Nginx such a popular choice? Its widespread adoption isn’t accidental. Developers and system administrators choose Nginx for several compelling advantages that translate into tangible improvements for websites and applications. Let’s explore the top five benefits.

- High Performance & Speed: This is often the primary driver for choosing Nginx. Its event-driven architecture allows it to handle a vast number of simultaneous connections (high concurrency) with exceptional speed, especially when serving static content or acting as a proxy/load balancer. Data from W3Techs consistently shows Nginx powering a large percentage (often over 30-35%) of the world’s top websites, a sign of how widely it is trusted under demanding loads.

- Excellent Scalability: Nginx scales remarkably well, both vertically (on more powerful hardware with more CPU cores) and horizontally (across multiple servers using its load balancing features). As your website or application traffic grows, Nginx can grow with you, efficiently utilizing available resources without becoming a performance bottleneck itself.

- High Resource Efficiency: Compared to older server models, Nginx generally consumes significantly less memory and CPU per connection. This efficiency is particularly noticeable under high concurrency scenarios. Lower resource usage means you can handle more traffic on the same hardware, potentially reducing infrastructure costs significantly.

- Proven Reliability & Stability: Nginx has a long track record of stability in demanding production environments. Because a small, fixed set of worker processes handles all connections, its memory use stays predictable as traffic grows. Its long-standing use by major internet companies (such as Netflix, WordPress.com, and, for many years, Cloudflare) demonstrates its reliability for mission-critical services that need high uptime.

- Rich Features & Flexibility: Nginx boasts a wealth of built-in features and a modular design allowing further extension. It supports modern web protocols like HTTP/2, offers robust SSL/TLS capabilities, advanced load balancing options, URL rewriting, Gzip compression, rate limiting, access controls, and much more, providing immense flexibility through its configuration system.
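Of the features listed, rate limiting is the easiest to misread, so here is a minimal Python model of the "leaky bucket" method that Nginx’s limit_req module is documented to use. It is a simplification under stated assumptions: state lives in a dict rather than shared memory, time is passed in explicitly, and requests over the limit are rejected outright, whereas Nginx by default delays requests that fall within the configured burst. The class name is hypothetical.

```python
class LeakyBucketLimiter:
    """Allow on average `rate` requests per second per key, plus up to `burst` extra at once."""

    def __init__(self, rate: float, burst: int = 0):
        self.rate = rate  # how many requests drain out of the bucket per second
        self.capacity = burst + 1  # how many requests the bucket can hold at once
        self._state = {}  # key (e.g. client IP) -> (level, last_seen)

    def allow(self, key: str, now: float) -> bool:
        level, last = self._state.get(key, (0.0, now))
        # Drain the bucket for the time elapsed since this key was last seen.
        level = max(0.0, level - (now - last) * self.rate)
        if level + 1 > self.capacity:
            self._state[key] = (level, now)
            return False  # over the limit; Nginx's limit_req answers 503 by default
        self._state[key] = (level + 1, now)
        return True
```

With rate=1.0 and no burst, a client may send one request per second: a second request half a second later is refused, while one a full second later is accepted. Each key has its own bucket, so one busy client does not affect another.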

Nginx vs. Apache: Understanding the Key Differences

When discussing web servers, the comparison between Nginx and the Apache HTTP Server (often just called “Apache”) inevitably comes up. Both are powerful, free, open-source web servers, but they have different strengths stemming from their distinct architectures and design philosophies. Understanding these differences helps you choose the right tool.

The most fundamental difference lies in their connection handling architecture. As discussed, Nginx uses an event-driven, asynchronous model. Apache’s behavior depends on its Multi-Processing Module (MPM): prefork dedicates a process to each connection, worker serves connections with threads spread across several processes, and event, the default in many modern Apache 2.4 installations, adds a dedicated listener thread for idle keep-alive connections, which narrows the gap with Nginx somewhat.

Generally, Nginx excels and is often considered more performant when handling static content and managing a high number of concurrent connections due to its lower resource footprint per connection. Its architecture is inherently suited for tasks like reverse proxying and load balancing efficiently.

Apache, on the other hand, is renowned for its flexibility and powerful module system. Features like dynamic module loading and per-directory configuration using .htaccess files offer ease of use, especially in shared hosting environments. However, .htaccess can hurt performance when overused, because with .htaccess support enabled Apache must check every directory along the request path for these files on each request.

Configuration styles also differ. Nginx uses a declarative syntax built from nested blocks (server, location). Apache groups directives into XML-like sections such as <VirtualHost> and <Directory>, with optional per-directory overrides in .htaccess files. Neither is inherently better, but developers often have a preference based on familiarity or perceived logical structure.
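To show how Nginx’s block structure drives request handling, here is a simplified Python model of how a request URI is matched to a location block. It is a hypothetical sketch that covers only two rules: an exact match (location = /path) wins outright, otherwise the longest matching prefix (location /path) is chosen. Regex locations and the ^~ modifier, which also affect Nginx’s real selection order, are deliberately left out.

```python
from typing import Iterable, Optional, Tuple


def match_location(
    uri: str, exact: Iterable[str], prefixes: Iterable[str]
) -> Optional[Tuple[str, str]]:
    """Pick a location for `uri` using a simplified subset of Nginx's rules."""
    # 1. An exact-match location ("location = /path") is used immediately.
    if uri in set(exact):
        return ("=", uri)
    # 2. Otherwise, the longest prefix location that the URI starts with wins.
    matches = [p for p in prefixes if uri.startswith(p)]
    if not matches:
        return None
    return ("prefix", max(matches, key=len))
```

Given prefix locations /, /images/, and /images/icons/, a request for /images/icons/a.png lands in the most specific block, while /about falls through to the catch-all / location.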

It’s crucial to note that Nginx and Apache aren’t mutually exclusive; they are often used together effectively. A common pattern involves using Nginx as the front-end server (handling static files, SSL, acting as a reverse proxy/load balancer) while Apache runs on the backend, perhaps handling dynamic content processing with specific modules.

Ultimately, the “better” choice depends entirely on the specific needs of your project. For high-concurrency static content delivery or proxying, Nginx often has an edge. For maximum configuration flexibility or specific module requirements, Apache might be preferred. Many modern deployments leverage Nginx’s strengths at the edge.

Nginx Open Source vs. Nginx Plus

Nginx comes in two main editions. Nginx Open Source is the free, widely used version, open to community contributions and available at nginx.org. It contains the vast majority of features discussed here and powers millions of websites globally.

Nginx Plus is a commercial product offered by F5 (the company that acquired Nginx, Inc.). It builds on the open-source version, adding enterprise-grade features like advanced load balancing algorithms, active health checks for backend servers, enhanced monitoring dashboards, a real-time configuration API, and dedicated commercial support.

For most users, especially when starting, the powerful Nginx Open Source version is more than sufficient. Nginx Plus is typically considered for complex, large-scale deployments with specific needs for its advanced features and support requirements. You can always start with open source and evaluate Plus later if needed.

Installation

Installing Nginx is usually straightforward on most Linux distributions. It’s typically available through standard package managers. For example, on Ubuntu/Debian systems, you can often install it using a command like sudo apt update && sudo apt install nginx.

On RHEL-family systems such as CentOS, Rocky Linux, or Fedora, the command is typically sudo dnf install nginx; older CentOS releases that still use yum may need the EPEL repository first (sudo yum install epel-release && sudo yum install nginx). Official Windows builds are available on nginx.org, while on macOS Nginx is commonly installed through a package manager such as Homebrew (brew install nginx).

Frequently Asked Questions (FAQ) about Nginx

1. Is Nginx free?

Yes, the core Nginx Open Source software is completely free to use, modify, and distribute under the permissive 2-clause BSD license. There is also a commercial version, Nginx Plus, offered by F5 with additional enterprise features and support, which requires a paid subscription.

2. Can Nginx handle dynamic websites directly?

No, not directly in the sense of executing code like PHP or Python itself. Nginx excels at serving static files. For dynamic content, it efficiently proxies the request to a dedicated backend application server (like PHP-FPM, a Node.js server, or a Python WSGI/ASGI server) which processes the code and returns the result for Nginx to deliver.

3. Is Nginx better than Apache?

Neither is definitively “better”; they have different strengths. Nginx generally performs better under high concurrency and excels at static content delivery and proxying. Apache offers great flexibility, a vast module ecosystem, and easy configuration via .htaccess. The best choice depends on your specific needs, and they are often used together.

4. What is Nginx primarily used for?

Its most common uses today include acting as a high-performance web server (especially for static content), a reverse proxy (for security, SSL termination, caching), and a load balancer (to distribute traffic across multiple backend servers), often performing these roles simultaneously.

5. Is Nginx difficult to configure?

Basic configurations for simple websites or proxy setups are relatively straightforward once you understand the block structure (server, location) and common directives. However, complex configurations involving intricate rewrite rules, advanced caching, or custom modules can become quite challenging and require careful reading of the documentation.