Edge computing is a way of processing data near where it is created, instead of sending it far away to a central server. This approach brings computation and data storage closer to the devices and users that need them.

Ever wondered why your smart devices respond so quickly, or how things like self-driving cars make split-second decisions? Often, the answer lies in a powerful technology called edge computing. It might sound complex, but it’s revolutionizing how we process data by bringing computation closer to where it’s needed most.

If you’ve heard the term but aren’t quite sure what it means, you’re in the right place. This article breaks down exactly what edge computing is, why it’s becoming so important, how it works, and the real-world benefits it offers. We’ll explore its uses and how it relates to other technologies like the cloud and 5G.

Defining Edge Computing: Beyond the Buzzword

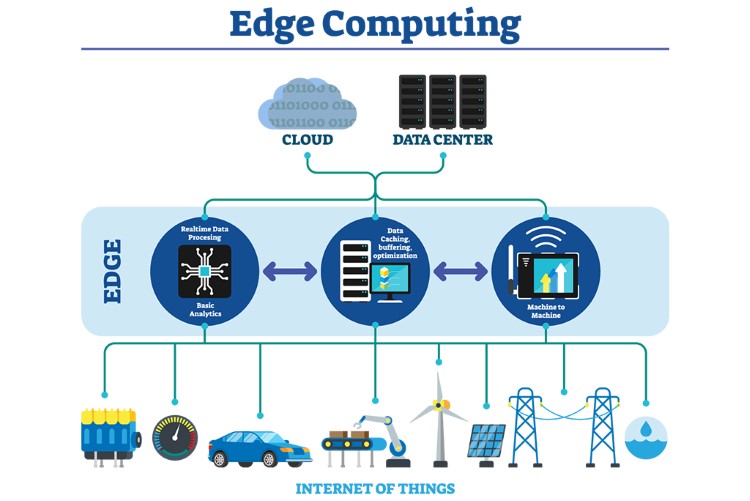

Edge computing fundamentally means performing computation closer to the edge of the network, near the data source. This contrasts with traditional cloud computing, where data travels to large, centralized data centers for processing.

Think of it like having mini-data centers distributed geographically. Instead of all information flowing back to a central headquarters (the cloud), many tasks are handled by local “branch offices” (the edge) that are closer to the action.

This “edge” isn’t one specific place. It refers to any computing resource located between the data source (like a sensor or your phone) and the main cloud data center. It could be the device itself, a local server in a factory, or specialized hardware nearby.

The core idea is simple: reduce the distance data needs to travel. By processing information locally, edge computing aims to overcome limitations inherent in relying solely on centralized cloud infrastructure for certain tasks.

Why Do We Need Edge Computing? The Problem with the Cloud Alone

While cloud computing offers immense power and storage, sending all data to a distant cloud server isn’t always practical or efficient. Certain applications face challenges that edge computing directly addresses.

These challenges primarily revolve around speed, data volume, and the need for immediate action. Relying solely on the cloud can lead to delays and inefficiencies in specific scenarios. Let’s look at the main drivers for edge adoption.

The Latency Challenge

Latency is the time it takes for data to travel from its source to the processing center and back. When data has to travel hundreds or thousands of miles to a cloud data center, even small delays add up.

For many applications, like browsing a website, a slight delay is acceptable. However, for others, it’s a critical failure point. Imagine a self-driving car needing to instantly detect and react to an obstacle. Waiting for data to reach the cloud and return could be catastrophic.

Similarly, industrial control systems monitoring high-speed machinery require near-instantaneous feedback. Remote surgery using robotics demands minimal lag. Interactive applications like cloud gaming or Virtual Reality (VR) suffer from high latency, leading to poor user experiences. Edge computing minimizes this travel time by processing data nearby.
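To get a feel for how distance alone adds delay, here is a rough back-of-the-envelope sketch. It assumes signals travel through optical fiber at roughly 200,000 km/s (about two-thirds the speed of light in a vacuum). The two distances are made-up examples, and real latency will be higher once routing, queuing, and processing time are added.

```python
# Assumption: light in optical fiber covers roughly 200,000 km per second.
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_propagation_ms(distance_km):
    """Return the minimum round-trip propagation delay in milliseconds."""
    one_way_seconds = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_seconds * 1000


# Hypothetical distances: a distant cloud region vs. an on-site edge server.
cloud_ms = round_trip_propagation_ms(2000)
edge_ms = round_trip_propagation_ms(10)

print(f"cloud: {cloud_ms:.1f} ms, edge: {edge_ms:.1f} ms")
```

Under these assumptions, the trip to the distant data center costs about 20 ms before any work is done, while the nearby edge server costs about 0.1 ms.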

Bandwidth Bottlenecks

Bandwidth refers to the amount of data that can be transferred over a network connection in a given time. Many modern applications, especially those involving the Internet of Things (IoT), generate enormous amounts of data.

Consider a factory floor with hundreds of sensors monitoring equipment temperature, vibration, and output. Or think about multiple high-definition video cameras used for security surveillance. Sending all this raw data continuously to the cloud consumes significant bandwidth.

This can be expensive, especially over cellular or satellite connections. It can also clog the network, slowing down other critical communications. Edge computing helps by processing data locally and only sending essential summaries, alerts, or processed results to the cloud, drastically reducing bandwidth needs.
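The savings are easy to estimate with some simple arithmetic. The sketch below compares streaming every raw sample to the cloud against sending one small summary per sensor per minute. All the numbers (500 sensors, 100 samples per second, 8-byte samples, 32-byte summaries) are hypothetical and only there to illustrate the calculation.

```python
SECONDS_PER_HOUR = 3600


def raw_bytes_per_hour(sensors, samples_per_second, bytes_per_sample):
    """Data volume if every raw sample is sent to the cloud."""
    return sensors * samples_per_second * bytes_per_sample * SECONDS_PER_HOUR


def summary_bytes_per_hour(sensors, summaries_per_hour, bytes_per_summary):
    """Data volume if the edge sends only periodic summaries."""
    return sensors * summaries_per_hour * bytes_per_summary


# Hypothetical factory floor.
raw = raw_bytes_per_hour(sensors=500, samples_per_second=100, bytes_per_sample=8)
summary = summary_bytes_per_hour(sensors=500, summaries_per_hour=60, bytes_per_summary=32)

print(f"raw: {raw / 1e9:.2f} GB/hour")
print(f"summaries: {summary / 1e6:.2f} MB/hour")
print(f"reduction factor: {raw // summary}x")
```

With these example figures, raw streaming needs about 1.44 GB per hour, while summaries need under 1 MB per hour.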

Real-time Requirements

Some applications demand immediate analysis and response, often termed real-time processing. This means decisions must be made in fractions of a second, right where the data is generated.

Think of a security system detecting an intrusion. It needs to trigger an alarm immediately, not after sending video footage to the cloud for analysis. An Automated Teller Machine (ATM) needs to verify a transaction instantly.

In these cases, relying on a round trip to the cloud introduces unacceptable delays. Edge computing enables local devices or servers to analyze data and act on it instantly, ensuring timely responses for critical functions.
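One way to reason about this is as a time budget: the network round trip plus the processing time has to fit within the application's deadline. The sketch below uses invented numbers for a cloud path and an edge path. The `Path` class and the 50 ms deadline are illustrative assumptions, not measurements.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """A hypothetical route a request can take, with its time costs."""
    name: str
    network_round_trip_ms: float
    processing_ms: float

    def response_ms(self):
        return self.network_round_trip_ms + self.processing_ms


def meets_deadline(path, deadline_ms):
    """True if the total response time fits within the deadline."""
    return path.response_ms() <= deadline_ms


# Illustrative values: the cloud computes faster but is farther away.
cloud = Path("cloud", network_round_trip_ms=80.0, processing_ms=5.0)
edge = Path("edge", network_round_trip_ms=2.0, processing_ms=15.0)
DEADLINE_MS = 50.0

for path in (cloud, edge):
    verdict = "ok" if meets_deadline(path, DEADLINE_MS) else "too slow"
    print(f"{path.name}: {path.response_ms():.0f} ms ({verdict})")
```

Note that in this example the edge hardware is slower at the actual computation, yet it still meets the deadline because it avoids the long network trip.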

How Does Edge Computing Work? A Simple Breakdown

Edge computing architecture involves distributing parts of the data processing workflow away from the central cloud and closer to the data source. It typically involves several key components working together.

The goal is to handle data intelligently at different points in the network, optimizing for speed, efficiency, and reliability. Here’s a simplified view of the process:

Step 1: Data Generation at the Edge

It starts with devices at the edge generating data. These edge devices can be anything from simple sensors (temperature, motion) and actuators (valves, switches) to complex machines like industrial robots, smartphones, smart cameras, or vehicles.

These devices capture raw information about their environment or operation. This could be temperature readings, video feeds, location coordinates, user inputs, or machine performance metrics.

Step 2: Local Processing & Analysis

Instead of going straight to the cloud, the raw data first goes to nearby edge infrastructure. This could be an edge gateway, which aggregates data from multiple devices, or a more powerful edge server located on-premises (like in a factory or store).

These edge nodes (a general term for edge gateways or servers) perform initial processing, analysis, or filtering. For example, an edge server might analyze video feeds locally to detect specific events, run machine learning models, or aggregate sensor readings. Only relevant or summarized data might proceed further.
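As a minimal sketch of what "aggregate sensor readings" could look like on an edge node, the function below turns a window of raw temperature readings into one compact summary with an alert flag. The function name, the field names, and the threshold are assumptions made for illustration.

```python
from statistics import mean


def summarize(readings, alert_threshold):
    """Reduce a window of raw readings to a small summary record."""
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        # Flag the window if any single reading crossed the threshold.
        "alert": max(readings) > alert_threshold,
    }


# Hypothetical window of temperature readings from one machine.
window = [70.1, 70.4, 69.8, 71.0, 88.5]
summary = summarize(window, alert_threshold=85.0)
print(summary)
```

Five readings go in and one small record comes out, which is the piece that would travel upstream.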

Step 3: Selective Cloud Communication

After local processing, only the necessary information is sent to the central cloud data center. This could be summarized reports, critical alerts requiring further analysis, data needed for long-term storage, or information required for training larger AI models.

This selective communication drastically reduces the load on the network and the cloud. The cloud can then focus on large-scale analytics, long-term data storage, and coordinating multiple edge locations, while the edge handles immediate, localized tasks.

(Conceptual Flow: Device -> Edge Gateway/Server (Local Processing) -> Cloud (Centralized Storage/Analysis))
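The flow above can be sketched in a few lines of code. This is a toy model, not a real edge framework: `CloudStub` stands in for a cloud endpoint, and `EdgeNode`, its threshold, and its batch size are hypothetical names and values chosen for the example.

```python
class CloudStub:
    """Stand-in for a cloud endpoint; it just records what it receives."""

    def __init__(self):
        self.received = []

    def upload(self, message):
        self.received.append(message)


class EdgeNode:
    """Processes readings locally and forwards only alerts and summaries."""

    def __init__(self, cloud, threshold, batch_size):
        self.cloud = cloud
        self.threshold = threshold
        self.batch_size = batch_size
        self.buffer = []

    def ingest(self, device_id, value):
        self.buffer.append(value)
        # Critical readings are forwarded right away as alerts.
        if value > self.threshold:
            self.cloud.upload({"type": "alert", "device": device_id, "value": value})
        # Everything else reaches the cloud only as a periodic summary.
        if len(self.buffer) >= self.batch_size:
            average = sum(self.buffer) / len(self.buffer)
            self.cloud.upload({"type": "summary", "count": len(self.buffer), "mean": average})
            self.buffer.clear()


cloud = CloudStub()
edge = EdgeNode(cloud, threshold=90, batch_size=3)
for value in [20, 21, 95, 22, 20, 21]:
    edge.ingest("sensor-1", value)

print(len(cloud.received), "messages sent for 6 readings")
```

In this run, six raw readings result in three uploads: one alert and two summaries.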

Key Benefits of Edge Computing

By processing data closer to the source, edge computing delivers several significant advantages over relying solely on a centralized cloud model. These benefits drive its adoption across various industries.

Organizations implementing edge strategies often see improvements in speed, cost, security, and operational resilience. Let’s explore the primary benefits:

Benefit 1: Speed Boost – Reducing Latency

This is often the most cited benefit. Edge computing dramatically reduces latency by minimizing the physical distance data travels. Processing occurs near the source, leading to much faster response times.

As discussed earlier, this is crucial for applications needing real-time or near-real-time performance. Examples include industrial automation, autonomous systems, AR/VR, and online gaming, where delays directly impact functionality and user experience. Lower latency means quicker insights and faster actions.

Benefit 2: Saving Bandwidth (and Costs)

Transmitting massive amounts of raw data from potentially thousands of edge devices to the cloud is costly and inefficient. Edge computing conserves bandwidth by processing data locally and sending only essential information upstream.

This significantly reduces data transmission costs, especially over metered connections like cellular. It also frees up network capacity for other critical communications, preventing congestion and improving overall network performance. This cost-effectiveness is a major driver for edge adoption.

Benefit 3: Enhancing Security and Privacy

Sending sensitive data across networks to the cloud increases exposure to potential interception or cyberattacks. Processing data locally on edge devices or servers can enhance security and privacy.

Sensitive information, like personal health data from wearables or proprietary manufacturing data, can be analyzed and anonymized at the edge before anything is transmitted. This reduces the attack surface and helps comply with data privacy regulations (like GDPR or HIPAA) by keeping data localized. However, securing the distributed edge devices themselves presents new challenges.
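To make the "process locally before transmitting" idea concrete, here is a small sketch that replaces a patient identifier with a keyed hash and drops fields the cloud does not need. Strictly speaking this is pseudonymization rather than full anonymization, and it is not by itself enough for regulatory compliance. The record layout and the key are hypothetical.

```python
import hashlib
import hmac

# Assumption: a secret that never leaves the edge device.
SECRET_KEY = b"replace-with-a-device-local-secret"


def pseudonymize(record, key=SECRET_KEY):
    """Return a copy of the record that is safer to send upstream."""
    token = hmac.new(key, record["patient_id"].encode("utf-8"), hashlib.sha256).hexdigest()
    # Only the token and the measurement leave the device; the name is dropped.
    return {"patient_token": token, "heart_rate_bpm": record["heart_rate_bpm"]}


# Hypothetical reading from a wearable.
raw_record = {"patient_id": "P-1001", "name": "Jane Doe", "heart_rate_bpm": 72}
safe_record = pseudonymize(raw_record)
print(sorted(safe_record))
```

The same patient always maps to the same token, so the cloud can still group readings without ever seeing the original identifier or name.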

Benefit 4: Improving Reliability & Autonomous Operation

What happens to cloud-dependent devices when the internet connection drops? They often stop working. Edge computing allows devices and applications to continue operating autonomously even if their connection to the cloud is interrupted.

Because processing logic resides locally, critical functions can continue without constant cloud communication. A smart factory can keep running, or a remote monitoring system can continue collecting data, even during network outages. This improves reliability and resilience, especially in remote or unstable network environments.
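A common pattern for riding out outages is store-and-forward: keep collecting locally, and send the backlog once the link returns. The sketch below is one minimal way to do that. `StoreAndForward` and `FakeLink` are hypothetical names, and the `send` callable is assumed to return `True` on success and `False` on failure.

```python
from collections import deque


class StoreAndForward:
    """Buffers readings locally and delivers them in order when possible."""

    def __init__(self, send, max_buffered=1000):
        self.send = send
        # Once the buffer is full, the oldest readings are dropped first.
        self.pending = deque(maxlen=max_buffered)

    def record(self, reading):
        self.pending.append(reading)
        self.flush()

    def flush(self):
        """Try to deliver the backlog; stop at the first failure."""
        sent = 0
        while self.pending:
            if not self.send(self.pending[0]):
                break
            self.pending.popleft()
            sent += 1
        return sent


class FakeLink:
    """Simulated uplink that can be switched on and off."""

    def __init__(self):
        self.online = True
        self.delivered = []

    def send(self, reading):
        if not self.online:
            return False
        self.delivered.append(reading)
        return True


link = FakeLink()
buffer = StoreAndForward(link.send)
buffer.record(1)
link.online = False      # simulated outage: readings pile up locally
buffer.record(2)
buffer.record(3)
link.online = True       # connection restored: backlog is delivered in order
buffer.record(4)
print(link.delivered)
```

The device never stops recording during the outage, and nothing arrives out of order once the connection is back.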

Benefit 5: Enabling Real-Time Insights

Edge computing facilitates real-time analytics by processing data as soon as it’s generated. Organizations can gain immediate insights and react instantly to changing conditions without waiting for data to travel to the cloud and back.

For example, retailers can analyze shopper behavior in real-time within a store to personalize offers. Manufacturers can detect potential equipment failures instantly through localized sensor data analysis, enabling predictive maintenance and preventing costly downtime.
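As an illustration of localized sensor analysis, the sketch below flags a vibration reading as anomalous when it sits far from the recent average, measured in standard deviations (a simple z-score check). This is just one basic technique among many. The class name, window size, and threshold are assumptions, and real predictive maintenance systems typically use richer models.

```python
from collections import deque
from statistics import mean, stdev


class VibrationMonitor:
    """Flags readings that deviate sharply from the recent history."""

    def __init__(self, window=20, z_threshold=3.0, min_samples=5):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def check(self, value):
        anomalous = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                anomalous = True
        # Keep anomalies out of the baseline so they do not skew it.
        if not anomalous:
            self.history.append(value)
        return anomalous


monitor = VibrationMonitor()
# Hypothetical vibration readings with one obvious spike.
readings = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 5.0, 1.02]
flags = [monitor.check(r) for r in readings]
print(flags)
```

Only the spike of 5.0 is flagged here. The check runs entirely on the local node, so an alert can be raised without a round trip to the cloud.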

Edge Computing vs. Cloud Computing: What’s the Difference?

It’s a common question: Is edge computing replacing the cloud? The simple answer is no. They are distinct but often complementary technologies. Understanding their differences helps clarify their respective roles.

Think of them not as competitors, but as partners in a modern, distributed IT infrastructure. Here’s a breakdown of key differences:

Where Data is Processed

- Cloud Computing: Data is processed in large, centralized data centers, often located far from the end-user or device.

- Edge Computing: Data is processed locally, near the source – on the device itself or on nearby gateways or servers.

Latency & Speed

- Cloud Computing: Generally higher latency due to the distance data must travel. Suitable for tasks tolerant of some delay.

- Edge Computing: Significantly lower latency due to close proximity processing. Ideal for real-time or near-real-time applications.

Bandwidth Needs

- Cloud Computing: Can require substantial bandwidth to transfer large volumes of raw data to the data center.

- Edge Computing: Reduces bandwidth consumption by processing data locally and transmitting only essential information.

Not Enemies, but Partners

Crucially, edge and cloud computing often work together. Edge handles immediate, localized tasks requiring speed and efficiency. The cloud provides powerful centralized processing for big data analytics, long-term storage, coordinating multiple edge locations, and training complex AI models using data aggregated from the edge. Many modern applications use a hybrid approach, leveraging the strengths of both.

Real-World Edge Computing Examples and Use Cases

Edge computing isn’t just a theoretical concept; it’s already being used in numerous applications across various industries. These examples illustrate its practical value:

Smart Devices & IoT

Many Internet of Things (IoT) devices leverage edge computing. A smart speaker that detects its wake word (and sometimes handles simple commands) on the device for faster responses, or a smart thermostat that adjusts temperature based on immediate sensor readings without always needing the cloud, are simple examples. Wearable fitness trackers often analyze basic activity data on the device itself.

Manufacturing & Industry 4.0

In smart factories (Industry 4.0), edge computing enables real-time monitoring and control. Sensors on machinery detect potential failures (predictive maintenance) by analyzing vibration or temperature locally, triggering alerts instantly. Edge systems can also perform real-time quality control checks on production lines using cameras and local image processing.

Autonomous Vehicles

Self-driving cars are a prime example of edge computing. They generate vast amounts of data from sensors (cameras, LiDAR, radar). This data must be processed instantly within the vehicle to make critical driving decisions like braking or steering. Relying on the cloud for such decisions would introduce potentially fatal delays.

Healthcare Technology

Edge computing is transforming healthcare. Wearable patient monitors (like ECG or glucose monitors) can analyze vital signs locally and alert doctors or patients to critical issues immediately. In hospitals, edge servers can process medical imaging data faster or support real-time analytics during robotic surgery.

Smart Cities & Traffic Management

In smart cities, edge computing helps manage infrastructure efficiently. Smart traffic lights can analyze local traffic flow using cameras and sensors, adjusting signal timing in real-time to reduce congestion. Edge servers can also process data from environmental sensors or manage utility grids more effectively.
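To illustrate the traffic light case, here is a deliberately simple sketch in which a local controller splits each signal cycle between approaches in proportion to their measured queue lengths. The proportional rule, the function name, and all the numbers are assumptions for illustration. Real signal controllers use far more sophisticated logic.

```python
def allocate_green_seconds(queue_lengths, cycle_seconds=60, min_green=10):
    """Split a signal cycle across approaches based on local queue lengths."""
    approaches = list(queue_lengths)
    # Every approach gets a guaranteed minimum; the rest is shared out.
    spare = cycle_seconds - min_green * len(approaches)
    if spare < 0:
        raise ValueError("cycle too short for the minimum green times")
    total_queued = sum(queue_lengths.values())
    plan = {}
    for name in approaches:
        if total_queued:
            share = queue_lengths[name] / total_queued
        else:
            share = 1 / len(approaches)  # no traffic: split evenly
        plan[name] = min_green + spare * share
    return plan


# Hypothetical vehicle counts from local cameras or sensors.
plan = allocate_green_seconds({"north_south": 30, "east_west": 10})
print(plan)
```

With three times as many vehicles waiting north-south, that direction gets 40 of the 60 seconds and east-west gets 20.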

Other Examples

- Retail: Analyzing shopper foot traffic in real-time, managing inventory locally, personalized in-store digital signage.

- Content Delivery Networks (CDNs): While distinct from edge computing, CDNs apply the same principle by caching content on servers closer to users for faster website and video delivery.

- Oil & Gas: Monitoring remote pipeline sensors and equipment in areas with limited connectivity.

The Role of 5G and AI in Edge Computing

Two other major technologies, 5G and Artificial Intelligence (AI), have a strong synergistic relationship with edge computing. They enhance edge capabilities and, in turn, benefit from edge deployment.

5G: The High-Speed Enabler

5G, the fifth generation of cellular network technology, offers significantly higher speeds, greater capacity, and crucially, much lower latency compared to previous generations. This low latency and high bandwidth make 5G an ideal partner for edge computing.

5G provides the fast, reliable connectivity needed to link numerous edge devices and enable rapid communication between edge nodes and regional or cloud data centers when necessary. It unlocks the potential for more sophisticated, data-intensive edge applications, like large-scale IoT deployments or high-fidelity AR/VR experiences.

AI: Bringing Intelligence to the Edge

Artificial Intelligence (AI), particularly Machine Learning (ML) models, can be deployed directly on edge devices or servers. This is often called Edge AI. Instead of sending data to the cloud for AI analysis, the analysis happens locally.

This enables real-time intelligent decision-making at the edge – think of a smart camera performing object recognition locally or industrial equipment using local AI for predictive maintenance. Edge AI benefits from lower latency, reduced bandwidth needs (as raw data isn’t sent to the cloud), and enhanced privacy, as sensitive data can be processed on-device.
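Here is a toy sketch of what local inference could look like: a tiny logistic model whose weights are assumed to have been trained elsewhere (for example, in the cloud) and shipped to the device. The weights, bias, and feature names are invented for this example and do not come from any real model.

```python
import math

# Hypothetical parameters, assumed to be trained elsewhere and shipped to the device.
WEIGHTS = {"temperature_c": 0.08, "vibration_mm_s": 0.9}
BIAS = -10.0


def failure_probability(features):
    """Run the model on-device; no raw data leaves the machine."""
    score = BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    return 1 / (1 + math.exp(-score))


# Made-up sensor snapshots for a healthy and a stressed machine.
healthy = failure_probability({"temperature_c": 60, "vibration_mm_s": 2})
stressed = failure_probability({"temperature_c": 90, "vibration_mm_s": 6})

print(f"healthy: {healthy:.2f}, stressed: {stressed:.2f}")
```

The device only needs to send the resulting probability, or an alert when it crosses a limit, rather than the underlying sensor stream.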

Challenges and the Future of Edge Computing

Despite its benefits, edge computing also presents challenges. Managing potentially thousands or millions of distributed edge devices is complex. Ensuring the physical and cyber security of these devices can be difficult, as they increase the potential attack surface. Initial deployment costs and ensuring interoperability between different vendor solutions are also considerations.

However, the trend is clear: edge computing is growing rapidly. As IoT devices proliferate, 5G networks expand, and the demand for real-time applications increases, edge computing will become increasingly integral to our digital infrastructure. It represents a fundamental shift towards more distributed, intelligent, and responsive computing models, working alongside the cloud to power the next wave of digital transformation.

Conclusion: Bringing Computing Closer

In essence, edge computing brings data processing closer to where data is created. It’s a distributed approach designed to overcome the latency, bandwidth, and real-time limitations sometimes faced when relying solely on centralized cloud computing.

By enabling faster responses, saving bandwidth, enhancing security, and improving reliability, edge computing unlocks new possibilities for technologies like IoT, AI, autonomous systems, and much more. It’s not about replacing the cloud, but rather complementing it, creating a more balanced and efficient computing landscape for our increasingly connected world. Understanding edge computing is key to understanding the future of technology.
