In the ever-evolving landscape of software development, efficiency, portability, and scalability are paramount. Enter Docker, a platform that has revolutionized how applications are built, shipped, and run. But what is Docker? In this guide, we’ll demystify Docker, explore its core concepts, and understand why it has become an indispensable tool for developers and system administrators worldwide.

What is Docker?

Docker is an open-source platform that enables developers to build, deploy, and run applications using containers. Containers package an application and its dependencies into a single, portable unit, ensuring that it runs consistently across different environments.

Key Components of Docker Architecture

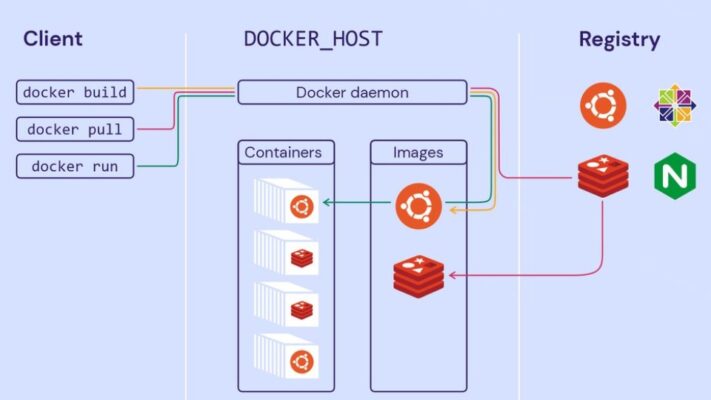

Docker’s architecture centers on the Docker Engine, Docker images, and Docker containers, supported by registries, networking, and volumes. These components work together to manage and deploy containers.

Docker Engine

Docker Engine is the heart of Docker’s architecture. It is a client-server application consisting of:

- Docker Daemon (dockerd): The daemon manages Docker containers, images, networks, and volumes. It runs continuously in the background, creating, starting, and stopping containers as requested.

- Docker CLI (Command-Line Interface): The docker command lets users interact with Docker from the terminal. The CLI sends each command to the daemon, which does the actual work of building images, running containers, and managing networks and volumes.

- REST API: The daemon exposes an HTTP API, which the CLI itself uses. Programs and scripts can call the same API to automate Docker operations, either locally (on Linux, through the Unix socket /var/run/docker.sock by default) or remotely when the daemon is configured to listen on a network address.
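To make the API concrete, here is a minimal sketch of a client that uses only Python’s standard library. It assumes a Linux host where the daemon listens on its default Unix socket, /var/run/docker.sock, and that the current user is allowed to access it. GET /version and GET /containers/json are Docker Engine API endpoints; the helper names UnixHTTPConnection and docker_get are our own, not part of any Docker library.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0) -> None:
        # "localhost" is only used for the Host header; the socket path
        # decides where the request actually goes.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """Send a GET request to the Docker Engine API and decode the JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"Docker API returned {resp.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

With a running daemon, docker_get("/version")["Version"] returns the engine version, and docker_get("/containers/json") returns roughly the same list of running containers that docker ps prints.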

Docker Images and Containers

Docker uses images as the blueprint for creating containers. Here’s how they work together:

- Docker Images: An image is a read-only template that includes everything an application needs to run, such as code, libraries, and dependencies. Docker images are the building blocks for containers.

- Docker Containers: Running an image creates a container. A container is an instance of an image with its own writable layer and an isolated environment where the application runs; the image itself stays unchanged, so many containers can be started from the same image. Containers are lightweight and efficient compared to traditional virtual machines.
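The relationship can be modeled in a few lines of Python. This is a conceptual sketch, not how Docker actually stores files: the image is a stack of read-only layers (plain dictionaries mapping paths to contents), and each container adds its own writable layer on top. collections.ChainMap happens to follow the same lookup rule a union filesystem uses: reads check the top layer first, and writes always land in the top (container) layer. The Image class and file paths are hypothetical.

```python
from collections import ChainMap
from types import MappingProxyType


class Image:
    """A read-only stack of layers; the last layer passed in is the topmost."""

    def __init__(self, *layers: dict) -> None:
        # MappingProxyType wraps each layer in a read-only view.
        self.layers = tuple(MappingProxyType(dict(layer)) for layer in layers)

    def run(self) -> ChainMap:
        """Create a 'container': a fresh writable layer on top of the image."""
        writable = {}
        # ChainMap searches its maps left to right, so the writable layer goes
        # first, followed by the image layers from top to bottom.
        return ChainMap(writable, *reversed(self.layers))


base = {"/etc/os-release": "Alpine", "/usr/bin/python3": "python 3.12"}
app = {"/app/main.py": "print('v1')"}
image = Image(base, app)

c1 = image.run()
c2 = image.run()
c1["/app/main.py"] = "print('patched')"  # the write goes to c1's own layer

print(c1["/app/main.py"])  # print('patched')
print(c2["/app/main.py"])  # print('v1'), since the image and c2 are unchanged
```

Removing a container in this model simply means discarding its ChainMap, which is why anything written only to the writable layer disappears with it.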

Docker Registries

Docker Hub and other registries allow users to store and distribute Docker images. Docker Hub is the default public registry, where millions of pre-built images are available for download. Developers can upload their custom images as well, enabling easy sharing across teams or the community.
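When you pull an image by a short name, the Docker CLI fills in defaults: Docker Hub (docker.io) as the registry, library/ as the namespace for official images, and latest as the tag. The sketch below mirrors those rules in simplified form. It ignores digest references (name@sha256:...) and other edge cases, and the function name normalize is our own.

```python
def normalize(reference: str) -> str:
    """Expand a short image reference to registry/namespace/name:tag (simplified)."""
    registry = "docker.io"
    remainder = reference
    first, sep, rest = reference.partition("/")
    # The first component is a registry host if it looks like a domain,
    # a host:port pair, or "localhost".
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest

    # A tag can only follow the last "/", so a registry port is never
    # mistaken for a tag.
    path, _, last = remainder.rpartition("/")
    name, colon, tag = last.partition(":")
    if not colon:
        tag = "latest"
    repo = f"{path}/{name}" if path else name

    # Official Docker Hub images live under the "library" namespace.
    if registry == "docker.io" and "/" not in repo:
        repo = f"library/{repo}"
    return f"{registry}/{repo}:{tag}"
```

For example, normalize("nginx") returns "docker.io/library/nginx:latest", normalize("bitnami/redis:7.2") returns "docker.io/bitnami/redis:7.2", and normalize("localhost:5000/myapp") returns "localhost:5000/myapp:latest", which is how a private registry is addressed.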

Docker Networking

Docker’s networking model is another important part of its architecture. It enables containers to communicate with each other and with the outside world. Docker provides several networking modes, including:

- Bridge Network: This is the default network mode for containers, providing basic networking between containers on the same host.

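- Host Network: Containers share the host’s network namespace. There is no network isolation and no port mapping, which avoids NAT overhead but means a container’s ports are bound directly on the host.

- Overlay Network: Used with Docker Swarm to connect containers running on different hosts so they can communicate as if they were on one network. Encryption of application traffic between hosts is optional and must be enabled when the network is created.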

Docker Volumes

Docker volumes provide a way to persist data outside of the container’s writable filesystem layer. Containers are meant to be disposable: data written inside a container survives stopping and restarting it, but is lost once the container is removed. Volumes live independently of any container, so important data is retained even after the container is deleted and recreated, for example when you upgrade to a newer image.
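A toy model makes the lifecycle difference visible. In this sketch (plain Python dictionaries, not Docker’s real storage drivers), a container’s writable layer lives and dies with the container, while a named volume is stored separately on the host and mounted at a path. The Host class, the volume name pgdata, and the paths are hypothetical.

```python
from typing import Optional


class Host:
    """Toy model of a Docker host: containers and named volumes kept separately."""

    def __init__(self) -> None:
        self.containers = {}  # container name -> (writable layer, {mount path: volume name})
        self.volumes = {}     # volume name -> {path inside the volume: contents}

    def run(self, name: str, mounts: Optional[dict] = None) -> None:
        mounts = mounts or {}
        for volume in mounts.values():
            self.volumes.setdefault(volume, {})  # create the volume on first use
        self.containers[name] = ({}, mounts)

    def _resolve(self, name: str, path: str):
        """Return the dict that stores `path` for this container, plus the key to use."""
        layer, mounts = self.containers[name]
        for mount_path, volume in mounts.items():
            if path.startswith(mount_path + "/"):
                # Paths under a mount point live in the volume, outside the container.
                return self.volumes[volume], path[len(mount_path) + 1:]
        return layer, path  # everything else lives in the container's writable layer

    def write(self, name: str, path: str, data: str) -> None:
        store, key = self._resolve(name, path)
        store[key] = data

    def read(self, name: str, path: str) -> Optional[str]:
        store, key = self._resolve(name, path)
        return store.get(key)

    def rm(self, name: str) -> None:
        # Removing a container discards its writable layer; volumes are untouched.
        del self.containers[name]


host = Host()
db_data = "/var/lib/postgresql/data"
host.run("db1", mounts={db_data: "pgdata"})
host.write("db1", db_data + "/table.dat", "rows")
host.write("db1", "/tmp/scratch", "temp")
host.rm("db1")
host.run("db2", mounts={db_data: "pgdata"})  # e.g. recreated from a newer image
print(host.read("db2", db_data + "/table.dat"))  # rows
print(host.read("db2", "/tmp/scratch"))          # None
```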

How does Docker work?

Docker works by using the host operating system’s kernel to create isolated environments called containers. These containers package an application and its dependencies, ensuring consistent operation across different environments. It leverages key Linux kernel features for isolation.

The Building Blocks

The process starts with a Dockerfile, a plain text file that acts as a blueprint. It contains a series of instructions that specify the base image (for example, an Ubuntu or Alpine Linux image), install necessary software packages, copy application code, and configure the environment. Think of it as a recipe for creating a consistent, reproducible environment.

Next, the Docker Engine uses the Dockerfile to build a Docker image. The image is a read-only template: a snapshot of the application and its dependencies at a specific point in time. Images are built in layers; instructions that change the filesystem (such as RUN, COPY, and ADD) each add a new layer, while others (such as CMD or ENV) only record metadata. Layering makes images efficient to store and transfer: layers already present locally are reused, so an update only needs to download the layers that changed.
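Why does layering make rebuilds and updates cheap? A simplified way to see it is to give each build step an ID that hashes its parent’s ID together with the instruction that produced it. This is an illustrative model only: Docker’s real build cache also checksums the files that COPY and ADD bring in (the text in parentheses below stands in for that checksum), and stored layers are identified by digests of their contents. It still shows the key property: changing one instruction changes that step and every step after it, while the steps before it are reused. The function name layer_ids is our own.

```python
import hashlib


def layer_ids(instructions: list[str]) -> list[str]:
    """Chain each instruction onto its parent's ID, like a simplified build cache key."""
    ids = []
    parent = ""
    for instruction in instructions:
        digest = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()
        ids.append(digest[:12])  # short form for display
        parent = digest
    return ids


base = ["FROM python:3.12-slim", "RUN pip install flask==3.0.0"]
v1 = layer_ids(base + ["COPY app.py /app/ (content v1)"])
v2 = layer_ids(base + ["COPY app.py /app/ (content v2)"])

print(v1[:2] == v2[:2])  # True: the base and dependency steps are reused
print(v1[2] == v2[2])    # False: only the changed step is rebuilt
```

This is also why Dockerfiles usually install dependencies before copying application code: code changes then invalidate only the final, cheap steps.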

These images are often stored in a registry, with Docker Hub being the most popular and largest public registry. A registry is like a central library for Docker images. You can pull images from a registry (like downloading a pre-built application) or push your own custom-built images to share them with others.

From Image to Container

The magic truly happens when you run a Docker image. This creates a Docker container. A container is a running instance of an image – it’s where the application actually executes. It’s isolated from the host operating system and other containers, although it shares the host’s kernel. This isolation is crucial for security and consistency.

For example, imagine you have a Python web application that requires specific versions of Python and various libraries. Instead of installing these directly onto your server (and potentially causing conflicts with other applications), you can create a Docker image that includes everything the application needs. When you run this image, Docker creates a container where the application can run in its own isolated environment, without affecting anything else on your system.

Key Technologies: The Inner Workings

Docker’s functionality relies heavily on several underlying Linux kernel features, primarily namespaces and control groups (cgroups).

- Namespaces: Provide isolation. Different namespaces isolate different aspects of the system, such as the process tree (PID namespace), network interfaces (network namespace), and file system mounts (mount namespace). This ensures that processes within a container can’t see or interfere with processes in other containers or on the host system.

- Control Groups (cgroups): Limit and manage resource usage. Cgroups allow you to allocate specific amounts of CPU, memory, and I/O to each container. This prevents any single container from consuming all the available resources and impacting other containers or the host system.
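On a Linux host you can see namespaces directly: each process has symbolic links under /proc/<pid>/ns whose targets identify the namespaces it belongs to. The short script below is Linux-only; it reads /proc and does not call Docker. The function name namespaces is our own.

```python
import os


def namespaces(pid: str = "self") -> dict[str, str]:
    """Map namespace type (pid, net, mnt, ...) to its identifier, e.g. 'pid:[4026531836]'."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Entries of other users' processes may not be readable.
            continue
    return result


for kind, ident in namespaces().items():
    print(f"{kind:20} {ident}")
```

Running it once on the host and once inside a container (for example, via docker exec) should typically show different pid, net, mnt, uts, and ipc identifiers. The user namespace is usually the same in both, because Docker does not enable user namespace remapping by default.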

These technologies, combined, provide the lightweight, isolated environment that makes Docker containers so efficient.

Orchestrating Multiple Containers

While running a single container is useful, many applications are composed of multiple interacting services. Docker Compose is a tool that simplifies the management of multi-container applications. You define the services, networks, and volumes in a YAML file (docker-compose.yml, or compose.yaml in current versions of Compose), and Docker Compose creates, configures, and connects the containers.

For instance, a web application might consist of a web server container (e.g., Nginx), an application server container (e.g., Node.js), and a database container (e.g., PostgreSQL). Docker Compose allows you to define these services and their relationships, ensuring they can communicate with each other.
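Compose uses the dependencies you declare (the depends_on key) to decide the order in which to start services. That ordering is a topological sort, sketched here with Python’s standard graphlib module for the three-service example above. The service names are hypothetical, and the sketch only models start order: by default, depends_on waits for a dependency’s container to start, not for the service inside it to be ready, unless you combine it with a health check condition.

```python
from graphlib import TopologicalSorter

# Each service maps to the services it depends on, like depends_on in a Compose file.
services = {
    "web": {"app"},  # Nginx proxies requests to the Node.js app
    "app": {"db"},   # the app needs PostgreSQL
    "db": set(),
}

start_order = list(TopologicalSorter(services).static_order())
stop_order = start_order[::-1]  # dependents are stopped before what they depend on

print(start_order)  # ['db', 'app', 'web']
print(stop_order)   # ['web', 'app', 'db']
```

TopologicalSorter also raises graphlib.CycleError for circular dependencies, which a real Compose file is likewise not allowed to contain.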

Benefits of Using Docker

Docker offers numerous benefits, primarily consistency, isolation, portability, scalability, and efficiency. These advantages stem from its containerization technology, which has changed how applications are developed, deployed, and managed.

Consistent Environments

Docker largely eliminates the “it works on my machine” problem. By packaging an application and all its dependencies into an image, Docker ensures that it runs the same way on any host with a compatible kernel and CPU architecture. This is a game-changer for development teams, as it removes inconsistencies between development, testing, and production environments. Whether you’re running on a developer’s laptop, a test server, or a cloud provider, the application behaves the same way.

Isolation and Security

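Docker containers provide a useful degree of isolation. Each container runs in its own isolated environment, separated from other containers and the host operating system. This isolation enhances security by limiting the impact of potential vulnerabilities: a compromised container does not automatically give an attacker access to other containers or the host. Because all containers share the host kernel, however, the isolation is weaker than that of a virtual machine, and a kernel vulnerability or a misconfiguration (such as running privileged containers) can break it. Within those limits, isolation is what makes it practical to run multiple applications on the same server without interference.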

Portability Across Platforms

Docker containers are highly portable. The same image can run in different environments, from a developer’s laptop to a staging server to a cloud provider like AWS, Azure, or Google Cloud, without code changes, as long as the target supports the image’s CPU architecture (or you publish multi-architecture images). This portability simplifies deployment and allows for greater flexibility in choosing infrastructure. For example, a company could develop an application on-premises and then deploy it to a cloud provider for scalability.

Scalability and Resource Efficiency

Docker facilitates easy scaling of applications. Because containers are lightweight and start quickly, you can rapidly spin up multiple instances of a container to handle increased load. This is particularly useful for web applications and microservices that need to scale dynamically based on demand, typically with the help of an orchestrator such as Docker Swarm or Kubernetes.

Furthermore, Docker’s approach is considerably more resource-efficient than traditional virtual machines. Containers share the host operating system’s kernel, avoiding the overhead of running a full operating system for each application instance. This means you can run more applications on the same hardware, reducing infrastructure costs.

Startup Speed

Containers are typically created and started in seconds or less, because starting a container launches an isolated process rather than booting an operating system. A virtual machine must boot a full guest operating system, which usually takes considerably longer.

Version Control and Rollbacks

Docker images support versioning through tags (for example, myapp:1.4), and every image also has an immutable content digest. This allows you to track changes to your application and its environment over time. If a new version of your application introduces a bug, you can roll back by redeploying the previous, stable image.

This simplifies debugging and reduces the risk of deploying faulty updates. Reverting your entire application environment to a known-good version can take a single command, provided that any data or schema changes made by the new version remain compatible with the old one.
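Under the hood, a tag such as myapp:1.4 is a movable name, while the image it points to is identified by an immutable content digest. A rollback therefore means pointing your deployment back at an earlier digest. The sketch below models that with a dictionary. The repository name, the tags, and the Deployment class are hypothetical, and real digests are SHA-256 hashes of the image manifest rather than of the strings used here.

```python
import hashlib


def digest(content: str) -> str:
    """Stand-in for an image digest: a SHA-256 over some content."""
    return "sha256:" + hashlib.sha256(content.encode()).hexdigest()


class Deployment:
    def __init__(self) -> None:
        self.tags = {}     # tag -> digest (tags can be moved to other images)
        self.history = []  # digests that have been deployed, oldest first

    def push(self, tag: str, content: str) -> str:
        self.tags[tag] = digest(content)
        return self.tags[tag]

    def deploy(self, tag: str) -> None:
        # Record the digest, not the tag, so we know exactly which image ran.
        self.history.append(self.tags[tag])

    def rollback(self) -> str:
        """Drop the current deployment and return the previously deployed digest."""
        if len(self.history) < 2:
            raise RuntimeError("nothing to roll back to")
        self.history.pop()
        return self.history[-1]


d = Deployment()
good = d.push("myapp:1.4", "build of commit a1")
d.deploy("myapp:1.4")
d.push("myapp:1.5", "build of commit b2")
d.deploy("myapp:1.5")          # suppose 1.5 turns out to be buggy
print(d.rollback() == good)    # True: back to exactly the image that ran before
```

Recording digests rather than tags matters because a tag like latest may have been moved to a different image in the meantime.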

DevOps and Continuous Integration/Continuous Delivery (CI/CD)

Docker is a cornerstone of modern DevOps practices. It seamlessly integrates into CI/CD pipelines, enabling automated building, testing, and deployment of applications. For instance, a code change can automatically trigger the creation of a new Docker image, which is then tested and, if successful, deployed to production. This automation speeds up the development process and reduces the risk of manual errors.
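The build, test, and deploy flow described above can be sketched as a small script. It tags each image with the Git commit SHA so every build is traceable, and stops before pushing if the build or the tests fail. The commands are standard Docker CLI invocations (docker build -t, docker run --rm, docker push), but the registry name, the pytest test command, and the helper names are assumptions. The command runner is passed in as a parameter so the logic can be exercised without Docker installed.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]


def run_command(argv: Sequence[str]) -> int:
    """Default runner: execute the command and return its exit code."""
    return subprocess.run(list(argv)).returncode


def pipeline(commit_sha: str, registry: str = "registry.example.com/myapp",
             run: Runner = run_command) -> bool:
    """Build, test, and push an image tagged with the commit SHA; True on success."""
    image = f"{registry}:{commit_sha[:12]}"
    steps = [
        ["docker", "build", "-t", image, "."],
        # Run the test suite inside the freshly built image
        # (assumes pytest is installed in the image).
        ["docker", "run", "--rm", image, "pytest", "-q"],
        ["docker", "push", image],
    ]
    for argv in steps:
        if run(argv) != 0:
            print("step failed:", " ".join(argv))
            return False  # never push an image whose build or tests failed
    return True
```

In a CI job you would pass the commit being built, for example the GITHUB_SHA environment variable in GitHub Actions; a later deployment step can then refer to that exact tag.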

Microservices Architecture

Docker is perfectly suited for building and managing microservices. Microservices are small, independent services that make up a larger application. Each microservice can be packaged in its own Docker container, allowing them to be developed, deployed, and scaled independently.

This architectural style promotes agility and resilience. For example, an e-commerce platform might use separate Docker containers for the user authentication service, the product catalog service, and the payment processing service.

Real-World Impact

Companies of all sizes, from startups to large enterprises, are using Docker to improve their software development and deployment processes. Major cloud providers offer extensive support for Docker, and it has become a fundamental skill for many software engineering and DevOps roles. The widespread adoption of Docker is a testament to its practical benefits and transformative impact on the software industry.

Docker vs. LXC

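Docker and LXC are both containerization technologies, but Docker is an application-focused platform, while LXC is more focused on system containers that behave like lightweight virtual machines. Early versions of Docker used LXC as their execution backend; in 2014 Docker replaced it with its own runtime, libcontainer (which later became the basis of runc), but both projects still rely on the same Linux kernel features: namespaces and cgroups. Docker adds layers of functionality on top for easier application deployment and management. It’s like comparing a ready-to-use toolkit (Docker) to a set of powerful but more basic tools (LXC).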

When to Use Which

- Use Docker: When your primary goal is to package, deploy, and manage applications. Docker’s ecosystem and tooling make it the ideal choice for most application development and deployment scenarios.

- Use LXC: When you need to create full system containers, perhaps to run multiple instances of a Linux distribution on a single host, or for situations where you need very fine-grained control over the container environment. LXC is more suitable for tasks that require a more traditional system administration approach within containers.

Notes when using Docker

When using Docker, prioritize security, understand resource management, and master the core concepts of images, containers, and networking. These key areas are essential for successful and efficient Docker usage, avoiding common pitfalls, and maximizing its benefits. Docker is a powerful tool, but its power comes with responsibilities.

Security is paramount

Always prioritize security when working with Docker. Running containers as non-root users, using minimal base images, scanning images for vulnerabilities, and restricting network access are crucial best practices. Never assume that containers are inherently secure.

Treat them as potentially vulnerable and take proactive steps to protect your host system and data. Remember, a compromised container can potentially be used as a stepping stone to attack the host or other containers.
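Some of these practices can be checked automatically. The sketch below is a deliberately tiny, hypothetical linter for one of them: it reports whether the final build stage of a Dockerfile still runs as root. Real projects typically rely on dedicated tools such as Hadolint, plus an image scanner such as Trivy or Docker Scout. The sketch assumes each base image runs as root, which is common but not universal, since a base image can set its own USER.

```python
def final_stage_runs_as_root(dockerfile: str) -> bool:
    """Return True if the last build stage never switches to a non-root USER.

    Simplified: ignores line continuations, ARG substitution, and users
    set by the base image itself.
    """
    user = "root"  # assumption: the base image runs as root
    for raw in dockerfile.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        keyword, _, args = line.partition(" ")
        keyword = keyword.upper()
        if keyword == "FROM":
            user = "root"  # a new stage starts from its base image's user
        elif keyword == "USER":
            user = args.strip().split(":")[0]  # USER name[:group]
    return user in ("root", "0")
```

For example, a single-stage Dockerfile with no USER line is flagged, while a multi-stage one whose last stage creates an account and ends with USER appuser is not.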

Resource management matters

Understand and manage container resource usage (CPU, memory, I/O). Docker allows you to set limits on the resources a container can consume. This prevents a single container from hogging resources and impacting other containers or the host system. Without resource limits, a runaway process within a container could bring down your entire system. Use docker stats to monitor container resource usage in real-time.
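Limits are set per container (for example, docker run --memory 512m --cpus 1.5), and on hosts using cgroup v2 they appear in files an application can read from inside its container: memory.max holds a byte count or the word max, and cpu.max holds a quota and a period in microseconds. The parser below is a sketch assuming cgroup v2 mounted at /sys/fs/cgroup, as is typical inside a container; the function names are our own. It can help, for instance, to size a worker pool to the CPUs the container is actually allowed to use rather than the host’s total.

```python
import math
import os
from pathlib import Path
from typing import Optional

CGROUP = Path("/sys/fs/cgroup")  # assumed cgroup v2 mount point


def parse_memory_max(text: str) -> Optional[int]:
    """'536870912' -> 536870912 bytes; 'max' -> None (no limit)."""
    value = text.strip()
    return None if value == "max" else int(value)


def parse_cpu_max(text: str) -> Optional[float]:
    """'150000 100000' -> 1.5 CPUs; 'max 100000' -> None (no limit)."""
    quota, period = text.split()
    return None if quota == "max" else int(quota) / int(period)


def usable_cpus() -> int:
    """Whole CPUs this process may use: the cgroup quota if set, else the CPU count."""
    cpus = os.cpu_count() or 1
    try:
        limit = parse_cpu_max((CGROUP / "cpu.max").read_text())
    except (OSError, ValueError):
        limit = None  # not cgroup v2, or the file is unavailable
    if limit is None:
        return cpus
    # Round up so that, e.g., a 1.5-CPU limit still allows two workers.
    return max(1, min(cpus, math.ceil(limit)))
```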

Master the core concepts

Thoroughly understand the core concepts of Docker: images, containers, Dockerfiles, and Docker Hub (or other registries). This foundational knowledge is essential for effectively using Docker.

- Images vs. Containers: Remember that images are read-only templates, and containers are running instances of those images.

- Dockerfile Best Practices: Write efficient and maintainable Dockerfiles. Use multi-stage builds to reduce image size.

- Image Tagging: Use meaningful tags for your images to track versions and manage deployments.

Networking needs careful consideration

Docker networking can be complex, so plan your network configuration carefully. By default, containers attach to the default bridge network: they can make outbound connections and reach each other by IP address, but they are not reachable from outside the host unless you publish ports (for example, docker run -p 8080:80). User-defined networks give you finer control: only containers attached to the same network can talk to each other, and they can reach one another by container name. Understand the different network drivers (bridge, overlay, host) and choose the appropriate one for your needs. Incorrect network configuration can lead to connectivity issues or security vulnerabilities.

Data Persistence: Volumes and Bind Mounts

Understand how to persist data in Docker. Data stored within a container’s file system is lost when the container is removed. To persist data, use volumes or bind mounts.

- Volumes: Docker-managed storage that is independent of the container’s lifecycle. This is generally the preferred method.

- Bind Mounts: Mount a directory or file from the host system into the container. This is useful for development, but can have security implications.

For example, if you’re running a database in a container, you’ll want to store the database files in a volume so that the data persists even when the container is removed and recreated, such as during an upgrade.

Clean up unused resources

Regularly clean up unused Docker resources (images, containers, networks, and volumes). These resources consume disk space and can clutter your system. Use docker system prune to remove stopped containers, unused networks, dangling images, and build cache; add -a to also remove all unused images, and --volumes to include unused volumes. Be careful: pruning is irreversible. You can also use more targeted commands such as docker image prune, docker container prune, and docker volume prune.

Use Docker Compose for Multi-Container Applications

For applications consisting of multiple services, use Docker Compose. Docker Compose simplifies the management of multi-container applications. You define your services, networks, and volumes in a docker-compose.yml file, and Docker Compose handles the orchestration. This is much easier than manually managing multiple containers with individual docker run commands.

Don’t reinvent the wheel

Leverage existing Docker images and best practices. Before building your own image from scratch, check Docker Hub for official images or well-maintained community images. This can save you time and effort, and often results in more secure and efficient images.

This guide has provided a comprehensive introduction to Docker, covering its core concepts, benefits, inner workings, security considerations, and practical usage notes. We’ve journeyed from understanding the basic definition of Docker as a containerization platform to exploring its advanced features and best practices. You should now have a solid foundational understanding of what Docker is, how it works, and why it’s become such a revolutionary technology in the software development and deployment world.

Consider a Windows VPS from VietNamVPS.net for a powerful and reliable hosting solution. Our VPS offerings feature new generation hardware, including blazing-fast SSD NVMe U.2 storage, ensuring optimal performance for your Dockerized applications. We offer large bandwidth, high-speed connections, and robust configurations at surprisingly affordable prices, all backed by a reputation for quality and reliability.