Ever wondered how multiple virtual machines (VMs) or different operating systems can run simultaneously on just one physical computer or server? The secret ingredient is the virtualization layer. Put simply, the virtualization layer is the crucial software or firmware component that sits between the physical hardware and the virtual machines.

Its main job is to abstract the hardware resources (like CPU, memory, storage) and manage how VMs access them, effectively creating the virtual environment. Think of it as the foundational engine of virtualization technology, making much of modern computing possible. Ready to understand exactly what it does and why it matters? Let’s dive in!

What Exactly is a Virtualization Layer?

The virtualization layer is fundamentally a level of software or firmware that decouples the virtual machines from the underlying physical hardware. It acts as a sophisticated intermediary, translating requests and managing resources efficiently between the two distinct worlds: physical and virtual.

This layer essentially creates a uniform, abstract view of the hardware for the virtual machines running above it. This means the VMs don’t need to know the specific details of the physical CPU, memory chips, or storage devices they are ultimately using.

Think of the virtualization layer like a universal remote control for your home entertainment system. Your remote (the virtualization layer) understands how to talk to your specific TV, soundbar, and streaming box (the hardware), presenting you with a simple interface (the virtual environment) to control everything easily.

The Core Concept: Hardware Abstraction Explained

At the heart of the virtualization layer is the principle of Hardware Abstraction. A Hardware Abstraction Layer, or HAL, is a standard concept in computing. It’s a layer of software designed to hide the differences in hardware from the operating system or applications running above it.

The virtualization layer performs this abstraction specifically for virtual machines. It takes the diverse physical components of a server—CPU, RAM, network cards, disk controllers—and presents them as standardized virtual devices to each VM. This ensures compatibility and simplifies management.
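A minimal Python sketch makes the idea concrete. Everything in it is hypothetical and is not modeled on any real hypervisor. The guest only ever talks to a standardized `VirtualDisk` that offers sector reads and writes. Two illustrative backends, `RamBackend` and `FileBackend`, stand in for different physical storage.

```python
import os
import tempfile
from abc import ABC, abstractmethod

SECTOR_SIZE = 512  # bytes per virtual sector (illustrative)


class Backend(ABC):
    """Physical-side storage; a hypothetical stand-in for real drivers."""

    @abstractmethod
    def read(self, offset: int, length: int) -> bytes: ...

    @abstractmethod
    def write(self, offset: int, data: bytes) -> None: ...


class RamBackend(Backend):
    def __init__(self, size: int) -> None:
        self.buf = bytearray(size)

    def read(self, offset: int, length: int) -> bytes:
        return bytes(self.buf[offset:offset + length])

    def write(self, offset: int, data: bytes) -> None:
        self.buf[offset:offset + len(data)] = data


class FileBackend(Backend):
    def __init__(self, path: str, size: int) -> None:
        self.path = path
        with open(path, "wb") as f:
            f.truncate(size)  # create a zero-filled file of the disk's size

    def read(self, offset: int, length: int) -> bytes:
        with open(self.path, "rb") as f:
            f.seek(offset)
            return f.read(length)

    def write(self, offset: int, data: bytes) -> None:
        with open(self.path, "r+b") as f:
            f.seek(offset)
            f.write(data)


class VirtualDisk:
    """The standardized device the guest sees, whatever the backend."""

    def __init__(self, backend: Backend) -> None:
        self.backend = backend

    def read_sector(self, n: int) -> bytes:
        return self.backend.read(n * SECTOR_SIZE, SECTOR_SIZE)

    def write_sector(self, n: int, data: bytes) -> None:
        if len(data) != SECTOR_SIZE:
            raise ValueError("writes must be exactly one sector")
        self.backend.write(n * SECTOR_SIZE, data)


def guest_workload(disk: VirtualDisk) -> bytes:
    # Identical guest code, regardless of what storage is underneath.
    disk.write_sector(3, b"A" * SECTOR_SIZE)
    return disk.read_sector(3)


def demo() -> tuple:
    ram_disk = VirtualDisk(RamBackend(8 * SECTOR_SIZE))
    with tempfile.TemporaryDirectory() as d:
        file_disk = VirtualDisk(FileBackend(os.path.join(d, "disk.img"), 8 * SECTOR_SIZE))
        return guest_workload(ram_disk), guest_workload(file_disk)
```

`guest_workload` depends only on the `VirtualDisk` interface, so it behaves the same on either backend. That same property is what lets a VM run on servers with different physical hardware.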

The significant benefit of this abstraction is achieving hardware independence for the virtual machines. A VM created on one server with specific hardware can often be moved and run on another server with different hardware, provided the virtualization layer is compatible. This offers tremendous flexibility.

For example, imagine needing to run an older application that only works on Windows Server 2008. Modern physical servers might lack compatible drivers. Using a virtualization layer, you can create a VM, install Windows Server 2008 inside it, and run it reliably on brand-new hardware.

Key Functions: What Does the Virtualization Layer Do?

The virtualization layer isn’t just a passive abstraction; it actively performs several critical functions. Its primary responsibilities include creating and managing VMs, pooling and allocating hardware resources fairly, ensuring strong isolation between VMs, and enabling advanced management features for the virtual infrastructure.

Creating and Managing Virtual Machines (VMs)

First, let’s clarify: a Virtual Machine (VM) is a software-based emulation of a physical computer. It runs its own operating system (like Windows or Linux) and applications, just like a real machine, but shares the physical hardware resources managed by the virtualization layer.

The virtualization layer is responsible for the entire lifecycle of these VMs. It handles the creation process, where you define the virtual hardware specifications for a new VM—how many virtual CPUs (vCPUs), how much virtual RAM, and the size of virtual disk storage it will have.

Beyond creation, it manages the state of VMs: starting them up (booting the guest OS), shutting them down gracefully, pausing them to temporarily freeze their state, and resuming them later. This control is fundamental to operating a virtualized environment effectively and efficiently.
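The creation step and lifecycle described above can be pictured as a small state machine. The sketch below is hypothetical: the `VMSpec` fields, state names, and action names are illustrative and are not any product's API. Real hypervisors also track additional states, such as suspended-to-disk.

```python
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    STOPPED = "stopped"
    RUNNING = "running"
    PAUSED = "paused"


# action -> (state the VM must be in, state it ends up in)
TRANSITIONS = {
    "start": (State.STOPPED, State.RUNNING),     # boot the guest OS
    "pause": (State.RUNNING, State.PAUSED),      # freeze vCPUs, keep RAM contents
    "resume": (State.PAUSED, State.RUNNING),
    "shutdown": (State.RUNNING, State.STOPPED),  # graceful guest shutdown
}


@dataclass
class VMSpec:
    """Virtual hardware chosen at creation time (hypothetical fields)."""
    name: str
    vcpus: int
    memory_mib: int
    disk_gib: int

    def __post_init__(self) -> None:
        if min(self.vcpus, self.memory_mib, self.disk_gib) <= 0:
            raise ValueError("virtual hardware sizes must be positive")


class VirtualMachine:
    def __init__(self, spec: VMSpec) -> None:
        self.spec = spec
        self.state = State.STOPPED  # a newly created VM starts powered off

    def apply(self, action: str) -> State:
        required, result = TRANSITIONS[action]
        if self.state is not required:
            raise RuntimeError(f"cannot {action} a VM that is {self.state.value}")
        self.state = result
        return self.state
```

For example, `VirtualMachine(VMSpec("backend-dev", vcpus=2, memory_mib=4096, disk_gib=40))` starts out stopped. It can then be started, paused, resumed, and shut down in that order. Invalid transitions, such as pausing a stopped VM, are rejected.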

Consider a software development team. A developer might use the virtualization layer (via management tools) to quickly create several VMs. One VM could run Linux for backend development, another Windows for testing the user interface, all managed centrally on a single physical machine or server.

Hardware Resource Pooling and Fair Allocation

A core function is Resource Pooling. The virtualization layer aggregates the physical hardware resources of the server—like CPU cores, gigabytes of RAM, storage capacity, and network bandwidth—into shared pools. These pools can then be dynamically allocated to the VMs as needed.

For CPU resources, the layer schedules each VM’s virtual CPUs (vCPUs) onto the physical CPU cores in short time slices. Because vCPUs take turns on the cores (time-sharing), multiple VMs can make progress concurrently even when the total number of vCPUs exceeds the number of physical cores. This practice is often called CPU overcommitment.
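Time-sharing can be illustrated with a deliberately simplified round-robin sketch. Real hypervisor schedulers weigh priorities, shares, and cache locality, and are far more sophisticated. The vCPU names are hypothetical.

```python
from collections import deque


def round_robin_schedule(vcpus: list, physical_cores: int, slices: int) -> list:
    """Return, for each time slice, the vCPUs placed on the physical cores."""
    queue = deque(vcpus)
    schedule = []
    for _ in range(slices):
        running = [queue[i] for i in range(min(physical_cores, len(queue)))]
        queue.rotate(-len(running))  # vCPUs that just ran go to the back
        schedule.append(running)
    return schedule
```

With six vCPUs such as `["vm1.0", "vm1.1", "vm2.0", "vm3.0", "vm3.1", "vm3.2"]` on four physical cores, each vCPU runs in two of every three slices. All of them make progress even though no slice can hold all six.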

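Memory (RAM) management is also crucial. The layer allocates physical RAM to VMs and often uses additional techniques for efficiency. Memory overcommit allocates more virtual RAM in total than the host physically has, relying on VMs not using all of their allocated RAM at the same time. Ballooning reclaims memory a guest is not actively using. A balloon driver inside the guest OS “inflates” by allocating that idle memory, and the hypervisor reassigns the physical pages behind it to other VMs.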
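The arithmetic behind overcommit, and one way a host might decide how much to balloon out of each guest, can be sketched as follows. The proportional-to-idle-memory policy is an assumption for illustration, not any product’s algorithm. All sizes are in MiB.

```python
def overcommit_ratio(allocated_mib: list, host_mib: int) -> float:
    """Total virtual RAM promised to VMs divided by physical RAM."""
    return sum(allocated_mib) / host_mib


def balloon_targets(allocated_mib: list, in_use_mib: list, shortfall_mib: int) -> list:
    """
    How much memory to ask each guest's balloon driver to give back.
    Hypothetical policy: reclaim from each VM in proportion to its idle
    memory (allocated but not in use by the guest).
    """
    idle = [a - u for a, u in zip(allocated_mib, in_use_mib)]
    if shortfall_mib == 0:
        return [0.0] * len(idle)
    total_idle = sum(idle)
    if shortfall_mib > total_idle:
        # Ballooning alone cannot cover it; a real host might fall back to swapping.
        raise ValueError("not enough idle guest memory to reclaim")
    return [shortfall_mib * i / total_idle for i in idle]
```

Three 32 GiB VMs on a 64 GiB host give an overcommit ratio of 1.5. If the guests use 20, 12, and 20 GiB and the host must free 5.5 GiB (5632 MiB), this policy asks the three balloon drivers for 1536, 2560, and 1536 MiB respectively.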

Regarding storage, the virtualization layer manages access to physical storage devices (such as hard disk drives or solid-state drives). It presents virtual disks to the VMs. These appear as local drives within the Guest OS but are usually files (such as VMDK or VHD files) stored on local disks or on a shared storage system.
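A virtual disk file does not have to occupy its full size up front. The sketch below shows a thin-provisioned disk whose backing store grows only as blocks are first written. It uses a hypothetical layout with an in-memory `io.BytesIO` standing in for the file. Real formats such as dynamically expanding VHD or thin-provisioned VMDK keep a comparable block lookup table with a very different on-disk structure.

```python
import io

BLOCK_SIZE = 4096  # illustrative allocation unit


class ThinDisk:
    """Virtual disk whose backing store grows only as blocks are first written.
    Hypothetical layout; real VMDK/VHD formats differ."""

    def __init__(self, virtual_size: int, backing: io.BytesIO) -> None:
        self.virtual_size = virtual_size  # size the guest sees
        self.backing = backing            # stands in for the disk file
        self.block_map = {}               # virtual block -> offset in backing

    def _check(self, block: int) -> None:
        if not 0 <= block * BLOCK_SIZE < self.virtual_size:
            raise ValueError("block outside the virtual disk")

    def write_block(self, block: int, data: bytes) -> None:
        self._check(block)
        if len(data) != BLOCK_SIZE:
            raise ValueError("writes must be exactly one block")
        if block not in self.block_map:
            # First write to this block: append space at the end of the file.
            self.block_map[block] = self.backing.seek(0, io.SEEK_END)
        self.backing.seek(self.block_map[block])
        self.backing.write(data)

    def read_block(self, block: int) -> bytes:
        self._check(block)
        if block not in self.block_map:
            return bytes(BLOCK_SIZE)  # never-written blocks read as zeros
        self.backing.seek(self.block_map[block])
        return self.backing.read(BLOCK_SIZE)
```

Writing one block to a 4 MiB virtual disk grows the backing store by only 4 KiB. Meanwhile, the guest still sees the full 4 MiB drive.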

Network access is managed through virtual Network Interface Cards (vNICs) presented to each VM. The virtualization layer often includes a virtual switch (vSwitch) function to handle network traffic between VMs and connect them to the physical network infrastructure, similar to how a physical switch connects physical devices.
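A physical Ethernet switch learns which port each MAC address lives behind. The minimal sketch below shows that generic learning-bridge behavior applied to VM ports. Real virtual switches may populate their tables differently, for example from the known MAC addresses of attached vNICs. The port names are hypothetical.

```python
class VirtualSwitch:
    """Minimal MAC-learning switch connecting vNIC ports and an uplink."""

    def __init__(self, ports: list) -> None:
        self.ports = ports
        self.mac_table = {}  # MAC address -> port it was last seen on

    def forward(self, in_port: str, src_mac: str, dst_mac: str) -> list:
        """Return the list of ports a frame should be sent out of."""
        self.mac_table[src_mac] = in_port  # learn where the sender lives
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown (or broadcast) destination: flood to every other port.
            return [p for p in self.ports if p != in_port]
        return [] if out_port == in_port else [out_port]
```

With ports `["vm1", "vm2", "uplink"]`, the first frame from `vm1` to an unknown MAC is flooded to `vm2` and the uplink. Once `vm2` replies, later frames between the two VMs stay on the host and never touch the physical network.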

Fair allocation ensures that demanding VMs don’t starve less intensive but still important VMs of necessary resources. Policies can often be set to guarantee minimum resource levels or assign priorities. For instance, a critical production database VM might be guaranteed more CPU resources than a temporary test VM.
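One common way to combine guarantees and priorities is proportional sharing with minimum reservations. The sketch below is a generic water-filling version of that idea. It is not the exact algorithm of any particular hypervisor, and the field names `reservation`, `shares`, and `demand` are assumptions. Each VM first receives its guaranteed minimum. Remaining capacity is then split in proportion to shares, and anything a satisfied VM does not need is redistributed.

```python
def allocate(capacity: float, vms: dict) -> dict:
    """
    Proportional-share allocation with minimum guarantees (a generic sketch).
    vms maps a VM name to {"reservation", "shares", "demand"} in the same unit
    as capacity (e.g. MHz of CPU). A VM never receives more than it demands.
    """
    alloc = {n: min(v["reservation"], v["demand"]) for n, v in vms.items()}
    remaining = capacity - sum(alloc.values())
    if remaining < 0:
        raise ValueError("reservations exceed host capacity")
    active = {n for n, v in vms.items() if alloc[n] < v["demand"]}
    while remaining > 1e-9 and active:
        total_shares = sum(vms[n]["shares"] for n in active)
        if total_shares <= 0:
            break  # nobody left with a claim on spare capacity
        handed_out = 0.0
        for n in list(active):
            offer = remaining * vms[n]["shares"] / total_shares
            take = min(offer, vms[n]["demand"] - alloc[n])
            alloc[n] += take
            handed_out += take
            if alloc[n] >= vms[n]["demand"] - 1e-9:
                active.discard(n)  # satisfied; its unused share is redistributed
        remaining -= handed_out
    return alloc
```

On a 10,000 MHz host, suppose a production database has a 4,000 MHz reservation and twice the shares of a test VM, and both want 9,000 MHz. The database then ends up with 8,000 MHz and the test VM with 2,000 MHz. If the test VM only wants 1,000 MHz, the database receives the leftover and reaches 9,000 MHz.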

Ensuring Isolation and Security Between VMs

Isolation is a paramount function provided by the virtualization layer. It ensures that each virtual machine operates independently, unaware of other VMs running on the same physical hardware. Each VM runs inside its own isolated boundary, enforced by the layer.

This isolation is designed to prevent a crash or security compromise in one VM from affecting others on the same host. The virtualization layer strictly controls memory access, ensuring one VM cannot read or write the memory allocated to another VM. Storage and network isolation are enforced in a similar way.
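Memory isolation rests on giving each VM its own translation from “guest-physical” addresses to real host memory. On modern hardware, this translation is done by second-level address translation (Intel EPT, AMD NPT) or by shadow page tables. The dictionary-based sketch below only illustrates the principle, and its class and method names are hypothetical. Because every host page is handed to exactly one VM, no guest address can resolve to another VM’s memory.

```python
PAGE_SIZE = 4096


class IsolationFault(Exception):
    """Raised when a guest touches memory outside its own allocation."""


class HostMemory:
    def __init__(self, total_pages: int) -> None:
        self.free_pages = list(range(total_pages))
        self.page_maps = {}  # vm name -> {guest page number: host page number}

    def add_vm(self, vm: str, pages: int) -> None:
        if pages > len(self.free_pages):
            raise MemoryError("not enough free host pages")
        # Each host page is handed to exactly one VM, so maps never overlap.
        self.page_maps[vm] = {gp: self.free_pages.pop(0) for gp in range(pages)}

    def translate(self, vm: str, guest_addr: int) -> int:
        """Map a guest-physical address to a host-physical address, or fault."""
        page, offset = divmod(guest_addr, PAGE_SIZE)
        host_page = self.page_maps[vm].get(page)
        if host_page is None:
            raise IsolationFault(f"{vm} accessed {guest_addr:#x}, outside its memory")
        return host_page * PAGE_SIZE + offset
```

Two VMs can both use guest address `0x10`, yet each lands on a different host page. An access past the end of a VM’s allocation faults instead of reaching a neighbor.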

Think of the virtualization layer as constructing secure walls between apartments in a building. Each apartment (VM) has its own resources and boundaries, and tenants cannot normally access their neighbors’ space. The building superintendent (virtualization layer) manages the shared resources like plumbing and electricity.

A practical example is a web hosting provider. They use a virtualization layer to host websites for hundreds of different customers on shared servers. Strong VM isolation is critical to prevent one customer’s website vulnerability from impacting any other customer’s site hosted on that same hardware.

While providing strong isolation, it’s important to note that the virtualization layer itself (the hypervisor) can be a potential target. Security researchers constantly analyze hypervisors for vulnerabilities, and vendors release patches. Maintaining hypervisor security is vital for the integrity of the entire virtual environment.

Enabling Advanced Features like VM Mobility

The abstraction provided by the virtualization layer enables powerful features like VM Mobility. This refers to the ability to move a running virtual machine from one physical host server to another without interrupting its operation or disconnecting users – often called live migration.

Technologies like VMware’s vMotion or Hyper-V’s Live Migration rely heavily on the virtualization layer. Because the VM interacts with abstracted virtual hardware, not specific physical devices, the layer can transfer the VM’s active memory state and execution control to a compatible host seamlessly.
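A widely described approach to transferring memory while the VM keeps running is iterative “pre-copy”. All memory is copied once. Pages the guest dirtied in the meantime are then re-copied, round after round, until the remaining set is small. Finally, the VM is briefly paused for the last copy. The simulation below assumes a caller-supplied `dirtied_during_round` function in place of real dirty-page tracking, and its `stop_threshold` and `max_rounds` values are arbitrary. Real implementations add further refinements.

```python
def precopy_migrate(num_pages, dirtied_during_round, stop_threshold=64, max_rounds=30):
    """
    Simulate pre-copy live migration of a VM's memory.
    dirtied_during_round(r) returns the pages the still-running guest wrote
    while round r was being copied (a stand-in for real dirty-page tracking).
    Returns (pages copied while the VM ran, pages copied during the final pause).
    """
    to_send = set(range(num_pages))  # first round: all of guest memory
    copied_live = 0
    for r in range(max_rounds):
        if len(to_send) <= stop_threshold:
            break  # remaining set is small enough for a short pause
        copied_live += len(to_send)
        to_send = set(dirtied_during_round(r))
    # Stop-and-copy: pause the VM, send the last dirty pages plus CPU/device
    # state, then resume it on the destination host.
    return copied_live, len(to_send)
```

If a 1,000-page guest dirties fewer pages each round (200, then 100, 66, 50), migration copies 1,366 pages live and only 50 during the pause. A guest that keeps dirtying memory as fast as it is copied never converges, which is why a round limit is needed.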

Imagine a physical server that needs urgent maintenance or a hardware upgrade. With live migration, administrators can move all running VMs off that server to other hosts in the cluster before taking it offline. The applications inside those VMs keep running with no planned downtime. Each VM is paused only briefly during the final switchover, typically too briefly for users to notice.

How Does the Virtualization Layer Work?

Understanding the basic mechanics of the virtualization layer helps appreciate its role. It operates at a privileged level within the system, orchestrating access to hardware and managing the execution context for each virtual machine running above it.

Its Place in the Virtualization Stack

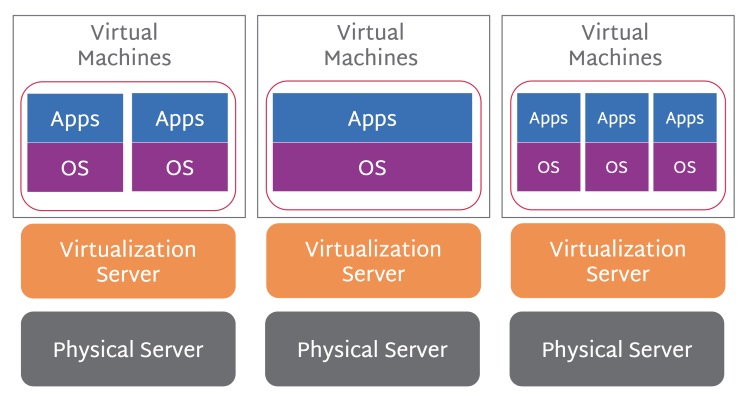

Visualizing the virtualization stack helps clarify the layer’s position. At the very bottom is the physical hardware (CPU, RAM, storage, network). Sitting directly on top of the hardware (in Type 1) or on top of a host operating system (in Type 2) is the virtualization layer, implemented by the hypervisor.

Above the virtualization layer reside the virtual machines. Each VM contains its own complete Guest Operating System (Guest OS) – like Windows or Linux – and the applications running within that OS. The virtualization layer is the critical foundation enabling these VMs to run.

The diagram shows this architecture from the bottom up: the physical hardware (server), then the virtualization layer (hypervisor), then multiple virtual machines, each containing its own Guest OS and applications.

Interacting with Physical Hardware Resources

How the layer interacts with hardware depends on its type. A Type 1 hypervisor runs directly on the physical hardware (“bare-metal”) and has direct control over its resources. A Type 2 hypervisor runs as an application on top of a conventional Host Operating System (Host OS), relying on the Host OS for hardware access.

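Regardless of type, the core of the virtualization layer runs in the CPU’s most privileged execution mode. For a Type 2 hypervisor, this core is typically a driver or kernel module loaded into the Host OS. The mode is ring 0 or, on CPUs with hardware virtualization extensions, the dedicated host (root) mode. This allows the layer to manage hardware and execute instructions that normal applications cannot, and that even Guest OS kernels, which run in less privileged modes, cannot.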

Modern CPUs include specific hardware virtualization extensions, such as Intel VT-x (Virtualization Technology) and AMD-V (AMD Virtualization). The virtualization layer leverages these features extensively to efficiently handle privileged instructions from Guest OSes and manage memory access, reducing software overhead.
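On a Linux x86 host, one quick way to see whether the CPU advertises these extensions is the `flags` line in `/proc/cpuinfo`. There, `vmx` indicates Intel VT-x and `svm` indicates AMD-V. This small helper assumes that file format. Depending on the kernel version and firmware settings, the flag alone may not prove that the feature is enabled and usable.

```python
from typing import Optional


def hw_virt_extension(cpuinfo_text: str) -> Optional[str]:
    """Report Intel VT-x ('vmx') or AMD-V ('svm') from Linux /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            flags = set(line.split(":", 1)[1].split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
            return None  # first 'flags' line has neither extension
    return None  # no x86 'flags' line (e.g. a non-x86 CPU)


def read_cpuinfo(path: str = "/proc/cpuinfo") -> str:
    with open(path) as f:
        return f.read()
```

On a Linux machine, `hw_virt_extension(read_cpuinfo())` returns `"Intel VT-x"`, `"AMD-V"`, or `None`.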

Consider a VM writing data to disk. The Guest OS issues a disk I/O command to its virtual disk controller. Because that controller is not real hardware, the access is intercepted by the virtualization layer. The layer then translates the request into an operation on the physical storage and carries it out through the server’s actual hardware drivers.

Handling Requests from Guest Operating Systems

As mentioned, the Guest Operating System (Guest OS) is the OS running inside a virtual machine. From the Guest OS’s perspective, it believes it’s running on real hardware – the virtual hardware presented to it by the virtualization layer.

When the Guest OS needs to perform a privileged operation (like accessing hardware directly or modifying system tables), the virtualization layer intercepts it. If the Guest OS were allowed to execute these directly on the physical hardware, it could destabilize the entire system or interfere with other VMs.

The layer then emulates the expected behavior of the hardware for the Guest OS. It translates the virtual request into an equivalent action on the physical hardware, performs the action, and then returns the result to the Guest OS as if the virtual hardware had performed it directly.
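This intercept, emulate, and return cycle is often structured as a loop. The guest runs until something forces an exit, the hypervisor handles the exit according to its reason, and then the guest resumes. KVM, for example, reports exits such as `KVM_EXIT_IO` to its user-space VMM. The sketch below uses made-up exit reasons and device state purely to illustrate the dispatch step. Only the legacy COM1 port number, `0x3F8`, is a real PC convention.

```python
from dataclasses import dataclass, field


@dataclass
class VMExit:
    reason: str     # why the guest stopped running (hypothetical names)
    port: int = 0   # I/O port the guest accessed, if any
    value: int = 0  # value the guest tried to write


@dataclass
class EmulatedDevices:
    """Virtual hardware state the guest believes is real."""
    serial_output: list = field(default_factory=list)
    timer_ticks: int = 0


COM1_DATA_PORT = 0x3F8  # legacy PC serial port


def handle_exit(ev: VMExit, dev: EmulatedDevices) -> int:
    """Emulate the trapped operation and return the result the guest expects."""
    if ev.reason == "io_write" and ev.port == COM1_DATA_PORT:
        dev.serial_output.append(chr(ev.value))  # host-side stand-in for a UART
        return 0
    if ev.reason == "read_timer":
        dev.timer_ticks += 1
        return dev.timer_ticks
    raise NotImplementedError(f"no emulation for exit reason {ev.reason!r}")
```

After `handle_exit` returns, a hypervisor would resume the guest, which continues as though real hardware had performed the operation.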

This interception and translation process is constant and fundamental. It’s like having a skilled translator mediating a conversation between two people speaking different languages. The translator (virtualization layer) ensures accurate communication between the Guest OS and the physical hardware, despite their different “languages.”

Virtualization Layer vs. Hypervisor: Clearing the Confusion

A common point of confusion is the relationship between the “virtualization layer” and the “hypervisor.” Are they the same thing? The direct answer is: not exactly, but they are very closely related concepts often used interchangeably.

The Hypervisor, also known as a Virtual Machine Monitor (VMM), is the specific piece of software, firmware, or hardware that creates, runs, and manages virtual machines. It is the actual implementation of the virtualization layer’s functionalities.

Think of it this way: the virtualization layer is the conceptual functionality – the abstraction, the resource management, the isolation. The hypervisor is the concrete product or code that delivers this functionality. You install and run a hypervisor to get a virtualization layer.

Because the hypervisor is the component providing the virtualization layer, people often use the terms synonymously in discussion. Saying “the hypervisor manages resources” and “the virtualization layer manages resources” effectively means the same thing in practical terms.

For example, VMware ESXi is a hypervisor product. When installed on a server, it provides the virtualization layer that allows you to create and manage VMs on that server. The hypervisor is the tool; the virtualization layer is the capability the tool provides.

Understanding the Types (Based on Hypervisor Implementation)

Hypervisors, and thus the virtualization layers they provide, are generally categorized into two main types. This classification is based on whether the hypervisor runs directly on the system hardware or on top of a host operating system. Understanding these types helps clarify deployment scenarios.

Type 1 Virtualization Layers (Bare-Metal Hypervisors)

A Type 1 hypervisor runs directly on the host computer’s physical hardware, without needing a traditional operating system underneath it. It is often called a “bare-metal” hypervisor because it interacts directly with the hardware components like CPU, memory, and storage.

This direct access to hardware generally results in better performance and efficiency compared to Type 2 hypervisors. Type 1 hypervisors are designed for robustness, scalability, and security, making them the standard choice for enterprise data centers and cloud infrastructure providers.

Common use cases include large-scale server consolidation projects, building private clouds, and providing the foundation for public cloud Infrastructure as a Service (IaaS) offerings. They are built for managing many production VMs reliably and efficiently.

Prominent examples of Type 1 hypervisors include VMware ESXi (part of the vSphere suite), Microsoft Hyper-V (when installed as a server role or on Hyper-V Server), KVM (Kernel-based Virtual Machine), which is integrated into the Linux kernel, and the Xen hypervisor.

Type 2 Virtualization Layers (Hosted Hypervisors)

A Type 2 hypervisor, in contrast, runs as a software application on top of an existing conventional operating system, referred to as the Host Operating System (Host OS). The hypervisor relies on the Host OS to handle interactions with the underlying physical hardware.

This architecture makes Type 2 hypervisors generally easier to install and manage, especially on personal computers. They are excellent tools for developers needing to run multiple operating systems, testers evaluating software in different environments, or users wanting to try out a new OS without partitioning their drive.

However, because they have an extra layer (the Host OS) between them and the hardware, Type 2 hypervisors typically introduce more performance overhead compared to Type 1. They are generally not preferred for large-scale, performance-critical server workloads in production data centers.

Common examples of Type 2 hypervisors include VMware Workstation (for Windows/Linux hosts), VMware Fusion (for macOS hosts), Oracle VM VirtualBox (cross-platform), and Parallels Desktop for Mac. These are primarily used on desktop or laptop computers for individual use cases.

Why is the Virtualization Layer So Important? (Key Benefits)

The development and widespread adoption of the virtualization layer have revolutionized IT infrastructure. Its importance stems from a range of significant benefits that impact efficiency, cost, flexibility, and the very foundation of modern services like cloud computing.

Enabling Server Consolidation & Cost Savings

One of the earliest and most compelling benefits is Server Consolidation. Before virtualization, organizations often had numerous physical servers, each dedicated to a single application and often vastly underutilized. The virtualization layer allows running multiple VMs, each with its own OS and application, on a single physical server.

This consolidation drastically reduces the number of physical servers required. Fewer servers mean significant cost savings through reduced hardware acquisition costs, lower power consumption (electricity for running servers and cooling data centers), and less physical space needed in data centers.

For instance, an organization might consolidate ten aging physical servers, each running at only 15% utilization, onto one or two modern physical servers running multiple VMs via a virtualization layer. This can lead to substantial reductions in operational expenditures (OpEx) related to power, cooling, and maintenance.
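The arithmetic behind this example is simple. Ten servers at 15% utilization add up to 1.5 “old servers” worth of CPU work. The helper below turns that into a host count. The capacity of a new host relative to an old one (`new_host_speedup`) and the utilization ceiling (`target_util`) are assumed inputs, not figures from this article.

```python
import math


def hosts_needed(old_servers: int, old_util: float,
                 new_host_speedup: float, target_util: float) -> int:
    """
    Rough CPU-only consolidation estimate.
    old_util: average CPU utilization of each old server (0..1).
    new_host_speedup: capacity of one new host in 'old server' units (assumed).
    target_util: utilization ceiling kept on new hosts for headroom.
    """
    demand = old_servers * old_util  # total work, in old-server units
    return math.ceil(demand / (new_host_speedup * target_util))
```

Assume each new host is twice as powerful as an old server and is run at no more than 70%. Then two hosts are needed. At three times the power, one host suffices. In practice, memory, storage I/O, and spare capacity for failover often call for more hosts than a CPU-only estimate suggests.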

Maximizing Hardware Resource Utilization

Closely related to consolidation is improved resource utilization. Industry studies have commonly reported that physical servers in non-virtualized environments averaged only about 5 to 20% CPU utilization. Expensive hardware therefore sat idle most of the time and delivered a poor return on investment.

The virtualization layer allows pooling the resources of a physical server and sharing them among multiple VMs. This enables much higher overall utilization rates, often pushing server utilization into the 60-80% range or even higher, ensuring that the investment in powerful hardware is leveraged much more effectively.

By running multiple workloads concurrently on the same hardware, organizations extract more value from their server investments. This improved return on investment (ROI) is a major driver of virtualization adoption across businesses of all sizes.

Providing Flexibility, Scalability, and Agility

The virtualization layer introduces a level of IT Agility previously unattainable with purely physical infrastructure. Provisioning a new virtual machine can often be done in minutes through software commands, compared to the days or weeks potentially required to procure, install, and configure a new physical server.

Scalability is also greatly enhanced. If an application running in a VM needs more resources (like CPU power or RAM), administrators can allocate additional virtual resources to that VM through the virtualization layer. Some hypervisors can even add vCPUs or memory to a running VM (hot-add) without a reboot, provided the Guest OS supports it. This allows applications to scale with demand.

Consider an e-commerce website preparing for a major holiday sale. Using virtualization, the IT team can quickly deploy additional web server VMs or increase the resources allocated to existing ones to handle the anticipated traffic surge, then scale back down after the peak period, providing elastic capacity.
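A simple scaling rule captures the idea of elastic capacity. It computes how many web-server VMs the current traffic needs, adds headroom, and clamps the result to a minimum and maximum fleet size. Every parameter name and default below is hypothetical. Real cloud and orchestration platforms use their own policy engines.

```python
import math


def desired_web_vms(requests_per_sec: float, capacity_per_vm: float,
                    min_vms: int = 2, max_vms: int = 20, headroom: float = 0.25) -> int:
    """Number of web-server VMs for the current load, plus spare headroom."""
    needed = math.ceil(requests_per_sec * (1 + headroom) / capacity_per_vm)
    return max(min_vms, min(max_vms, needed))
```

Assume each VM handles 500 requests per second. Normal traffic of 400 requests per second keeps the fleet at its minimum of 2 VMs, while a sale-day peak of 6,000 requests per second scales it to 15. Once traffic falls, the same rule shrinks the fleet again.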

Forming the Bedrock of Cloud Computing (IaaS)

Modern cloud computing, particularly Infrastructure as a Service (IaaS), is fundamentally built upon virtualization layer technologies. Major cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) use sophisticated hypervisors at massive scale.

When you launch an EC2 instance on AWS or a Virtual Machine on Azure, you are essentially requesting and running a VM managed by the cloud provider’s underlying virtualization layer on their vast pool of physical hardware. This layer enables the on-demand, self-service provisioning of compute resources that defines the cloud experience.

Without the abstraction, resource pooling, and management capabilities provided by the virtualization layer, the flexible, scalable, and cost-effective models of cloud computing as we know them today would simply not be possible. It’s a truly foundational technology for the cloud era.

Facilitating Easier Development, Testing, and Disaster Recovery

The virtualization layer offers significant advantages for software development and testing workflows. Developers can easily create isolated VM environments to test applications on different operating systems or configurations without needing multiple physical machines. This accelerates development cycles.

Features like Snapshots, often provided by hypervisor management tools, allow capturing the state of a VM at a specific point in time. Testers can install software, run tests, and if something goes wrong or they want to revert, they can instantly restore the VM back to the snapshot state, saving significant time.
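Snapshots are commonly implemented with layered delta files: taking a snapshot freezes the current disk state, and new writes go to a fresh layer on top. The sketch below illustrates that layered, redirect-on-write idea. Products differ in the details, and the class and method names are hypothetical. In this simplified version, reverting to an older snapshot discards any snapshots taken after it.

```python
BLOCK_SIZE = 512  # illustrative


class LayeredDisk:
    """Snapshot sketch: frozen layers plus one writable top layer."""

    def __init__(self) -> None:
        self.layers = [{}]  # oldest first; only the last layer receives writes

    def write(self, block: int, data: bytes) -> None:
        self.layers[-1][block] = data  # frozen layers are never modified

    def read(self, block: int) -> bytes:
        for layer in reversed(self.layers):  # the newest copy of a block wins
            if block in layer:
                return layer[block]
        return bytes(BLOCK_SIZE)  # never-written blocks read as zeros

    def snapshot(self) -> int:
        """Freeze the current state and return its snapshot id."""
        snapshot_id = len(self.layers)
        self.layers.append({})
        return snapshot_id

    def revert(self, snapshot_id: int) -> None:
        """Discard every change made after the snapshot was taken."""
        del self.layers[snapshot_id:]
        self.layers.append({})
```

A tester can snapshot a clean install, break the configuration, and call `revert` to get the clean install back. Only the discarded top layer is lost.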

For Disaster Recovery (DR), virtualization simplifies planning and execution. Entire VMs (including the OS, applications, and data) can be replicated to a secondary site. In case of a disaster at the primary site, these replicated VMs can be quickly powered on via the virtualization layer at the DR site, minimizing downtime and data loss.

Conclusion: The Unsung Hero Enabling Modern IT

The virtualization layer, most commonly implemented by hypervisors, truly acts as the core engine driving much of modern IT infrastructure. It’s the often unseen but essential component that makes running multiple operating systems and applications on shared hardware possible, efficient, and manageable.

Its key functions (hardware abstraction, resource management, virtual machine creation, and isolation) provide the foundation for server consolidation, much higher resource utilization, and a level of flexibility and scalability that purely physical environments could not offer.

From enterprise data centers optimizing their server footprint to developers spinning up test environments, and from small businesses leveraging cost savings to the massive scale of global cloud providers, the virtualization layer is fundamental. Understanding its role is key to understanding how modern computing infrastructure truly works.