You’ve likely heard the term “snapshot” used frequently in discussions about servers, cloud computing, or virtual machines. So, what exactly is it? A snapshot is essentially a point-in-time capture of a system’s state, preserving it exactly as it was at a specific moment. Think of it like freezing time for your server or data.

Understanding snapshots is crucial in today’s dynamic IT environments. They offer remarkable agility for managing systems, testing changes, and recovering quickly from certain types of issues. They are a fundamental tool used across virtualization platforms like VMware and Hyper-V, cloud services like AWS and Azure, and various storage systems.

This guide provides a clear, comprehensive explanation of snapshots. We’ll explore what they are, how they function under the hood, the different types you’ll encounter, and their primary benefits. We’ll also cover critical distinctions, best practices, and potential risks to ensure you use them effectively and safely.

By the end of this article, you’ll have a solid grasp of snapshot technology. You will understand its role in system administration, development workflows, and data management strategies. Let’s dive into the details and demystify the world of IT snapshots for you.

Defining the Snapshot: What Exactly Is It?

The Core Concept: A Digital Point-in-Time Picture

At its heart, a snapshot captures the exact state of a target system at a specific moment. This “state” typically includes the entire contents of its disk(s) and potentially its system memory (RAM) and configuration settings, particularly for virtual machines. It’s analogous to taking a photograph of your system.

Imagine you’re about to make a significant change, like updating critical software. Taking a snapshot beforehand creates a safety net. If the update causes problems, you can instantly revert the system back to the exact state captured in the snapshot, effectively undoing the change.

This point-in-time nature is the defining characteristic. It doesn’t continuously track changes after it’s taken; rather, it preserves the moment when it was taken. This allows administrators and developers to experiment or update systems with significantly reduced risk of irreversible errors.

The snapshot file itself usually contains information needed to restore this state. It works in conjunction with the original data, which we’ll explore next. The key takeaway is its function as a precise marker of system status at a chosen instant.

How Do Snapshots Technically Work? (Simplified)

Contrary to what some might assume, creating a snapshot usually doesn’t involve copying the entire original disk immediately. Snapshots typically work by preserving the original disk state and recording subsequent changes separately. This makes snapshot creation very fast, often taking only seconds.

Two common underlying mechanisms enable this: Copy-on-Write (CoW) and Redirect-on-Write (RoW). With CoW, when a change is about to be made to an original data block post-snapshot, that original block is first copied to a snapshot storage area before the change is written.

With Redirect-on-Write (RoW), the process differs slightly. When a change occurs after the snapshot, the new data isn’t written over the original block. Instead, it’s redirected and written to a separate snapshot file or location, often called a “delta disk” or “differencing disk.”

In many popular virtualization platforms like VMware vSphere or Microsoft Hyper-V, the RoW approach (using delta/differencing disks) is common. The original virtual disk (the “base disk”) is essentially frozen or made read-only. All new writes are then channeled into this growing delta file.

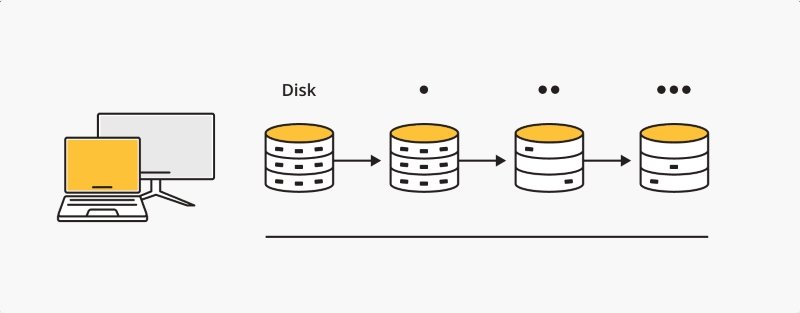

If you take multiple snapshots, you create a “snapshot chain.” Each new delta disk records changes since the previous snapshot. Reading data might require consulting the base disk and one or more delta disks in the chain, which, as we’ll see later, has performance implications.

Exploring the Common Types of Snapshots

Snapshots aren’t a one-size-fits-all concept; they are implemented differently depending on the technology layer. Understanding these types helps you recognize where and how they are used across your IT infrastructure, from individual virtual machines up to large-scale storage arrays.

Virtual Machine (VM) Snapshots

This is perhaps the most commonly encountered type. VM snapshots capture the disk, memory (optional), and configuration state of a virtual machine at a specific moment. Platforms like VMware vSphere, Microsoft Hyper-V, Oracle VirtualBox, and KVM heavily utilize this feature.

Their primary use case revolves around operational tasks for individual VMs. System administrators frequently take VM snapshots before applying operating system patches, installing new software, or making significant configuration changes. If anything goes wrong, they can quickly revert the VM to its pre-snapshot state.

Developers also rely heavily on VM snapshots. They can create checkpoints during complex development or testing phases, allowing them to easily return to a known good state if an experiment fails or code changes introduce bugs. This accelerates development cycles significantly.

Memory snapshots capture the live RAM state, allowing a VM to be restored and resume exactly where it left off. Disk-only snapshots require the VM to be booted up after restoration. Each type has its place depending on the specific need for state preservation.

Storage Snapshots

These snapshots operate at a different level – directly on the storage system itself, such as a Storage Area Network (SAN) or Network Attached Storage (NAS) device. Storage snapshots capture the state of an entire storage volume or Logical Unit Number (LUN) at a point in time.

Major storage vendors like NetApp, Dell EMC, HPE, Pure Storage, and others offer sophisticated snapshot capabilities integrated into their arrays. These are often highly efficient, leveraging the storage system’s internal architecture (like specific CoW or RoW implementations optimized for the hardware).

Creating a storage snapshot usually has minimal performance impact on the connected hosts or VMs during the creation process itself. They are excellent for creating near-instantaneous copies of large volumes, often used as a source for backup software to read from without stunning the live application.

They are also invaluable for instantly creating multiple test/dev copies of production datasets or for rapidly recovering an entire volume if data corruption occurs. Their scope is broader than VM snapshots, focusing on the data container (the volume) itself.

Database Snapshots

Some database management systems offer their own internal snapshot features. For example, Microsoft SQL Server provides database snapshots, which create a static, read-only view of a database as it existed at a specific moment in time.

These snapshots are particularly useful for reporting purposes. You can run complex queries against the read-only snapshot without impacting the performance or locking resources on the live production database. This isolates reporting workloads effectively.

They can also be used for testing schema changes or examining data from a specific past moment without needing to perform a full database restore. Under the hood, they often use page-level Copy-on-Write mechanisms to maintain the point-in-time view efficiently.

It’s important to note these are specific to the database system offering them. They manage database consistency internally but are distinct from VM or storage-level snapshots, although they might sometimes be used in conjunction with them for comprehensive protection strategies.

File System Snapshots

Certain advanced file systems come with built-in snapshot capabilities. Prominent examples include ZFS (commonly used in Solaris, BSD, and Linux via OpenZFS) and Btrfs (available in Linux). File system snapshots capture the state of the file system’s structure and data efficiently.

These file systems often use highly optimized Copy-on-Write techniques. This allows users to create frequent, near-instantaneous snapshots with minimal performance overhead and relatively low storage consumption for incremental changes. It provides granular point-in-time recovery options directly at the file system level.

Users can easily browse files as they existed within a specific snapshot or roll back the entire file system if needed. This is particularly useful on file servers or workstations where users might accidentally delete or modify important files, offering a quick self-service recovery option.

Key Characteristics & Technical Considerations

Understanding how snapshots behave is crucial for using them effectively. Their unique characteristics influence performance, storage usage, and data integrity. Considering these factors helps you avoid common pitfalls and make informed decisions about when and how to leverage snapshot technology.

The Point-in-Time Nature & Crucial Dependency

We’ve established that snapshots capture a specific moment. Crucially, most snapshots depend on the original data (the base disk) and potentially other snapshots in a sequence or “snapshot chain.” They are not independent copies.

Think of delta disks: each one only stores the changes since the previous state. To reconstruct the data as it was at the time of Snapshot 3, the system needs Snapshot 3’s delta, Snapshot 2’s delta, Snapshot 1’s delta, and the original base disk.

This dependency is fundamental. If the base disk gets corrupted or deleted, or if an intermediate delta disk in the chain is lost or damaged, all subsequent snapshots in that chain become unusable. This is a primary reason why snapshots are not suitable for long-term backup.

Understanding this chain and dependency is vital for managing snapshot lifecycles. You must ensure the integrity of the entire chain for any snapshot within it to be valid and restorable. This differs significantly from backups, which are designed to be self-contained.

Performance Impact: What to Expect

While creating snapshots is fast, running a system on snapshots can introduce performance overhead. This occurs because read operations might need to consult multiple files (the base disk plus one or more delta disks) to assemble the current data block.

Write operations also incur overhead. Redirect-on-Write involves metadata updates and writing to the delta disk instead of the base. Copy-on-Write involves reading the original block, writing it to the snapshot area, and then writing the change, adding steps to the I/O path.

The longer the snapshot chain and the more changes made, the greater the potential performance degradation. Input/Output (I/O)-intensive applications, like busy databases or file servers, are typically more sensitive to this overhead compared to less active systems.

Furthermore, the process of deleting a snapshot isn’t just removing a file. It involves “snapshot consolidation,” where data from the delta disk being deleted is merged into its parent (either the base disk or the previous snapshot). This consolidation can be very I/O-intensive and time-consuming.

Consolidation might temporarily “stun” a virtual machine (briefly pause its I/O) in some environments, like older versions of VMware. It’s often recommended to schedule snapshot deletions during periods of low activity to minimize the performance impact on production workloads.

Storage Space Consumption: How Snapshots Grow

Snapshots themselves are initially small, mainly containing metadata. However, the associated delta disks store all the changes made after the snapshot was created. Delta disks grow in size as more data is written or modified on the live system.

It’s a common misconception that snapshots are always small. A long-running snapshot on a system with high data change rates (like a database server or a busy file server) can result in a delta disk growing surprisingly large, sometimes even larger than the original base disk.

This can lead to unexpected storage capacity issues. If the datastore or volume holding the delta disks runs out of space, it can cause the associated VM or application to crash or become unusable. Active monitoring of storage consumption related to snapshots is therefore essential.

Factors like thin vs. thick provisioning of the original disk can also influence perceived space usage, but the core issue remains: changes accumulate in the delta files, consuming physical storage space. Proper planning and cleanup are necessary to prevent storage exhaustion.

Understanding Snapshot Consistency: Data Integrity Levels

When a snapshot captures data “in flight,” the integrity of that data upon restoration is critical. There are different levels of snapshot consistency, impacting how reliably applications and data can be recovered. Understanding these is vital for ensuring data integrity.

The most basic level is “Crash-Consistent.” This captures data on the disk exactly as it was at the moment of the snapshot, similar to abruptly pulling the power cord. The operating system and file system might be intact, but applications might require recovery procedures upon restoration.

A step up is “File-System-Consistent.” This attempts to flush the operating system’s file buffers to disk before taking the snapshot. It ensures the file system structure itself is consistent, reducing the risk of file system corruption upon restore, but doesn’t guarantee application data state.

The highest level is “Application-Consistent.” This requires coordination with the applications running on the system. Technologies like Microsoft’s Volume Shadow Copy Service (VSS) or specific vendor agents temporarily “quiesce” applications, ensuring transactions are completed and buffers are flushed to disk before the snapshot.

Application-consistent snapshots are crucial for transactional applications like databases (SQL Server, Oracle) or messaging systems (Exchange). Restoring from an application-consistent snapshot ensures the application starts cleanly without data corruption or requiring lengthy recovery processes. Always aim for this level when snapshotting critical application servers.

Why Use Snapshots? Top Benefits & Practical Use Cases

Snapshots provide significant operational advantages when used appropriately. Their ability to capture and revert to specific points in time enables safer system management, faster development cycles, and rapid recovery from certain types of errors. Let’s explore the most common benefits and use cases.

- Benefit/Use Case 1: Safe System Updates & Patch Management: This is a prime use case. Take a snapshot right before applying OS patches or major application updates. If the update causes instability or unexpected issues, you can quickly revert to the pre-update state in minutes, minimizing downtime and troubleshooting effort.

- Benefit/Use Case 2: Risk-Free Software Testing & Configuration Trials: Need to test a new software package or a complex configuration change? Create a snapshot, perform the test, and evaluate the results. If it works, great! If not, simply revert to the snapshot to instantly undo all changes without complex uninstall procedures.

- Benefit/Use Case 3: Development & Testing Checkpoints: Developers can create snapshots at key stages of their workflow. This allows them to experiment with new code branches or features. If they head down a wrong path, they can easily discard changes by reverting to a previous checkpoint, saving significant time and effort.

- Benefit/Use Case 4: Creating Temporary Data Freezes for Consistent Backups: Some backup strategies leverage snapshots. Backup software might trigger an application-consistent snapshot, creating a static, reliable point-in-time view of the data. The backup software then reads from this static snapshot, avoiding issues with backing up open files or changing data.

- Benefit/Use Case 5: Rapid Recovery from Accidental Changes or Errors: A user accidentally deleted critical configuration files? A faulty script corrupted application data? If a recent snapshot exists, reverting provides near-instant recovery to the state just before the error occurred, much faster than a traditional full backup restore might be.

Snapshot vs. Backup: Understanding the CRITICAL Difference

This is one of the most vital concepts to grasp, yet often misunderstood. While both snapshots and backups relate to data protection, they serve fundamentally different purposes and have different characteristics. Confusing them can lead to inadequate data protection strategies and potential data loss. Snapshots are absolutely NOT backups.

Core Purpose & Ideal Lifespan

Snapshots are designed for short-term operational recovery. Their primary goal is to provide a quick rollback mechanism for recent changes, testing, or development. Their ideal lifespan is measured in hours or days, just long enough to validate a change or complete a short-term task.

Backups, conversely, are designed for long-term data retention and disaster recovery. They create independent copies of data intended to be kept for weeks, months, or years. Their purpose is to recover data after major failures, including primary storage loss, site disasters, or long-past corruption events.

Data Structure & Dependency

As we’ve discussed, snapshots are typically dependent on the original base disk and potentially other snapshots in a chain. They often store only the changes since the previous state. This makes them storage-efficient initially but creates fragility – the entire chain must be intact.

Backups aim to be independent and self-contained. A full backup contains everything needed to restore, independent of the original system. Even incremental backups, while referencing previous backups, are designed within a system that ensures restorability even if the live system is gone.

Location & Risk Mitigation

Snapshots usually reside on the same storage system as the original data. This means if the primary storage system fails (hardware malfunction, array corruption, physical damage), the snapshots residing on it are also lost. They offer zero protection against storage system failure.

Backup best practices dictate storing backups separately from the primary system, ideally following the 3-2-1 rule (3 copies, 2 different media, 1 off-site). This separation ensures that a failure affecting the primary data does not also destroy the backup copies, providing true disaster recovery capability.

Comparison Table

To summarize the key distinctions:

| Feature | Snapshot | Backup |

|---|---|---|

| Purpose | Short-term rollback, testing | Long-term retention, disaster recovery (DR) |

| Dependency | Dependent on original/chain | Independent, self-contained |

| Data | Often stores changes (deltas) | Full or incremental copies of actual data |

| Location | Typically same storage as original | Ideally separate storage, off-site |

| Retention | Short (hours/days) | Long (weeks/months/years) |

| Recovery | Fast rollback to recent state | Slower restore, but resilient |

| Risk Scope | Vulnerable to primary storage failure | Protects against primary storage failure |

Treating snapshots as a replacement for a robust backup strategy is a dangerous mistake. Use each tool for its intended purpose within a comprehensive data protection plan.

Best Practices: Managing Snapshots Effectively & Safely

To harness the power of snapshots without falling victim to their potential downsides, adhering to established best practices is essential. These guidelines, recommended by vendors and experienced professionals, help ensure stability, manage resources, and maintain data integrity. Think of them as the rules of the road for snapshot usage.

- Keep Snapshot Lifetimes SHORT: This is the golden rule. Snapshots should exist only as long as strictly necessary. Once the reason for the snapshot is gone (e.g., patch validated, test completed), delete it promptly. Aim for hours or days, resist letting them linger for weeks or months.

- Limit the Number and Depth of Snapshot Chains: Avoid creating numerous snapshots for a single VM or volume. Each snapshot adds complexity and potential performance overhead. Most vendors recommend keeping chains shallow, ideally no more than 2-3 snapshots deep, for optimal performance and easier management.

- Monitor Storage Consumption Actively: Keep a close eye on the storage space consumed by snapshot delta files. Use your virtualization platform or storage system’s monitoring tools. Set up alerts for datastore usage to proactively prevent snapshots from filling up available space and causing outages.

- Schedule & Monitor Snapshot Deletion/Consolidation: Delete snapshots promptly, but be aware that consolidation takes time and resources. Schedule deletions during low-activity periods if possible. Crucially, monitor that the consolidation process completes successfully; failures can occur and require manual intervention.

- Choose the Right Consistency Level for the Workload: Don’t just blindly create snapshots. Understand the needs of the application. Always use application-consistent snapshots for databases and other transactional applications to ensure data integrity upon restoration. Use file-system consistent or crash-consistent only when appropriate.

- Crucially: Do Not Rely Solely on Snapshots for Backup: Reiterate this vital point. Snapshots complement, but do not replace, a proper backup strategy involving regular, independent, and preferably off-site copies of your critical data. Ensure you have a robust backup system in place.

- Document Snapshot Purpose and Planned Removal: When you create a snapshot, document why it was created, who created it, and when it is scheduled for removal. Use the description fields provided by the platform. This prevents “orphaned” snapshots that linger indefinitely because no one remembers why they exist.

Potential Risks & Limitations to Consider

While highly useful, snapshots are not without risks and limitations. Being aware of these potential downsides allows for proactive mitigation and helps set realistic expectations about what snapshots can and cannot do. Ignoring these risks can lead to performance issues, storage problems, or even data loss.

- Performance Degradation Concerns: As detailed earlier, running systems on snapshots, especially deep chains or under heavy I/O load, can significantly slow down application performance. Reads and writes become less efficient. Consolidation processes can also cause temporary performance dips or stuns.

- Unexpected Storage Consumption: Delta files can grow much larger than anticipated if snapshots are kept for long periods or if the system undergoes many changes. This risk of running out of storage space is a major operational concern that requires constant vigilance and monitoring.

- Data Loss if Primary Storage Fails / Chain Breaks: Because snapshots depend on the base disk and the integrity of the chain, they offer no protection against underlying storage failure. If the storage hosting the VM/volume fails, the snapshots are lost too. Corruption within the chain can also render snapshots useless.

- Snapshot Consolidation Failures & Complexity: The process of merging snapshot data back (consolidation) can sometimes fail, especially in complex environments or if issues like file locking occur. Failed consolidations often require manual cleanup, which can be complex and potentially disruptive.

- Management Challenges at Scale: In large environments with hundreds or thousands of VMs, tracking and managing snapshot lifecycles manually becomes difficult. Lack of automation or clear policies can lead to snapshot sprawl, consuming resources and increasing risk. Proper governance and automation tools are key.

Conclusion: Leveraging Snapshots Wisely

Snapshots are undeniably a powerful tool in the modern IT administrator’s and developer’s arsenal. They provide remarkable flexibility for managing system changes, testing updates, and recovering quickly from operational errors. Their speed and ease of use offer significant advantages for short-term tasks.

However, their power comes with responsibility. The key to leveraging snapshots effectively lies in understanding their fundamental nature: they are point-in-time, dependent captures, not independent backups. Recognizing their limitations regarding performance, storage, and dependency is crucial for avoiding common pitfalls.

By adhering to best practices—keeping snapshots short-lived, monitoring resources, ensuring consistency, limiting chain depth, and crucially, maintaining a separate, robust backup strategy—you can harness the benefits of snapshots while mitigating the risks. Always consult your specific platform’s documentation (VMware, Hyper-V, AWS, Azure, your storage vendor) for detailed guidance.

Used wisely, snapshots enhance operational agility and reduce the risk associated with necessary system changes. Used improperly, they can introduce performance bottlenecks, storage crises, and a false sense of security. Integrate them thoughtfully into your workflows and data protection policies.

Frequently Asked Questions (FAQ) about Snapshots

- Q1: Can snapshots replace my backups? Absolutely not. Snapshots depend on the original data and reside on the same storage, offering no protection against primary storage failure or disaster. Backups create independent copies stored separately for long-term retention and true disaster recovery. Use both appropriately.

- Q2: How long is it safe to keep a snapshot? Keep snapshots for the shortest time possible, ideally measured in hours or a few days at most. Delete them as soon as the reason for their creation (e.g., patch testing, development task) is complete. Long-running snapshots increase risks related to performance and storage space.

- Q3: Do snapshots impact my system’s performance? Yes, they can. Running on snapshots often introduces performance overhead, especially for I/O-intensive applications or when snapshot chains are deep. The process of deleting/consolidating snapshots can also be resource-intensive. Monitor performance and keep snapshot usage brief.

- Q4: What happens when I delete a snapshot? Deleting a snapshot initiates a consolidation process. The data changes stored in the snapshot’s delta file are merged into the parent disk (either the previous snapshot or the base disk). This process can take time and consume significant I/O resources.

- Q5: Are snapshots safe for databases? They can be, but only if you use application-consistent snapshots. These coordinate with the database (e.g., via VSS) to ensure data is in a consistent state before capture. Using crash-consistent snapshots for active databases risks corruption upon restoration.