Ever wondered what “containerization” is all about, especially with terms like Docker popping up everywhere? Don’t worry, you’re in the right place! Containerization is a method of packaging software code along with all its necessary components, like libraries and settings, into a single executable unit called a container.

This package can then run smoothly and consistently, almost anywhere – from a developer’s laptop to the cloud. It’s become a cornerstone of modern software development and IT operations. Stick around, and this friendly guide will walk you through exactly what containerization means, how it works its magic, the awesome benefits it brings, how it compares to traditional Virtual Machines (VMs), and some common places you’ll find it being used today. Let’s dive in!

So, What Exactly Is Containerization?

At its core, containerization provides a standard way to package your application’s code, configurations, and dependencies into an isolated ‘container’. Think of it as putting everything your application needs to run into a self-contained box.

This approach solves a common headache in software: an application working perfectly on a developer’s computer but failing when moved to a testing or production environment. Containerization ensures consistency by bundling the application with its specific environment needs.

The concept isn’t entirely new. It evolved from earlier isolation technologies such as Unix chroot, FreeBSD jails, Solaris Zones, and Linux Containers (LXC). Docker, released in 2013, made containers much easier to use. That led to their widespread adoption and standardized how software applications are packaged and distributed.

Imagine you’re moving house. Instead of carrying items one by one, you put them in standardized moving boxes. Containers do something similar for software, ensuring all parts arrive and work together correctly, regardless of the destination server or cloud.

This packaging includes the application code itself, runtime environments (like Java or Node.js), system tools, libraries, and configuration settings. Everything needed is inside the container, making the application predictable and reliable wherever the container is deployed.

How Does Containerization Actually Work?

So how does this container magic happen? Containerization relies on operating-system-level (OS-level) virtualization. Unlike Virtual Machines (VMs), containers share the host machine’s OS kernel.

Think of the kernel as the core manager of the operating system: it schedules processes, manages memory, and talks to the hardware. Containers reuse the host’s kernel instead of each booting a complete OS of its own (as VMs do). This makes them much more lightweight and faster to start.

This sharing is possible thanks to clever features built into modern operating systems, particularly Linux. Two key technologies enable container isolation: Namespaces and Control Groups (cgroups). Let’s break those down simply.

Namespaces are like giving each container its own private view of the system. One container can’t see the processes, network interfaces, user IDs, or file systems of another container, even though they run on the same host machine.

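For example, a container’s main process sees itself as process ID 1, the position normally held by a system’s init process. It is completely unaware of other processes running outside its namespace on the host. With user namespaces, a process can even run as ‘root’ (user ID 0) inside the container while being mapped to an unprivileged user on the host. This isolation prevents interference between applications.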
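To make the idea concrete, here is a toy Python model of a PID namespace. It is a simulation for illustration only, not how the kernel implements namespaces. Each namespace numbers its own processes starting at 1, while the host keeps a global PID for every process. The class names, the `spawn` method, and the starting host PID of 1000 are all made up for this sketch.

```python
from itertools import count


class PidNamespace:
    """Toy PID namespace: a private numbering space where the first process gets PID 1."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._local_pids = count(1)
        self.members: dict[int, int] = {}  # container-local PID -> host PID

    def visible_pids(self) -> list[int]:
        # A process inside this namespace can only see PIDs registered here.
        return sorted(self.members)


class Host:
    """Toy stand-in for the host kernel's global PID table."""

    def __init__(self, first_pid: int = 1000) -> None:
        self._host_pids = count(first_pid)  # arbitrary starting PID for the demo
        self.all_pids: list[int] = []

    def spawn(self, ns: PidNamespace) -> tuple[int, int]:
        """Start a process in `ns`; return (PID seen inside, PID seen by the host)."""
        host_pid = next(self._host_pids)
        local_pid = next(ns._local_pids)
        ns.members[local_pid] = host_pid
        self.all_pids.append(host_pid)
        return local_pid, host_pid


host = Host()
web, db = PidNamespace("web"), PidNamespace("db")
print(host.spawn(web))     # (1, 1000): web's first process believes it is PID 1
print(host.spawn(db))      # (1, 1001): so does db's, independently
print(host.spawn(web))     # (2, 1002)
print(web.visible_pids())  # [1, 2]: db's process is invisible from inside web
print(host.all_pids)       # [1000, 1001, 1002]: the host sees everything
```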

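Control Groups (cgroups), on the other hand, manage resource allocation. They limit how much CPU time, memory (RAM), and disk input/output (I/O) each container can use, and how many processes it can create. Network bandwidth is not limited by cgroups directly. It is typically shaped with the kernel’s separate traffic-control (tc) tooling. Together, these limits prevent one greedy container from hogging all resources.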

Think of cgroups as setting allowances. You can configure a specific container to use, say, only 1 CPU core and 512MB of RAM, ensuring fair resource distribution among all containers running on the host machine.
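Here is a rough sketch of what such an allowance looks like at the kernel level. The Python function below converts "1 CPU core and 512 MB of RAM" into the values you would write to the cgroup v2 interface files `cpu.max` and `memory.max`. It makes three assumptions:

- the host uses the cgroup v2 unified hierarchy;
- the CPU period is the kernel's default of 100,000 microseconds;
- "512MB" means 512 MiB.

The function name is hypothetical. It only builds strings and does not touch `/sys/fs/cgroup`, which would require root. On a cgroup v2 host, Docker's `--cpus` and `--memory` flags end up configuring these same controls for you.

```python
def cgroup_v2_limits(cpus: float, memory_mib: int,
                     period_us: int = 100_000) -> dict[str, str]:
    """Translate a CPU/memory allowance into cgroup v2 interface-file contents.

    Hypothetical helper: it only builds the strings; writing them into
    /sys/fs/cgroup/<group>/ would require root privileges.
    """
    if cpus <= 0 or memory_mib <= 0:
        raise ValueError("limits must be positive")
    # cpu.max holds "<quota> <period>" in microseconds: the group may use
    # `quota` microseconds of CPU time (summed over all cores) per period.
    quota_us = round(cpus * period_us)
    return {
        "cpu.max": f"{quota_us} {period_us}",
        # memory.max holds a hard memory limit in bytes.
        "memory.max": str(memory_mib * 1024 * 1024),
    }


print(cgroup_v2_limits(cpus=1, memory_mib=512))
# {'cpu.max': '100000 100000', 'memory.max': '536870912'}
```

A quota equal to the period means "one full core's worth of CPU time per period." Calling it with `cpus=1.5` would allow 150,000 microseconds per 100,000-microsecond period, spread across cores.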

The blueprint for a container is called a Container Image. This is a read-only template containing the application code, libraries, and dependencies. It’s often built in layers, making updates efficient as only changed layers need downloading.
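The layer idea can be illustrated with content addressing. Each layer is identified by a hash of its contents, so a machine only downloads layers whose digest it does not already have. The Python sketch below is simplified: real OCI images hash the compressed layer archives and list them in a manifest. The layer contents here are placeholder byte strings.

```python
import hashlib


def layer_digest(content: bytes) -> str:
    """Identify a layer by a hash of its content ("sha256:<hex>" style)."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


def layers_to_pull(image_layers: list[bytes], local_cache: set[str]) -> list[str]:
    """Return the digests of layers missing locally, in image order."""
    return [d for d in map(layer_digest, image_layers) if d not in local_cache]


# Placeholder contents standing in for real layer archives.
base_os = b"base OS userland files"
runtime = b"language runtime"
app_v1 = b"application code v1"
app_v2 = b"application code v2"

# This machine already pulled version 1 of the image.
cache = {layer_digest(layer) for layer in (base_os, runtime, app_v1)}

# Version 2 changes only the top layer, so only that layer is downloaded.
missing = layers_to_pull([base_os, runtime, app_v2], cache)
print(len(missing))  # 1
```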

When you want to run the application, a Container Engine (like the popular Docker Engine) takes the image and creates a running instance of it: the Container. The engine manages the container’s lifecycle (creating, starting, stopping, and removing it) and sets up its namespaces and cgroups.

Under the hood, the engine relies on a Container Runtime. Docker Engine, for example, delegates to containerd. containerd in turn calls a low-level OCI runtime such as runc, which creates the container’s namespaces and cgroups and starts its processes. CRI-O fills a similar role for Kubernetes.
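The lifecycle the engine manages can be pictured as a small state machine. The Python sketch below uses a simplified subset of the states Docker reports: `created`, `running`, `paused`, and `exited`. The transition table is an assumption for illustration. Real engines have more states (such as `restarting` and `dead`) and more rules.

```python
from enum import Enum


class State(Enum):
    CREATED = "created"
    RUNNING = "running"
    PAUSED = "paused"
    EXITED = "exited"


# Simplified, illustrative transitions: action -> (allowed source states, target state).
TRANSITIONS = {
    "start": ({State.CREATED, State.EXITED}, State.RUNNING),
    "pause": ({State.RUNNING}, State.PAUSED),
    "unpause": ({State.PAUSED}, State.RUNNING),
    "stop": ({State.RUNNING}, State.EXITED),
}


class Container:
    def __init__(self, image: str) -> None:
        self.image = image
        self.state = State.CREATED  # a container is created from an image before it runs

    def apply(self, action: str) -> State:
        sources, target = TRANSITIONS[action]
        if self.state not in sources:
            raise RuntimeError(f"cannot {action} a container that is {self.state.value}")
        self.state = target
        return self.state


c = Container("example/web:1.0")  # hypothetical image name
for action in ("start", "pause", "unpause", "stop", "start"):
    print(action, "->", c.apply(action).value)
```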

So, containers provide isolated environments by cleverly partitioning system resources and views using namespaces and cgroups, all while sharing the host OS kernel for efficiency. This is fundamentally different from VMs, which virtualize entire hardware stacks.

Why All the Buzz? Key Benefits of Containerization

Containerization has become incredibly popular for several compelling reasons. It offers tangible advantages for developers, operations teams, and businesses. Let’s explore the main benefits that contribute to its widespread adoption in modern IT.

Lightweight & Fast

Containers are significantly more lightweight than Virtual Machines (VMs) because they don’t bundle a full operating system. They share the host OS kernel, drastically reducing their size and resource footprint (memory, disk space).

This lean architecture means containers start almost instantly – typically in seconds or even milliseconds. Compare this to VMs, which need to boot an entire OS and can take several minutes to become operational. This speed dramatically accelerates development and deployment cycles.

Imagine needing to quickly test a small code change. Spinning up a lightweight container is much faster than waiting for a full VM to boot, allowing developers to iterate more rapidly and improve productivity.

Runs Anywhere (Portability)

A container packages an application and its dependencies, ensuring it runs reliably across different computing environments. This solves the classic “it works on my machine” problem faced by developers.

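Whether moving from a developer’s laptop to a testing server, or from an on-premises data center to a public cloud (like AWS, Azure, or GCP), the containerized application behaves consistently. The one requirement is that the host provides a compatible kernel and CPU architecture. A Linux image built for x86-64, for instance, needs a Linux kernel on x86-64 hardware or an emulation layer. Beyond that, the underlying infrastructure differences are abstracted away.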

This portability simplifies development workflows and deployment strategies. Teams can build a container image once and deploy that exact same artifact across various stages (development, testing, staging, production) and infrastructures without modification.

For instance, a development team can build and test a containerized application on their local machines. The operations team can then take that same container image and deploy it to production servers or a cloud environment with high confidence it will work identically.

Efficient Resource Use

Because containers share the host OS kernel and have less overhead, you can run many more containers on a single host machine compared to VMs. This leads to significantly better server consolidation and resource utilization.

Instead of dedicating an entire virtual OS to each application instance (as with VMs), containers use resources more granularly. This efficiency translates directly into cost savings, requiring less hardware and reducing infrastructure expenses.

Companies often report substantial improvements in server density after adopting containers. This allows them to maximize their hardware investments or reduce their cloud spending by running more workloads on fewer virtual or physical machines.

Faster Development & Deployment

Containerization streamlines the entire software development lifecycle (SDLC), enabling faster build, test, and release cycles. This agility is a key component of modern DevOps practices and Continuous Integration/Continuous Deployment (CI/CD) pipelines.

Developers can quickly build container images incorporating new code changes. These images can then be automatically tested and deployed through CI/CD pipelines, reducing manual effort and speeding up the time-to-market for new features or fixes.

For example, a CI/CD tool like Jenkins or GitLab CI can automatically build a Docker image when code is pushed, run automated tests within a container based on that image, and then deploy the container to a staging or production environment if tests pass.

This automation and speed allow organizations to release software updates more frequently and reliably. Industry reports often highlight shorter deployment times and higher deployment frequency after teams adopt container-based workflows.

Scalability

Containers make it much easier to scale applications horizontally – meaning adding or removing instances of an application component based on demand. This is particularly beneficial for microservices architectures.

If one part of your application (like the user login service) experiences high traffic, you can quickly launch more containers running just that service. When demand drops, you can automatically remove the extra containers to save resources.

Container orchestrators like Kubernetes automate this scaling process based on metrics like CPU usage or incoming requests. This elastic scalability ensures applications remain responsive under varying loads while optimizing resource consumption.

Consider an e-commerce site during a major sale. An orchestrator can automatically scale up the number of containers handling product browsing and checkout to manage the surge in traffic, then scale them back down afterwards.
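Kubernetes' Horizontal Pod Autoscaler documents its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. It skips changes when the ratio is within a tolerance (0.1 by default). The Python sketch below implements just that formula plus min/max clamping. The default bounds of 1 and 10 replicas are hypothetical. The real controller also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA scaling rule, simplified (no readiness or stabilization logic)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to the target: leave the replica count alone
    desired = math.ceil(current * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# Target: 50% average CPU per replica.
print(desired_replicas(3, current_metric=90, target_metric=50))  # 6 (sale traffic spike)
print(desired_replicas(6, current_metric=20, target_metric=50))  # 3 (traffic drops)
print(desired_replicas(4, current_metric=52, target_metric=50))  # 4 (within tolerance)
```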

Improved Consistency

Containerization keeps development, testing, and production environments consistent. Every container created from the same image starts from an identical file system, with the same code and dependencies. This greatly reduces environment-related bugs and deployment issues.

Developers can be confident that the code they write and test within a container on their laptop will behave the same way when deployed in a container in production. This reduces unexpected failures and simplifies troubleshooting.

This consistency extends to dependencies. The container image locks down specific versions of libraries, runtimes, and tools, preventing conflicts that might arise from different versions being installed in different environments.

Containerization vs. Virtual Machines (VMs): What’s the Difference?

A common point of confusion is how containers differ from Virtual Machines (VMs). While both provide isolated environments for running applications, the fundamental difference lies in their level of abstraction and resource usage. Let’s compare them directly.

Architecture

Containers virtualize the operating system, sharing the host OS kernel. Each container runs as one or more isolated processes in the user space of the host OS. They package only the application and its dependencies.

VMs, conversely, virtualize hardware. Each VM includes a full copy of an operating system (the guest OS) with its own kernel, running on virtualized hardware resources (CPU, RAM, network card). VMs run on top of a Hypervisor (like VMware ESXi or KVM).

Think of it like apartments versus houses. Containers are like apartments in a building (the host OS); they share the building’s foundation (kernel) but have their own isolated living space. VMs are like separate houses, each with its own foundation and utilities.

Resource Usage

Containers are much lighter, consuming fewer resources (CPU, RAM, disk space) than VMs. This is because they don’t need to run a full guest OS for each instance. Sharing the host kernel significantly reduces overhead.

VMs have higher resource overhead due to running a complete OS stack. Each VM requires its own dedicated memory, CPU allocation, and disk space for the OS files, leading to lower density per host machine.

This difference means you can typically run significantly more containers than VMs on the same physical hardware, leading to better server utilization and potential cost savings, as highlighted in the benefits section.
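A back-of-the-envelope calculation shows why. The Python snippet below counts only memory and uses made-up numbers:

- a 64 GiB host with 4 GiB reserved;
- an application needing 512 MiB;
- an assumed 1.5 GiB guest-OS overhead per VM;
- an assumed 16 MiB of overhead per container.

Real figures vary widely by workload and OS. Treat the result as an illustration of the shape of the difference, not a benchmark.

```python
def max_instances(host_mib: int, reserved_mib: int, app_mib: int, overhead_mib: int) -> int:
    """How many instances fit in RAM, counting only memory (a deliberate simplification)."""
    usable = host_mib - reserved_mib
    return usable // (app_mib + overhead_mib)


HOST_MIB = 64 * 1024     # hypothetical 64 GiB host
RESERVED_MIB = 4 * 1024  # hypothetical share kept for the host OS and engine
APP_MIB = 512            # memory the application itself needs

vms = max_instances(HOST_MIB, RESERVED_MIB, APP_MIB, overhead_mib=1536)     # assumed guest OS cost
containers = max_instances(HOST_MIB, RESERVED_MIB, APP_MIB, overhead_mib=16)  # assumed per-container cost
print(vms, containers)  # 30 116
```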

Startup Speed

Containers start almost instantly, usually within seconds or even milliseconds. They only need to start the application process within the already running host OS kernel’s context.

VMs take much longer to start, often minutes. They need to go through the entire boot process of their guest operating system, similar to starting a physical computer.

This speed difference is critical for rapid scaling and fast deployment cycles, making containers highly suitable for dynamic environments and CI/CD workflows where quick startup times are essential.

Density

Host machines can support a much higher density of containers compared to VMs. Due to their lower resource footprint, you can pack many isolated containerized applications onto a single server.

VM density is lower because each VM consumes a substantial chunk of the host’s resources for its guest OS and virtualized hardware.

Higher density translates to needing fewer physical or virtual host machines to run the same number of applications, leading to infrastructure cost savings and better energy efficiency.

Isolation Level

VMs provide strong isolation at the hardware level. Since each VM has its own kernel and OS, issues within one VM (like a kernel panic or security breach) are highly unlikely to affect other VMs on the same host.

Containers offer process-level isolation. While namespaces and cgroups provide good separation, all containers share the same host kernel. A severe vulnerability in the host kernel could potentially impact all containers running on it.

Therefore, VMs are often preferred for scenarios requiring maximum security separation or running applications that need different operating systems or specific kernel versions on the same host. Containers offer sufficient isolation for most application workloads.

Typical Use Cases

Containers excel in scenarios like microservices, web applications, CI/CD pipelines, and packaging individual applications with their dependencies. They are ideal when you need speed, efficiency, and portability across environments.

VMs are often used for running applications requiring a full OS stack, different OS types on one host, legacy applications, or scenarios demanding the highest level of hardware-enforced isolation. They are also used as the underlying infrastructure to host container platforms.

| Feature | Containers | Virtual Machines (VMs) |
|---|---|---|
| Virtualization | OS-Level | Hardware-Level |
| OS Kernel | Shared Host Kernel | Separate Guest Kernel per VM |
| Overhead | Low | High |
| Size | Lightweight (MBs) | Heavyweight (GBs) |
| Startup Time | Fast (Seconds/Milliseconds) | Slow (Minutes) |
| Density | High | Low |
| Isolation | Process-Level | Kernel/Hardware-Level |
| Use Cases | Microservices, Web Apps, CI/CD, Dev/Test | Full OS Isolation, Legacy Apps, Multi-OS |

Ultimately, containers and VMs are not always mutually exclusive. It’s very common to run containers inside VMs to combine the benefits of VM isolation with container flexibility and efficiency.

Where is Containerization Used? Common Examples

Containerization isn’t just a theoretical concept; it’s actively used across various domains in software development and IT operations. Here are some common examples illustrating where containers provide significant value:

Microservices Architecture

Containers are a natural fit for deploying microservices. Microservices break down large, monolithic applications into smaller, independent services (e.g., user authentication, product catalog, shopping cart).

Each microservice can be packaged in its own container with its specific dependencies and runtime environment. This allows services to be developed, deployed, updated, and scaled independently, increasing agility and resilience.

For example, an online streaming service might have separate containerized microservices for user profiles, video streaming, recommendations, and billing. If the recommendation engine needs an update, only its container needs redeploying, minimizing impact on other services.

CI/CD Pipelines

Containers play a crucial role in modern Continuous Integration/Continuous Deployment (CI/CD) pipelines. They provide consistent and reproducible environments for building, testing, and deploying software automatically.

A typical CI/CD workflow might involve:

- Building a container image containing the latest code changes.

- Running automated tests (unit, integration) inside containers created from that image.

- Pushing the validated image to a container registry.

- Deploying the container image to staging or production environments.

This ensures that the build and test environment perfectly matches the deployment environment, reducing errors and accelerating the release process significantly. Developers get faster feedback, and deployments become more reliable.
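The four stages listed above can be sketched as a fail-fast sequence in Python. Each stage here is a placeholder lambda standing in for a real command, such as building, testing, pushing, or deploying an image. The point is the ordering rule: if the tests fail, the image is never pushed or deployed.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure (fail fast)."""
    log: list[str] = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break  # later stages (push, deploy) never see an untested image
    return log


def make_stages(tests_pass: bool) -> list[Stage]:
    # Placeholder lambdas standing in for real build/test/push/deploy commands.
    return [
        ("build image", lambda: True),
        ("run tests in container", lambda: tests_pass),
        ("push to registry", lambda: True),
        ("deploy", lambda: True),
    ]


print(run_pipeline(make_stages(tests_pass=True)))
# ['build image: ok', 'run tests in container: ok', 'push to registry: ok', 'deploy: ok']
print(run_pipeline(make_stages(tests_pass=False)))
# ['build image: ok', 'run tests in container: FAILED']
```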

Web Applications

Deploying web applications and their dependencies (like web servers and databases) using containers is extremely common. It simplifies dependency management and ensures the application runs consistently across different servers.

Imagine deploying a Python web application using the Flask framework. You can create a container image including Python, Flask, necessary libraries (via requirements.txt), and perhaps a web server like Gunicorn. This container can then be deployed anywhere without worrying about Python versions or library conflicts.

Multi-container setups using tools like Docker Compose are also popular for defining and running applications composed of multiple services (e.g., a web front-end container, a backend API container, and a database container) together in development or testing.

Development & Testing Environments

Containers provide developers with consistent, isolated environments that mirror production. This eliminates the “works on my machine” problem and makes collaboration easier.

A developer can quickly spin up a container with the exact database version or specific library needed for a project, without installing it directly on their host machine or conflicting with other projects.

Testing teams can also use containers to create clean, isolated environments for running various tests (performance, security, integration) without interference, ensuring reliable and repeatable test results.

Cloud-Native Applications

Containerization is a foundational technology for building cloud-native applications. These applications are designed to leverage the scalability, resilience, and flexibility offered by cloud computing platforms.

Platforms like Kubernetes provide robust orchestration capabilities for managing containerized applications at scale in the cloud. They automate deployment, scaling, load balancing, and self-healing, making it easier to build and operate complex, distributed systems.

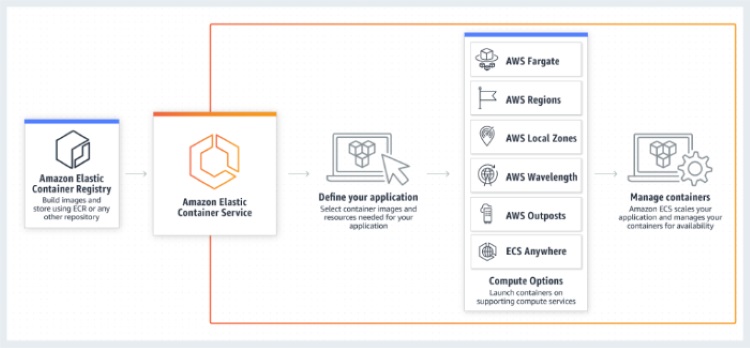

Most major cloud providers (AWS, Azure, GCP) offer managed Kubernetes services (EKS, AKS, GKE) and other container services (like AWS Fargate or Azure Container Instances) that simplify running containers in the cloud without managing the underlying infrastructure.

Migrating Legacy Applications

While not always straightforward, containers can sometimes be used to package and modernize legacy applications. This allows older applications to run more consistently on modern infrastructure or in the cloud without extensive rewriting.

This process, often called “lift and shift,” involves putting the existing application and its dependencies into a container. While it doesn’t magically modernize the code itself, it simplifies deployment and management, potentially serving as a first step towards further modernization efforts.

Popular Tools in the Container World

The container ecosystem is vast, but a few key tools dominate the landscape. Understanding these helps grasp how containerization is implemented in practice. Let’s look at the most prominent ones.

Docker

Docker is arguably the most well-known containerization platform. It provides tools and a platform to build, ship, and run applications inside containers easily. Docker popularized container technology, making it accessible to a broad audience.

Key Docker components include:

- Docker Engine: The underlying client-server application that builds and runs containers.

- Dockerfile: A text file containing instructions to build a Docker image.

- Docker Hub: A cloud-based registry for storing and sharing Docker images.

- Docker Compose: A tool for defining and running multi-container Docker applications.

Docker simplified the process of creating portable application containers, significantly impacting software development and deployment workflows worldwide. When people talk about containers, they often implicitly refer to Docker containers.

Kubernetes (K8s)

Kubernetes, often abbreviated as K8s, is the leading open-source platform for container orchestration. While Docker helps create and run individual containers, Kubernetes manages large numbers of containers across clusters of machines.

Developed originally by Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes automates the deployment, scaling, load balancing, self-healing, and management of containerized applications. It’s the de facto standard for running containers in production at scale.

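Key Kubernetes concepts include:

- Pods: the smallest deployable unit, made of one or more containers that share networking and storage.
- Services: a stable network endpoint that load-balances across a set of Pods.
- Deployments: declare how many replicas of a Pod should run and roll out updates.
- Nodes: the worker machines that run Pods.

Together, these handle the complexities of running distributed systems reliably.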
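Kubernetes' self-healing and scaling come from control loops. A control loop repeatedly compares the desired state (for example, "3 replicas") with the actual state and acts on the difference. The Python class below is a toy version of that reconcile pattern, not the real Deployment controller. The `ReplicaController` name and the pod naming scheme are made up. It also picks which pod to delete by sorted name, whereas Kubernetes uses its own selection criteria.

```python
from itertools import count


class ReplicaController:
    """Toy control loop in the spirit of a Kubernetes Deployment (not the real controller)."""

    def __init__(self, name: str, replicas: int) -> None:
        self.name = name
        self.replicas = replicas     # desired state, as declared by the user
        self.pods: set[str] = set()  # actual state, as observed in the cluster
        self._ids = count(1)

    def reconcile(self) -> list[str]:
        """Compare desired vs. actual state once and return the actions taken."""
        actions = []
        while len(self.pods) < self.replicas:
            pod = f"{self.name}-{next(self._ids)}"
            self.pods.add(pod)
            actions.append(f"create {pod}")
        for pod in sorted(self.pods)[self.replicas:]:  # surplus pods, if any
            self.pods.remove(pod)
            actions.append(f"delete {pod}")
        return actions


web = ReplicaController("web", replicas=3)
print(web.reconcile())     # ['create web-1', 'create web-2', 'create web-3']
web.pods.discard("web-2")  # simulate a crashed pod or a failed node
print(web.reconcile())     # ['create web-4']  (self-healing)
web.replicas = 2           # scale down
print(web.reconcile())     # ['delete web-4']
```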

Other Important Tools

While Docker and Kubernetes are dominant, other tools play significant roles:

- Podman: An alternative container engine often seen as a direct competitor to Docker, emphasizing daemonless operation.

- containerd / CRI-O: Container runtimes that Kubernetes commonly talks to through its Container Runtime Interface (CRI) to run containers on each node.

- OpenShift: A Kubernetes distribution by Red Hat with additional developer and operational tools built on top.

- Rancher: Another popular platform for managing Kubernetes clusters across different infrastructures.

- Helm: Often called “the package manager for Kubernetes,” simplifying the deployment and management of complex applications on K8s clusters.

Understanding these tools provides context for how organizations implement and manage containerized workloads in real-world scenarios, from single developer machines to large-scale production clusters.

Wrapping Up: Is Containerization Right for You?

So, we’ve journeyed through the world of containerization! We’ve seen that it’s a powerful technology for packaging applications with their dependencies, ensuring they run consistently and reliably across different environments.

We explored how it works using OS-level virtualization (sharing the host kernel via namespaces and cgroups), making containers much lighter and faster than traditional VMs. This efficiency leads to key benefits like portability, better resource utilization, and accelerated development cycles.

We also clarified the crucial differences between containers and VMs, highlighting their respective strengths and ideal use cases. Containers shine for microservices and modern applications, while VMs offer robust hardware isolation.

From streamlining CI/CD pipelines and simplifying web app deployment to enabling cloud-native architectures, containerization, powered by tools like Docker and Kubernetes, has fundamentally changed how software is built, shipped, and run.

If you’re involved in software development or IT operations, understanding containerization is increasingly essential. Its benefits in terms of speed, efficiency, and consistency offer compelling advantages for teams of all sizes.

Hopefully, this friendly guide has demystified containerization for you. It’s a technology shaping the future of software deployment, and exploring tools like Docker is a great next step if you’re ready to try it out!