Ever wonder what makes your computer, smartphone, or server actually work? Deep beneath the shiny applications and user interfaces lies a critical, hidden component. This component works tirelessly, managing everything from your mouse clicks to complex calculations. Think of it as the unsung hero, the master conductor of your digital orchestra.

So, what is this crucial piece of software? It’s called the Kernel. Simply put, the kernel is the core program at the heart of an operating system (OS). It’s loaded into memory early in the boot process, typically by a bootloader, and it stays there, managing everything, until the system shuts down.

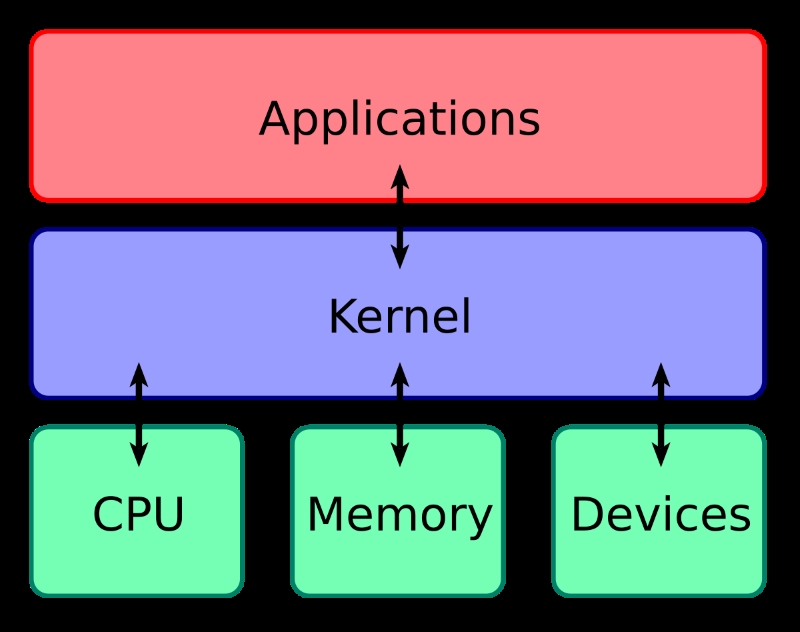

The kernel’s fundamental purpose is to act as the main bridge between your computer’s physical hardware (like the processor, memory, and disk drives) and the software applications you run. Without the kernel, your software wouldn’t be able to communicate with the hardware, and your computer would be little more than an expensive paperweight.

Understanding the kernel is key to understanding how computers operate at a fundamental level. This guide will walk you through exactly what the kernel is, explore its vital functions, examine how it works, look at different types, and explain why it’s so incredibly important. Let’s dive into the heart of your operating system.

What Does an Operating System Kernel Actually Do?

The kernel isn’t just a passive bridge; it’s an active manager juggling numerous critical tasks simultaneously. Its primary role is resource management – ensuring hardware and software work together smoothly and efficiently. Think of it as the central command center for all system operations.

It handles several key responsibilities behind the scenes. These functions ensure that your applications run correctly, share resources fairly, and don’t interfere with each other or the system’s stability. Let’s break down these essential duties one by one.

1. Process Management: The CPU’s Traffic Controller

What is process management? It’s how the kernel controls which programs get to use the Central Processing Unit (CPU), often called the computer’s brain, and for how long. Modern computers run many programs seemingly at once – this is multitasking, made possible by the kernel.

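The kernel acts like an incredibly fast traffic controller for the CPU. It uses a component called the scheduler to decide which process (a running program or part of one) gets the CPU’s attention next. On a busy system, these decisions are made hundreds or even thousands of times per second.

Switching the CPU from one process to another is known as context switching: the kernel saves the state of the outgoing process and restores the state of the incoming one. On a single CPU core, this rapid switching creates the illusion that multiple programs are running simultaneously, and on multi-core processors the kernel also spreads processes across cores so that some of them really do run in parallel. For example, you can browse the web while listening to music and downloading a file. The kernel manages the starting, pausing, resuming, and stopping of all these processes efficiently.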

It ensures fair access to the CPU, preventing any single application from monopolizing it. This careful balancing act by the kernel is crucial for a responsive and functional system, allowing multiple applications to share the processor’s power effectively.
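To make the scheduler idea concrete, here is a minimal sketch of round-robin scheduling, one of the simplest fair-sharing policies. It is a simulation only: the process names, burst times, and the `round_robin` function are illustrative assumptions, and real kernel schedulers use considerably more sophisticated policies.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Simulate round-robin scheduling.

    burst_times: dict mapping a process name to the CPU time it still needs.
    quantum: the longest slice a process may run before it is preempted.
    Returns the list of (name, time_run) slices in execution order.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")

    ready = deque(burst_times.items())  # ready queue of (name, remaining time)
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)  # run until the slice ends or the work is done
        timeline.append((name, run))
        if remaining > run:
            # Preempted: remember the leftover work and rejoin the back of the queue.
            ready.append((name, remaining - run))
    return timeline


# Hypothetical workload: three programs that need 5, 2, and 4 units of CPU time.
timeline = round_robin({"browser": 5, "music": 2, "download": 4}, quantum=2)
for name, run in timeline:
    print(f"{name} runs for {run}")
```

With a quantum of 2, the slices come out as browser, music, download, browser, download, browser. No program waits for another to finish completely, which is the fairness property described above.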

2. Memory Management: Allocating System RAM

What is memory management? It’s the kernel’s job to oversee the computer’s main memory, known as Random Access Memory (RAM). RAM is where programs and their data are stored while they are running, allowing for fast access by the CPU. The kernel ensures this limited resource is used efficiently.

The kernel allocates specific chunks of memory to processes when they start or request it. When a process finishes or no longer needs certain memory, the kernel deallocates it, making it available for other processes. This prevents programs from running out of memory unnecessarily.

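Furthermore, the kernel manages virtual memory. This clever technique gives each process its own private, protected address space, which the kernel maps onto physical RAM in fixed-size chunks called pages. Because pages that aren’t in active use can be moved out to disk (known as paging or swapping), the system can also run more applications than could fit into physical RAM alone.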

This protection ensures one application cannot accidentally (or maliciously) access or corrupt the memory being used by another application or the kernel itself. Effective memory management by the kernel is vital for both performance and stability, preventing crashes and slowdowns.
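The address translation behind virtual memory can be sketched in a few lines. This is a toy model, assuming a 4 KiB page size and a plain dictionary as the page table; real hardware uses multi-level page tables walked by the CPU’s memory management unit, and the names `translate` and `PageFault` are made up for the example.

```python
PAGE_SIZE = 4096  # bytes per page; 4 KiB is a common size and is assumed here


class PageFault(Exception):
    """Raised when a virtual page has no physical frame mapped."""


def translate(page_table, virtual_address):
    """Translate a virtual address using one process's page table.

    page_table maps virtual page numbers to physical frame numbers.
    """
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        # A real kernel would load the page from disk or terminate the process.
        raise PageFault(f"no mapping for virtual page {page_number}")
    return page_table[page_number] * PAGE_SIZE + offset


# Two processes use the same virtual address but land in different frames.
process_a = {0: 7, 1: 3}
process_b = {0: 2}

physical_a = translate(process_a, 100)  # frame 7: 7 * 4096 + 100
physical_b = translate(process_b, 100)  # frame 2: 2 * 4096 + 100
print(physical_a, physical_b)
```

Because each process has its own table, process B has no way to even name the physical memory that belongs to process A. That is the isolation described above.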

3. Device Management: Communicating with Hardware

What is device management? This is how the kernel handles communication between the software and all the physical hardware components connected to your computer. These include keyboards, mice, monitors, disk drives, network cards, printers, and graphics cards.

Applications cannot directly “talk” to most hardware. The kernel acts as the necessary intermediary. It uses specialized pieces of software called device drivers to translate generic requests from applications into specific commands that a particular piece of hardware understands.

Think of a device driver as a translator for a particular device or family of devices. When you save a file, your application tells the kernel, which then uses the appropriate disk driver to write the data to your hard drive or Solid-State Drive (SSD).

The kernel also handles interrupts. These are signals sent by hardware devices to the CPU to request attention (e.g., when you press a key or receive network data). The kernel processes these interrupts quickly, ensuring the system responds promptly to hardware events. Efficient device management ensures all your peripherals work seamlessly.
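The driver-as-translator idea can be sketched as a common interface with device-specific implementations behind it. Everything here is hypothetical: the class names, the device names, and the command strings are invented for illustration and do not correspond to real SATA or NVMe command formats.

```python
from abc import ABC, abstractmethod


class BlockDriver(ABC):
    """Generic interface the toy kernel expects from every storage driver."""

    @abstractmethod
    def write_block(self, block_number, data):
        """Return the device-specific command that would be issued."""


class ToySataDriver(BlockDriver):
    def write_block(self, block_number, data):
        # Invented command text, standing in for a real device protocol.
        return f"SATA WRITE lba={block_number} len={len(data)}"


class ToyNvmeDriver(BlockDriver):
    def write_block(self, block_number, data):
        return f"NVME WRITE slba={block_number} bytes={len(data)}"


class ToyKernel:
    """Keeps a table of registered drivers and routes generic requests to them."""

    def __init__(self):
        self.drivers = {}

    def register(self, device_name, driver):
        self.drivers[device_name] = driver

    def write(self, device_name, block_number, data):
        # The request is the same for every device; the driver picks the command.
        return self.drivers[device_name].write_block(block_number, data)


kernel = ToyKernel()
kernel.register("disk0", ToySataDriver())
kernel.register("disk1", ToyNvmeDriver())
print(kernel.write("disk0", 8, b"abcd"))
print(kernel.write("disk1", 8, b"abcd"))
```

The application-facing call is identical for both disks. Only the driver knows how each device wants to be addressed.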

4. System Call Interface: Software’s Request Line to the Kernel

What is the system call interface? It’s the specific way applications request services from the kernel. For security and stability reasons, applications run in a restricted environment called user space (covered in more detail below) and cannot directly perform privileged operations like accessing hardware or managing memory.

When an application needs to perform such an operation – like reading data from a file, sending information over the network, creating a new process, or requesting more memory – it must ask the kernel to do it on its behalf. This request is made through a system call.

The system call temporarily transfers control from the application to the kernel. The kernel performs the requested action in its privileged mode, ensuring it’s done safely and correctly, and then returns control (and often a result) back to the application.

This interface acts as a well-defined, secure gateway. It protects the kernel and hardware from direct manipulation by applications while providing applications with the essential services they need to function. It’s fundamental to the operating system’s architecture.
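You can see system calls in action from almost any language. The sketch below uses Python’s `os` module, whose low-level functions are thin wrappers around the operating system’s own calls. The helper name `pipe_round_trip` is an assumption for this example, and it is only meant for small messages that fit in the pipe’s buffer.

```python
import os


def pipe_round_trip(message):
    """Send bytes through a kernel-managed pipe and read them back."""
    read_fd, write_fd = os.pipe()  # ask the kernel to create a pipe
    try:
        os.write(write_fd, message)  # the kernel copies the bytes into the pipe
        return os.read(read_fd, len(message))  # the kernel copies them back out
    finally:
        os.close(read_fd)  # hand both descriptors back to the kernel
        os.close(write_fd)


echoed = pipe_round_trip(b"hello kernel")
print(echoed)
```

The program never touches the pipe’s memory itself. Each step is a request that the kernel carries out on its behalf and then returns from.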

5. Security and Protection: The System’s Guardian

What is the kernel’s role in security? The kernel is a cornerstone of system security, enforcing rules and permissions to protect system integrity and user data. It ensures that processes can only access the resources they are authorized to use.

One primary way it does this is by managing memory protection, ensuring one process cannot interfere with the memory space of another process or the kernel itself. This prevents bugs in one application from crashing the entire system or accessing sensitive data.

The kernel also manages file system permissions, controlling which users and processes can read, write, or execute files. When you try to open a file, the kernel checks if you have the necessary permissions before granting access.

Furthermore, the kernel operates with the highest privilege level, allowing it to control access to hardware and sensitive system operations. By strictly mediating requests via system calls, it prevents unauthorized actions, contributing significantly to the overall security posture of the operating system.
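File permissions are easy to inspect from a program. This sketch assumes a POSIX-style system such as Linux or macOS, where permission bits behave as shown; Windows handles `chmod` differently. The file name and the helper `permission_bits` are illustrative.

```python
import os
import stat
import tempfile


def permission_bits(path):
    """Return the permission bits the kernel has recorded for a file."""
    return stat.S_IMODE(os.stat(path).st_mode)


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "secret.txt")
    with open(path, "w") as handle:
        handle.write("private data\n")

    os.chmod(path, 0o600)  # owner may read and write; everyone else gets nothing
    owner_only = permission_bits(path)

    os.chmod(path, 0o644)  # owner may read and write; everyone else may read
    shared_read = permission_bits(path)

print(oct(owner_only), oct(shared_read))
```

Whenever a process later tries to open the file, the kernel compares these bits with the identity of the requesting user before it grants access.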

How the Kernel Works: Understanding Kernel Space vs. User Space

To perform its duties securely and reliably, the kernel operates within a protected environment. Operating systems typically divide the system’s memory and CPU operational capabilities into two distinct areas or modes. Understanding this separation is key to grasping how the kernel maintains control.

These two realms are known as Kernel Space and User Space. This fundamental division dictates what software can do and what resources it can access directly. It’s a crucial architectural concept for stability and security in modern operating systems.

Kernel Space (Privileged Territory)

What is Kernel Space? It’s a protected and privileged portion of the system’s memory reserved exclusively for the operating system kernel, its extensions (like some device drivers), and critical system data. Code running here has direct, unrestricted access to all hardware.

Think of Kernel Space as the system’s inner sanctum or control room. Operations executed here are considered highly trusted and have the potential to affect the entire system. Direct access to hardware and the ability to execute any CPU instruction are hallmarks of this space.

Because of this power, only the kernel and carefully vetted system components are allowed to run in Kernel Space. This isolation protects the core OS functions from interference by user applications, preventing accidental damage or malicious attacks from easily compromising the entire system.

User Space (Where Applications Live)

What is User Space? It’s the area of memory where all user applications and standard system utilities execute. Programs running in User Space operate with much lower privileges compared to the kernel. They cannot directly access hardware or critical system resources.

This is the environment where your web browser, word processor, games, and other applications run. If an application in User Space needs to perform a privileged action (like writing to disk or accessing the network card), it must request the kernel’s help via a system call.

This restriction is a vital safety measure. If an application crashes or misbehaves in User Space, it typically only affects that single application, not the entire operating system. The kernel remains protected in its separate space, ensuring system stability.
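This isolation is easy to observe. In the sketch below, a child process deliberately crashes while the parent carries on and simply inspects the exit status. The function name `run_crashing_child` is an assumption for the example, and the exact status value depends on the operating system.

```python
import subprocess
import sys


def run_crashing_child():
    """Start a separate process that aborts, and return its exit status."""
    result = subprocess.run(
        [sys.executable, "-c", "import os; os.abort()"],
        stderr=subprocess.DEVNULL,  # hide any crash message from the child
    )
    return result.returncode


status = run_crashing_child()
# The parent is still running: the crash was confined to the child process.
print(f"child ended abnormally with status {status}; parent is unaffected")
```

The kernel cleans up the crashed process’s memory and resources, and every other process keeps running.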

Kernel Mode vs. User Mode (CPU Privilege Levels)

Closely related to Kernel Space and User Space are the CPU’s operational modes. Most modern processors support at least two privilege levels: Kernel Mode and User Mode. On x86 processors, for example, these correspond to ring 0 and ring 3. These modes dictate what instructions the CPU is allowed to execute at any given moment.

When the CPU is running kernel code (operating in Kernel Space), it’s in Kernel Mode (also called supervisor mode or privileged mode). In this mode, the CPU has complete, unrestricted access to all hardware and can execute any instruction, including those that manage memory or control devices.

Conversely, when the CPU is executing application code (in User Space), it’s in User Mode (or non-privileged mode). Here, the CPU restricts access to hardware and certain instructions. If an application attempts a forbidden operation, the CPU generates an exception, often transferring control to the kernel.

The transition from User Mode to Kernel Mode happens during system calls, interrupts (hardware signals), or exceptions (errors). The kernel handles the request or event in Kernel Mode and then transitions the CPU back to User Mode to continue the application. This controlled transition is fundamental to OS security.
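The mode switch can be modeled as a tiny state machine. This is purely a teaching sketch: the instruction names, the `SYSCALL_TABLE`, and the `ToyCPU` class are invented, and on real processors the check is done in hardware, not in software.

```python
class ProtectionFault(Exception):
    """Raised when user-mode code attempts a privileged instruction."""


# Hypothetical table: each system call name maps to the kernel code it runs.
SYSCALL_TABLE = {"map_memory": ["write_page_table"]}


class ToyCPU:
    PRIVILEGED = {"halt", "disable_interrupts", "write_page_table"}

    def __init__(self):
        self.mode = "user"
        self.log = []  # (mode, instruction) pairs that actually executed

    def execute(self, instruction):
        if instruction in self.PRIVILEGED and self.mode != "kernel":
            raise ProtectionFault(f"{instruction} is not allowed in user mode")
        self.log.append((self.mode, instruction))

    def syscall(self, name):
        """Enter kernel mode, run the kernel's handler, then return to user mode."""
        self.mode = "kernel"
        try:
            for instruction in SYSCALL_TABLE[name]:
                self.execute(instruction)
        finally:
            self.mode = "user"  # always drop privileges before resuming the app


cpu = ToyCPU()
cpu.execute("add")  # ordinary instruction, fine in user mode
cpu.syscall("map_memory")  # privileged work happens inside the kernel
print(cpu.log, cpu.mode)
```

Note that the application only names the service it wants. Which privileged instructions run is decided by the kernel’s own table, not by the caller.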

Exploring Different Kernel Types (Architectures)

While all kernels perform similar core functions, they aren’t all designed the same way. Kernel architecture refers to the way the kernel is structured and how its different components interact. Different architectures offer trade-offs in terms of performance, modularity, size, and complexity.

The main types you’ll encounter are Monolithic, Microkernel, and Hybrid kernels. Each approach has distinct advantages and disadvantages, influencing the characteristics of the resulting operating system. Let’s explore these common kernel architectures.

1. Monolithic Kernels: The All-in-One Design

What is a Monolithic Kernel? It’s an architecture where the entire OS – including the scheduler, memory management, file systems, device drivers, and network stacks – runs together as a single large program in Kernel Space. All components can directly call each other.

This design was common in early operating systems like Unix. The primary advantage is performance. Because all components reside in the same address space (Kernel Space), communication between them is very fast, often just involving simple function calls.

However, the main disadvantage is lack of modularity and fault isolation. A bug in one component (like a faulty device driver) can potentially crash the entire kernel and thus the whole system. The codebase can also become very large and complex to manage and debug.

Modern monolithic kernels, like the Linux kernel, often mitigate these issues by being highly modular. They allow parts like device drivers and file systems to be loaded and unloaded dynamically as needed (Loadable Kernel Modules – LKMs), offering flexibility despite the core monolithic structure.
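On a Linux machine, the currently loaded modules are listed in the `/proc/modules` file, one module per line, with the name and size as the first two fields. The sketch below reads that file if it exists and returns an empty result elsewhere. The function names are assumptions for this example.

```python
from pathlib import Path


def parse_modules(text):
    """Extract module names and sizes (in bytes) from /proc/modules-style text."""
    modules = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) >= 2:
            modules[fields[0]] = int(fields[1])
    return modules


def loaded_modules(path="/proc/modules"):
    """Return the loaded modules, or an empty dict where the file is unavailable."""
    try:
        return parse_modules(Path(path).read_text())
    except OSError:
        return {}  # not Linux, or /proc is not accessible


modules = loaded_modules()
print(f"{len(modules)} kernel modules currently loaded")
```

The count changes as hardware is plugged in or file systems are mounted, which is the dynamic loading described above.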

2. Microkernels: Minimalist and Modular

What is a Microkernel? It’s an architecture that aims to keep the code running in privileged Kernel Space as small and simple as possible. Only the absolute essential functions – typically basic process scheduling, memory management primitives, and inter-process communication (IPC) – reside in the kernel itself.

Other OS services, such as device drivers, file systems, network protocols, and server processes, run as separate processes in User Space. These user-space services communicate with each other and the minimal kernel through a well-defined IPC mechanism.

The key advantage is modularity and enhanced stability/security. If a user-space service like a device driver crashes, it typically doesn’t bring down the entire kernel or system. Services can often be updated or replaced without rebooting. The smaller kernel codebase can be easier to verify and secure.

The main drawback is potential performance overhead. Communication between user-space services via IPC is inherently slower than direct function calls within a monolithic kernel, because each message has to cross the boundary between processes. Designing efficient IPC mechanisms is crucial for microkernel performance. Examples include QNX, MINIX 3, and Mach 3.0 (earlier Mach releases still ran BSD code inside the kernel).
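The message-passing style of a microkernel can be sketched with a queue between a client and a service. This is only an analogy: threads and `queue.Queue` stand in for separate user-space processes and kernel IPC, and the service, file name, and function names are invented for the example.

```python
import queue
import threading


def file_service(inbox):
    """A toy user-space file server: answer requests until told to stop."""
    files = {"motd": "hello from the file service"}
    while True:
        message = inbox.get()
        if message is None:  # shutdown signal
            break
        filename, reply_queue = message
        reply_queue.put(files.get(filename, ""))


def read_via_ipc(inbox, filename):
    """Send a request message and wait for the service's reply."""
    reply_queue = queue.Queue()
    inbox.put((filename, reply_queue))
    return reply_queue.get(timeout=5)


inbox = queue.Queue()
server = threading.Thread(target=file_service, args=(inbox,))
server.start()

content = read_via_ipc(inbox, "motd")
inbox.put(None)  # ask the service to shut down
server.join()
print(content)
```

Every read costs a request message and a reply message. In a monolithic kernel the same operation would be a single function call, and that difference is the overhead described above.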

3. Hybrid Kernels: A Balanced Approach

What is a Hybrid Kernel? It’s an architecture that attempts to combine the performance benefits of monolithic kernels with the modularity and stability advantages of microkernels. Hybrid kernels are often structured like microkernels but keep more services running in Kernel Space for speed.

Hybrid kernels typically have a minimal core like a microkernel but allow additional components (like file systems, graphics drivers, or networking stacks) to also run in Kernel Space for faster execution. They still rely on IPC for some communication but less extensively than pure microkernels.

This approach aims to strike a balance. By keeping performance-critical components in Kernel Space, they can avoid some of the overhead associated with pure microkernels. However, they may sacrifice some of the fault isolation benefits, as bugs in kernel-space components can still affect system stability.

Many modern, widely used operating systems employ hybrid designs. Notable examples include the Windows NT kernel (used in Windows 10, 11, Server) and Apple’s XNU kernel (used in macOS, iOS, watchOS, tvOS), which blends Mach microkernel concepts with components from BSD (a monolithic Unix variant).

Other Architectures (Brief Mention)

Beyond the main three, other less common kernel architectures exist, often tailored for specific needs or research:

- Nanokernel: An even more extreme version of a microkernel, aiming for the absolute minimum code in privileged mode, sometimes only handling hardware interrupts.

- Exokernel: Provides minimal hardware abstraction, focusing on securely multiplexing hardware resources. Applications link with library OSes to implement higher-level abstractions.

- Unikernel: A specialized approach where application code and required OS libraries are compiled together into a single-purpose, minimal image designed to run directly on hardware or a hypervisor, often used in cloud environments.

Real-World Kernel Examples: Where You Find Them

Kernels aren’t just theoretical concepts; they are the beating heart of the operating systems you interact with every day on your laptops, phones, servers, and embedded devices. Recognizing the kernels behind popular OSes helps illustrate the practical application of these different architectures.

Let’s look at a few prominent examples:

The Linux Kernel

Perhaps the most famous example, the Linux kernel is open-source and forms the foundation for countless Linux distributions (like Ubuntu, Debian, Fedora, and CentOS), the Android mobile OS, and ChromeOS. It also powers most of the world’s servers and supercomputers, along with many embedded systems.

Developed initially by Linus Torvalds in 1991, it follows a monolithic architecture but is highly modular. This means while the core services run in Kernel Space, components like device drivers and file systems can be dynamically loaded or unloaded as modules, providing great flexibility and hardware support.

Its open-source nature allows for constant development and contribution from a global community. This has led to its widespread adoption and adaptation for diverse hardware platforms, from tiny sensors to massive mainframes. Its stability and performance are well-regarded.

Windows NT Kernel

The kernel underpinning all modern versions of Microsoft Windows (since Windows NT 3.1, including Windows XP, 7, 8, 10, 11, and Windows Server variants) is the Windows NT Kernel. It is a proprietary kernel developed by Microsoft.

The NT Kernel employs a hybrid architecture. It features a core executive running in Kernel Space that handles essential services, while other components, including the user-mode parts of the environment subsystems (like the Win32 subsystem), run in User Space. Most device drivers, along with the window manager and graphics components, run in Kernel Space for performance.

This design aims to balance performance with robustness and compatibility. While not open-source, its internal workings are extensively documented (e.g., in the “Windows Internals” book series), providing insight into its complex structure and capabilities.

Apple’s XNU Kernel (macOS/iOS)

Apple’s operating systems – macOS, iOS, iPadOS, watchOS, and tvOS – are all built upon the XNU kernel. XNU stands for “X is Not Unix,” although it incorporates significant parts of Unix, specifically from the Berkeley Software Distribution (BSD).

XNU is also a hybrid kernel. It combines the Mach microkernel (developed at Carnegie Mellon University) for core functions like IPC, virtual memory, and scheduling, with components from FreeBSD (a BSD variant) for file systems, networking, and compliance with standards like POSIX.

Apple has made significant parts of XNU open-source. This hybrid approach allows Apple to leverage the modularity concepts from Mach while integrating mature, robust components from the BSD world, forming the foundation of their ecosystem’s performance and features.

Why is the Kernel So Critically Important?

By now, it should be clear that the kernel isn’t just a part of the operating system; it’s arguably the most fundamental part. Its importance cannot be overstated – without a functioning kernel, a general-purpose computer simply cannot operate as intended.

Let’s summarize why the kernel holds such a critical position:

- Hardware Abstraction: The kernel hides the complexities of diverse hardware from applications. Software developers don’t need to write code specific to every possible CPU, disk drive, or network card; they write to the kernel’s consistent interface (via system calls).

- Resource Management: It ensures fair and efficient sharing of limited system resources like CPU time, memory, and I/O devices among potentially many competing processes. This prevents chaos and optimizes system throughput.

- Security Foundation: The kernel enforces security boundaries between processes and between user applications and the system itself (via kernel/user space separation and permissions). It protects the system from accidental errors and malicious software.

- Enabling Multitasking: Through process management and scheduling, the kernel allows multiple applications to run concurrently, providing the responsive, multi-application environment users expect from modern computers.

- System Stability: By managing resources carefully and isolating processes, the kernel contributes significantly to overall system stability. A well-designed kernel can prevent bugs in one application from crashing the entire OS.

When the kernel encounters an unrecoverable error, it often triggers a “kernel panic” (on Unix-like systems) or a “Blue Screen of Death” (on Windows, where it is formally called a stop error or bug check). This deliberately halts the entire system to prevent further damage, such as corrupted data, underscoring the kernel’s absolutely critical role. It truly is the foundation upon which everything else is built.

Frequently Asked Questions (FAQ) about Kernels

Here are answers to some common questions people have about kernels:

- Q1: What is the difference between a kernel and an operating system? The kernel is the core component of the operating system. The OS includes the kernel plus other system software like shells (command-line interfaces), graphical user interfaces (GUIs), system utilities (like file managers), and standard libraries. Think kernel = engine, OS = entire car.

- Q2: Is the kernel hardware or software? The kernel is entirely software. It’s a complex program (or set of programs) that manages the physical hardware components, but it is not hardware itself.

- Q3: Where is the kernel located in memory? When the system is running, the kernel resides in a protected area of main memory (RAM), known as Kernel Space. This prevents user applications from accidentally overwriting or corrupting it.

- Q4: Can an operating system run without a kernel? For any general-purpose operating system designed to run multiple applications and manage hardware (like Windows, macOS, Linux, Android, iOS), the answer is essentially no. The kernel provides the fundamental services required. Highly specialized systems (like some embedded firmware or unikernels) might blur the lines, but they still contain kernel-like functionality.

Conclusion: The Unsung Hero of Your Computer

We’ve journeyed deep into the heart of the operating system to uncover the kernel. We’ve seen that it’s the central software component acting as the vital intermediary between your hardware and software applications. It’s the master manager, the security guard, and the communication facilitator all rolled into one.

From managing which programs get CPU time (process management) and how memory is shared (memory management), to enabling software to talk to peripherals (device management) and providing a secure way for applications to request services (system calls), the kernel’s responsibilities are vast and critical.

We explored how the separation of Kernel Space and User Space protects the system, and how different architectures like monolithic, microkernel, and hybrid designs offer various trade-offs. We saw real-world examples in Linux, Windows, and macOS/iOS, demonstrating the kernel’s ubiquitous presence.

Though it operates silently in the background, the kernel is undeniably the foundation upon which our entire digital experience is built. It’s the unsung hero ensuring stability, security, and functionality, making it possible for us to work, play, and connect using our complex computing devices. Understanding the kernel truly means understanding the core of computing itself.