Full virtualization is a cornerstone of modern computing, enabling multiple operating systems to run independently on a single physical machine. By combining a hypervisor with hardware assistance such as Intel VT-x and AMD-V, it delivers near-native performance and strong isolation between workloads. This article explores how full virtualization works, its core components, key advantages, and how it compares to other virtualization methods, helping you understand its role in today’s IT infrastructure.

Full Virtualization Definition

Full virtualization is a technique allowing a complete, unmodified operating system (like Windows or Linux) to run inside a simulated computer environment, known as a Virtual Machine or VM. This approach cleverly tricks the guest operating system into behaving exactly as if it were running directly on physical hardware, requiring zero changes to the OS itself.

The core component is the hypervisor (also called a Virtual Machine Monitor, or VMM), which acts as a meticulous intermediary between the guest OS and the physical server hardware. It creates and manages each VM, presenting it with a standard set of virtual hardware. Because the guest only ever sees this standard virtual hardware, it does not need to be specially adapted, which ensures wide compatibility.

This technology essentially provides a fully self-contained digital replica of a computer. The hypervisor diligently intercepts hardware requests from the guest OS, managing access to the underlying physical resources like CPU power and memory. This careful management ensures that multiple VMs can run securely and in isolation on a single physical machine, without interfering with each other.

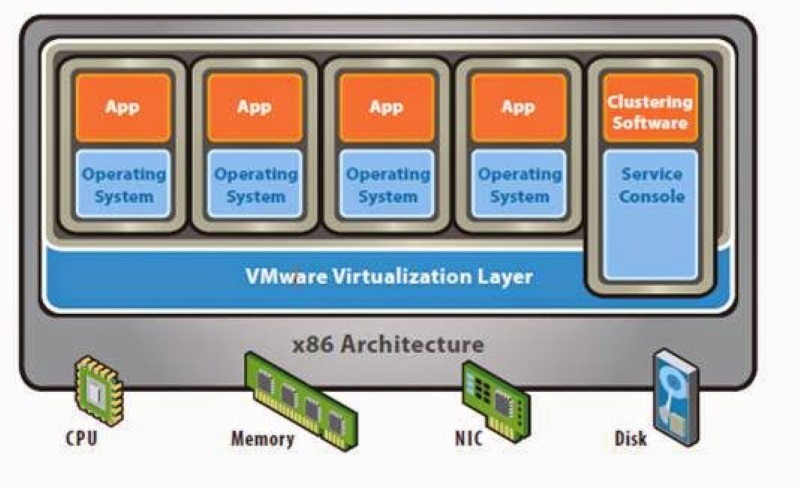

You see this principle in action with popular technologies. For instance, KVM (Kernel-based Virtual Machine) in Linux, VMware’s ESXi server hypervisor, and Microsoft’s Hyper-V all utilize full virtualization. They enable data centers and even desktop users to run diverse operating systems simultaneously, offering tremendous flexibility and efficient hardware use. This makes it a foundational technology for modern cloud computing and server management.

Core Mechanism of Full Virtualization: How It Actually Works

The core mechanism is a specialized software layer, the hypervisor, that intercepts sensitive (privileged) instructions issued by the guest operating system (OS). It handles these requests, usually with the help of dedicated CPU features such as Intel VT-x or AMD-V, so the unmodified guest OS runs correctly without ever taking direct control of the physical hardware. This keeps the host stable and each VM isolated.

The Challenge: Managing Direct Hardware Access

Operating systems are inherently designed assuming they have exclusive control over the computer’s hardware. They frequently issue special, sensitive commands known as privileged instructions to manage resources directly. If multiple operating systems attempted this simultaneously on the same physical machine, conflicts and system crashes would inevitably occur, preventing reliable operation.

This potential chaos highlights the fundamental challenge: how do you safely share hardware among multiple, independent operating systems? Without a sophisticated management layer, resource contention and instability would be unavoidable. This is precisely the problem that hypervisor technology, the engine behind full virtualization, was created to solve effectively and securely.

The Hypervisor’s Role: Interception and Emulation Explained

A classic technique hypervisors use is “trap-and-emulate.” The hypervisor runs the guest OS at a reduced privilege level, so when the guest tries to execute a privileged instruction, the CPU hardware itself ‘traps’ the attempt. This immediately pauses the guest’s execution and transfers control to the hypervisor.

Once alerted, the hypervisor examines the instruction the guest OS tried to run. It then either performs the required action safely on the guest’s behalf or accurately simulates the hardware’s response. Crucially, the guest OS remains completely unaware of this complex interception and translation process happening discreetly in the background.
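
A minimal Python sketch of trap-and-emulate, assuming a made-up instruction set that loosely borrows x86 mnemonics: ordinary instructions run directly, privileged ones (`CLI`, `STI`, `OUT` here) raise a simulated trap, and the hypervisor applies their effect to the VM's virtual state rather than to the real machine. All class and function names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical instruction set for illustration: these opcodes are "privileged",
# so a deprivileged guest cannot execute them directly.
PRIVILEGED = {"CLI", "STI", "OUT"}


class PrivilegedInstructionTrap(Exception):
    """Stands in for the CPU fault raised when a deprivileged guest runs a privileged instruction."""

    def __init__(self, opcode, operand):
        super().__init__(opcode)
        self.opcode = opcode
        self.operand = operand


@dataclass
class VirtualCPUState:
    # Per-VM virtual hardware state, owned by the hypervisor.
    interrupts_enabled: bool = True
    io_log: list = field(default_factory=list)
    acc: int = 0


def execute_on_cpu(opcode, operand, state):
    """Simulated physical CPU running guest code at reduced privilege."""
    if opcode in PRIVILEGED:
        # The guest lacks privilege, so the "hardware" traps instead of executing.
        raise PrivilegedInstructionTrap(opcode, operand)
    if opcode == "ADD":
        state.acc += operand  # ordinary instructions run directly
    elif opcode != "NOP":
        raise ValueError(f"unknown opcode {opcode}")


def emulate(trap, state):
    """Hypervisor handler: apply the privileged instruction to virtual state only."""
    if trap.opcode == "CLI":
        state.interrupts_enabled = False  # the VM's virtual interrupts, not the host's
    elif trap.opcode == "STI":
        state.interrupts_enabled = True
    elif trap.opcode == "OUT":
        state.io_log.append(trap.operand)  # goes to an emulated device, not real hardware


def run_guest(program):
    """Run a list of (opcode, operand) pairs, trapping into the hypervisor as needed."""
    state = VirtualCPUState()
    traps = 0
    for opcode, operand in program:
        try:
            execute_on_cpu(opcode, operand, state)
        except PrivilegedInstructionTrap as trap:
            traps += 1
            emulate(trap, state)  # hypervisor handles it, then the guest resumes
    return state, traps
```

For example, `run_guest([("ADD", 5), ("CLI", None), ("OUT", 0x41), ("STI", None), ("ADD", 2)])` traps three times, while the guest's own view of its state stays consistent.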

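These early methods came at a cost. On classic x86 processors, trap-and-emulate was not enough on its own, because some sensitive instructions (such as POPF) did not trap when run at reduced privilege; they silently behaved differently instead. Hypervisors therefore also relied on binary translation, rewriting such guest instructions on the fly before they ran. Both techniques required significant processing by the hypervisor for sensitive operations, which historically caused noticeable performance limitations and made it difficult to run highly demanding applications efficiently in early virtualized environments.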
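
To make binary translation concrete, here is a toy sketch: before a block of guest code runs, the hypervisor scans it and replaces sensitive instructions that would not trap with explicit calls into the hypervisor, caching the translated block so the rewrite cost is paid only once. The opcode set and the `CALL_VMM` marker are hypothetical; `POPF` merely stands in for an x86-style non-trapping sensitive instruction.

```python
# Hypothetical opcodes: sensitive instructions that would NOT trap when the
# guest runs deprivileged, so they must be found and rewritten in advance.
SENSITIVE_NON_TRAPPING = {"POPF", "SGDT"}


def translate_block(block):
    """Rewrite a block so non-trapping sensitive instructions become hypervisor calls."""
    translated = []
    for opcode, operand in block:
        if opcode in SENSITIVE_NON_TRAPPING:
            # Replace with an explicit hand-off to the hypervisor.
            translated.append(("CALL_VMM", (opcode, operand)))
        else:
            # Safe instructions are copied unchanged and later run directly.
            translated.append((opcode, operand))
    return translated


class TranslationCache:
    """Caches translated blocks by guest address so each block is translated once."""

    def __init__(self):
        self._cache = {}
        self.translations = 0

    def get(self, address, block):
        if address not in self._cache:
            self._cache[address] = translate_block(block)
            self.translations += 1
        return self._cache[address]
```

Caching matters because translation itself is overhead: code that runs in a loop should not be rewritten on every pass.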

The Power of Hardware Assistance (Intel VT-x / AMD-V)

Modern virtualization efficiency owes a great deal to dedicated hardware extensions built into CPUs, such as Intel VT-x and AMD-V. These features aren’t just minor improvements; they provide fundamental processor-level support specifically designed to accelerate virtualization tasks and reduce the hypervisor’s software overhead significantly.

These extensions let the guest OS run the vast majority of its instructions directly on the physical processor, safely and at near-native speed. Only the sensitive instructions and events that the hypervisor has configured to intercept trigger a fast, hardware-managed transition to the hypervisor (a “VM exit”), eliminating much of the earlier software complexity such as binary translation.

This processor-level support dramatically reduces the hypervisor’s workload in managing the guest OS. The direct result is significantly better performance, lower latency, and improved overall system responsiveness, making full virtualization practical and efficient even for resource-intensive enterprise applications and cloud computing workloads today.
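
On x86 Linux, you can check whether the processor advertises these extensions: the kernel lists the `vmx` flag for Intel VT-x and `svm` for AMD-V on the `flags` lines of `/proc/cpuinfo`. The helper below is a small sketch of that check; note that the flag reflects what the kernel sees, and firmware settings can still prevent a hypervisor from using the feature.

```python
from pathlib import Path


def hardware_virt_support(cpuinfo_text):
    """Return 'Intel VT-x', 'AMD-V', or None based on the flags in /proc/cpuinfo text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        # Feature lines look like "flags : fpu vme ... vmx ...". Lines such as
        # "vmx flags : ..." have a different key and are deliberately ignored.
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags.update(value.split())
    if "vmx" in flags:
        return "Intel VT-x"
    if "svm" in flags:
        return "AMD-V"
    return None


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        print(hardware_virt_support(path.read_text()) or "no VT-x/AMD-V flag visible")
    else:
        print("/proc/cpuinfo not found (this check assumes x86 Linux)")
```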

Key Characteristics of Full Virtualization

Full virtualization’s defining traits include its capacity to run unmodified operating systems, deliver strong VM isolation via the hypervisor, provide hardware independence, potentially introduce performance overhead, and heavily rely on modern hardware assistance features.

Here are the core characteristics that define how full virtualization operates:

- Unmodified OS Support: Its hallmark is running standard, off-the-shelf operating systems (like Windows or Linux distributions) without any changes. The OS thinks it’s on dedicated physical hardware.

- Strong Isolation: Each virtual machine (VM) functions within a securely separated boundary. This means a failure or security issue in one VM is contained and won’t typically affect others.

- Hardware Independence: Guest OSs interact with a standardized layer of virtual hardware managed by the hypervisor, not directly with the varied physical components, simplifying deployment and migration.

- Hypervisor Dependency: The entire virtualization process hinges on the hypervisor software (like KVM, VMware ESXi). This critical component manages all resource allocation and controls VM execution.

- Performance Considerations: While highly efficient, there’s typically a slight performance difference compared to running directly on physical hardware (‘bare metal’) due to the hypervisor’s management layer.

- Reliance on Hardware Assists: Modern full virtualization heavily depends on CPU features like Intel VT-x and AMD-V. These significantly accelerate performance by handling key operations in hardware.

Essential Components in a Full Virtualization Setup

A full virtualization setup comprises several interacting parts: the hypervisor software, the virtual machines (VMs) it creates, the underlying physical hardware (the host system), and the operating systems involved, namely the guest OS inside each VM and, with some hypervisor types, a host OS. These components work together to enable virtualization.

The Hypervisor (Virtual Machine Monitor – VMM): The Core Engine

The hypervisor, sometimes called a Virtual Machine Monitor (VMM), is the fundamental software or firmware layer that makes full virtualization possible. It sits directly on the hardware (Type 1) or on a host OS (Type 2), creating and managing the virtual machines. Think of it as the central engine driving the entire process.

Its critical jobs include allocating physical hardware resources like CPU time, memory, and storage to the various VMs running above it. The hypervisor also intercepts and manages sensitive hardware requests from guest operating systems, ensuring each VM operates correctly and remains isolated from the others sharing the same physical host.
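
To illustrate the allocation role, here is a hypothetical admission-control sketch in which the host admits a new VM only if it can back the requested vCPUs and memory. The policy (up to four vCPUs per physical core, since CPU time is time-sliced, but no memory overcommitment) is an assumption made for illustration; real hypervisors have their own, often configurable, overcommit rules, and storage is omitted here.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    physical_cpus: int
    memory_mib: int
    # Hypothetical policy: vCPUs may exceed physical cores by this ratio because
    # CPU time is shared by scheduling; memory is never overcommitted.
    vcpu_overcommit_ratio: float = 4.0
    vms: dict = field(default_factory=dict)  # VM name -> (vcpus, memory_mib)

    def used(self):
        """Total vCPUs and memory already promised to VMs."""
        vcpus = sum(v for v, _ in self.vms.values())
        mem = sum(m for _, m in self.vms.values())
        return vcpus, mem

    def allocate(self, name, vcpus, memory_mib):
        """Admit a VM only if the host can back its virtual hardware."""
        used_vcpus, used_mem = self.used()
        if used_vcpus + vcpus > self.physical_cpus * self.vcpu_overcommit_ratio:
            return False  # too many vCPUs to schedule under this policy
        if used_mem + memory_mib > self.memory_mib:
            return False  # not enough physical RAM left
        self.vms[name] = (vcpus, memory_mib)
        return True
```

For instance, a host with 4 cores and 8192 MiB can admit two 4-vCPU, 4096 MiB VMs, but then rejects a third VM for lack of memory even though vCPU headroom remains.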

The Virtual Machine (VM): Your Digital Computer Replica

A Virtual Machine (VM) is the self-contained digital environment crafted by the hypervisor. You can picture it as a complete computer system, but one defined entirely in software. It contains virtual versions of all the hardware components an operating system needs to function.

Inside each VM, the hypervisor provides dedicated virtual resources. This includes a virtual CPU (vCPU), virtual memory (vRAM), virtual disk storage, and virtual network connections (vNICs). This complete package creates the isolated execution space required to install and run a guest operating system just as you would on separate physical hardware.
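
Hypervisor toolchains typically describe this virtual hardware declaratively. As one example, libvirt (commonly used with KVM) defines a VM as an XML "domain". The sketch below builds a minimal domain with Python's standard `xml.etree.ElementTree`; the VM name, sizes, disk path, and network name are placeholders, and a production definition would usually specify more (machine type, boot order, graphics). It picks emulated SATA and e1000 devices, which many stock operating systems can drive without extra drivers, rather than paravirtual virtio devices.

```python
import xml.etree.ElementTree as ET


def build_domain_xml(name, vcpus, memory_mib, disk_path, network="default"):
    """Build a minimal libvirt domain definition for a fully virtualized KVM guest."""
    domain = ET.Element("domain", type="kvm")
    ET.SubElement(domain, "name").text = name
    ET.SubElement(domain, "memory", unit="MiB").text = str(memory_mib)  # vRAM
    ET.SubElement(domain, "vcpu").text = str(vcpus)  # number of vCPUs

    os_section = ET.SubElement(domain, "os")
    # "hvm" (hardware virtual machine) requests a fully virtualized guest.
    ET.SubElement(os_section, "type", arch="x86_64").text = "hvm"

    devices = ET.SubElement(domain, "devices")
    # Virtual disk backed by an image file on the host, on an emulated SATA bus.
    disk = ET.SubElement(devices, "disk", type="file", device="disk")
    ET.SubElement(disk, "source", file=disk_path)
    ET.SubElement(disk, "target", dev="sda", bus="sata")

    # vNIC attached to a libvirt virtual network, emulating an Intel e1000 card.
    nic = ET.SubElement(devices, "interface", type="network")
    ET.SubElement(nic, "source", network=network)
    ET.SubElement(nic, "model", type="e1000")

    return ET.tostring(domain, encoding="unicode")
```

The resulting string could be saved to a file and registered with `virsh define`, after which the VM appears alongside other libvirt-managed guests.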

Host vs. Guest Operating Systems (OS): Understanding the Layers

It’s crucial to differentiate the operating systems involved. The “guest OS” is any operating system (like Windows Server or Ubuntu Linux) installed and running inside a virtual machine. It operates believing it has direct hardware access, though it’s actually interacting only with the VM’s virtual hardware provided by the hypervisor.

The “host OS” refers to the operating system running directly on the physical machine’s hardware. This is most relevant for Type 2 hypervisors (like VirtualBox or VMware Workstation), where the hypervisor software itself runs as an application on top of an existing OS like Windows 10 or macOS.

With Type 1 (bare-metal) hypervisors such as VMware ESXi or Microsoft Hyper-V Server, the hypervisor essentially is the host, running directly on the hardware. In this scenario, the primary relationship is simply between the specialized hypervisor layer and the guest OSs running within the VMs it directly manages on the physical server.

Why Hardware Assistance (Intel VT-x / AMD-V) is Crucial Today

Hardware assistance, like Intel VT-x and AMD-V technologies, is crucial because it massively improves virtualization performance. These features allow the CPU itself to handle tasks that previously required slower software intervention by the hypervisor, making virtualization much faster.

Before hardware support became widespread, x86 hypervisors relied on software techniques such as trap-and-emulate combined with binary translation. These consumed significant CPU time, creating a bottleneck that limited both the speed of virtual machines (VMs) and how many of them a host could run simultaneously.

Intel VT-x and AMD-V introduced new processor operating modes and instructions specifically for virtualization. They enable the guest OS code to execute directly on the CPU most of the time, under secure hardware supervision, bypassing much software emulation.

This dramatically reduces the hypervisor’s direct involvement in managing guest instructions. Sensitive operations trigger a hardware-managed exit to the hypervisor, which handles them and resumes the guest, resulting in near-native performance for many tasks within the virtual machine.
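
This division of labor resembles the run loop of a KVM-based virtual machine monitor, where user-space code repeatedly issues the `KVM_RUN` ioctl and then inspects an exit reason such as `KVM_EXIT_IO` or `KVM_EXIT_HLT`. The sketch below only simulates that loop in Python: `SimulatedVCPU` is a hypothetical stand-in for the hardware, and the exit reasons are simplified strings, not the real KVM API.

```python
from collections import deque

# Simplified exit reasons, loosely modeled on kinds KVM reports (port I/O, HLT).
EXIT_IO, EXIT_HLT = "io", "hlt"


class SimulatedVCPU:
    """Hypothetical stand-in for hardware running guest code until a VM exit."""

    def __init__(self, exits):
        self._exits = deque(exits)

    def run(self):
        # Real hardware would execute guest instructions natively here and
        # return control only when a configured exit condition occurs.
        return self._exits.popleft()


def vcpu_loop(vcpu):
    """Resume the guest repeatedly, handling each exit in the hypervisor."""
    console = []
    exits = 0
    while True:
        reason, data = vcpu.run()  # guest runs until it exits
        exits += 1
        if reason == EXIT_IO:
            console.append(data)  # emulate the device write, then resume the guest
        elif reason == EXIT_HLT:
            return "".join(console), exits  # guest halted: stop this vCPU
        else:
            raise RuntimeError(f"unhandled exit: {reason}")
```

With exits `[(EXIT_IO, "h"), (EXIT_IO, "i"), (EXIT_HLT, None)]`, the loop collects the console output `"hi"` after three exits; everything the guest did between those exits ran without hypervisor involvement.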

By offloading work to the processor, hardware assistance lowers the hypervisor’s CPU usage. This allows greater VM density, meaning more virtual machines can run efficiently on the same physical server hardware with less performance degradation.

Full Virtualization Hypervisors: Exploring Type 1 vs. Type 2

Full virtualization relies on hypervisors, which mainly fall into two categories. Type 1, or “bare-metal,” installs directly on hardware. Type 2, known as “hosted,” runs as software on top of an existing operating system (OS).

Understanding this distinction is vital. It directly influences factors like overall system performance, scalability, ease of management, and the typical environments where each type excels, whether powering data centers or running on a personal computer.

Type 1 Hypervisors (Bare-Metal): Direct Hardware Control

Type 1 hypervisors are installed straight onto the physical server’s hardware, completely replacing or acting as the primary operating system. Their sole purpose is efficiently creating, running, and managing virtual machines with minimal overhead.

Because they interface directly with hardware resources without a full conventional OS underneath, Type 1 solutions generally provide superior performance and scalability. This makes them the standard choice for demanding enterprise data centers and cloud infrastructure.

Prominent examples include VMware vSphere ESXi, Microsoft Hyper-V (especially in Windows Server), and the Kernel-based Virtual Machine (KVM) built into Linux. These robust platforms form the foundation for most large-scale server virtualization deployments worldwide.

Type 2 Hypervisors (Hosted): Running on an Existing OS

Type 2 hypervisors operate differently. They install and run just like regular application software on top of a standard host operating system, such as Windows 10, macOS, or a Linux desktop distribution you already use.

Their main advantage is often simplicity and ease of setup for end-users. They are ideal for developers, testers, or anyone needing to run different operating systems occasionally on their personal workstation without dedicated server hardware.

Familiar examples include Oracle VM VirtualBox, VMware Workstation (for Windows/Linux), and VMware Fusion (for macOS). While convenient, they typically introduce slightly more performance overhead because requests must pass through the host OS layer.
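
The extra layer in the hosted model can be captured in a deliberately simplified sketch that lists the layers a guest's device request passes through. Real stacks are messier (some Type 1 designs route device I/O through a privileged management VM), so treat this as a mental model only; the function names are hypothetical.

```python
def request_path(hypervisor_type):
    """Return the layers a guest's device request passes through (simplified model)."""
    if hypervisor_type == 1:
        # Bare-metal: the hypervisor controls the hardware itself.
        return ["guest OS", "hypervisor", "hardware"]
    if hypervisor_type == 2:
        # Hosted: the hypervisor is an application and relies on the host OS's drivers.
        return ["guest OS", "hypervisor", "host OS", "hardware"]
    raise ValueError("hypervisor_type must be 1 or 2")


def layer_crossings(hypervisor_type):
    """Number of hand-offs between layers before the request reaches hardware."""
    return len(request_path(hypervisor_type)) - 1
```

The additional hand-off through the host OS in the Type 2 path is the source of the extra overhead described above.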

Full Virtualization vs. Other Technologies: Key Differences

Full virtualization differs from paravirtualization (PV) and containerization primarily in how it treats the operating system. It runs completely unmodified guest operating systems, whereas PV requires a modified guest OS and containers share the host OS kernel.

Understanding these key distinctions is essential for selecting the appropriate technology. Each method presents unique advantages and disadvantages concerning performance characteristics, operating system compatibility, resource density, startup speed, and the level of isolation provided between instances.

Full Virtualization vs. Paravirtualization (PV): The OS Modification Factor

The defining difference lies here: full virtualization uses techniques like hardware assistance to run any standard, stock OS. Paravirtualization (PV), conversely, necessitates a guest OS with a modified kernel, specifically altered to work with the hypervisor.

In paravirtualization, the modified guest OS actively cooperates with the hypervisor. It uses special communication calls (“hypercalls”) for sensitive operations, aiming to reduce virtualization overhead by avoiding complex hardware emulation found in purely software-based full virtualization.
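
A toy contrast with trap-and-emulate: below, a hypothetical paravirtualized guest kernel updates a page-table mapping by calling the hypervisor explicitly, instead of executing a privileged instruction and waiting to be trapped. The class and operation names are invented for illustration and do not correspond to Xen's actual hypercall interface.

```python
class Hypervisor:
    """Toy hypervisor exposing an explicit hypercall interface to cooperating guests."""

    def __init__(self):
        self.page_tables = {}  # VM name -> {guest virtual page: physical page}
        self.calls = []

    def hypercall(self, op, *args):
        self.calls.append(op)
        if op == "update_page_table":
            vm, virt, phys = args
            # The hypervisor validates and applies the change on the guest's behalf.
            self.page_tables.setdefault(vm, {})[virt] = phys
            return 0  # success
        raise ValueError(f"unknown hypercall {op}")


class ParavirtGuestKernel:
    """A modified guest kernel that asks the hypervisor rather than touching hardware."""

    def __init__(self, name, hv):
        self.name = name
        self.hv = hv

    def map_page(self, virt, phys):
        # An unmodified kernel would write page tables directly and be trapped;
        # this one requests the change explicitly.
        return self.hv.hypercall("update_page_table", self.name, virt, phys)
```

The guest and hypervisor agree on the interface in advance, which is exactly why the guest kernel must be modified.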

While PV (like that used in early Xen versions) could offer performance advantages historically, modern hardware assistance makes full virtualization very efficient. The core trade-off remains broad compatibility versus potential optimization gained through direct OS-hypervisor communication.

Full Virtualization vs. OS-Level Virtualization (Containerization): Kernel Sharing vs. Full OS

This comparison highlights a fundamental architectural split. Full virtualization provides each virtual machine (VM) with its own isolated, complete OS kernel. Containerization, often called OS-level virtualization, takes a dramatically different approach.

Containers (popularized by tools like Docker and LXC) cleverly share the host machine’s operating system kernel. They only isolate application processes and their dependencies within user-space partitions, not entire operating system instances like VMs do.

This shared kernel model makes containers extremely lightweight and fast to launch. However, full virtualization provides significantly stronger isolation boundaries and the crucial flexibility to run entirely different types of operating systems within separate VMs.
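
You can observe kernel sharing directly: running `uname -r` inside a Linux container reports the host's kernel release. The toy model below captures the same idea in Python; `Kernel`, `Machine`, and the version strings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kernel:
    os_family: str
    version: str


@dataclass
class Machine:
    kernel: Kernel  # the host's own kernel

    def start_container(self, image):
        # A container is a set of isolated processes on the host kernel;
        # it cannot bring a kernel of its own.
        return {"image": image, "kernel": self.kernel}

    def start_vm(self, guest_kernel):
        # A VM boots its own kernel on virtual hardware, so it may differ from the host's.
        return {"kernel": guest_kernel}
```

Every container started on a `Machine` reports the same `Kernel` object, while each VM can run an entirely different OS family.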

Advantages of Using Full Virtualization: The Benefits

Full virtualization delivers significant advantages, notably its unrivaled operating system compatibility, robust security via strong VM isolation, simplified workload migration capabilities, and the backing of mature, widely supported technology platforms. These benefits make it highly versatile.

- Unmatched Operating System Compatibility: Its ability to run virtually any standard x86 operating system (Windows, numerous Linux distributions, BSD, etc.) without modification is a primary benefit, offering maximum flexibility for diverse IT environments.

- Robust Security Through Strong Isolation: The hypervisor enforces strict boundaries between virtual machines. This isolation means security issues or crashes within one VM are typically contained and don’t affect others on the same physical host.

- Simplified Migration and Hardware Abstraction: Because VMs see standardized virtual hardware, moving them between physical hosts is much easier, on many platforms even while they keep running (live migration). Converting physical machines to virtual ones (P2V) is also considerably streamlined.

- Mature and Widely Supported Technology: Full virtualization is a well-understood, battle-tested technology. This maturity translates into stable platforms, extensive community knowledge, broad vendor support, and readily available management expertise.

Disadvantages and Considerations of Full Virtualization

Full virtualization also has drawbacks. These mainly include potential performance overhead compared to physical hardware, higher resource demands per virtual machine, and a critical reliance on the underlying hypervisor’s stability.

- Understanding Performance Overhead: The hypervisor layer introduces additional processing steps. While modern hardware assistance minimizes this, VMs may still run slightly slower than bare metal, particularly for I/O-heavy workloads.

- Higher Resource Consumption per Instance: Each VM encapsulates an entire operating system kernel, demanding substantial RAM, CPU, and storage resources. This typically results in lower density than containerization techniques.

- Dependence on Hypervisor Stability: The hypervisor is the foundation for all running VMs. Any flaw, bug, or crash in the hypervisor itself could impact every virtual machine on that host.