Kubernetes (K8s) has revolutionized how we deploy and manage applications. This comprehensive guide explores what Kubernetes is, its key benefits (from automation to resilience), a detailed comparison with Docker, and its wide range of applications, including microservices, CI/CD, and cloud-native deployments. Dive in to understand this essential container orchestration platform.

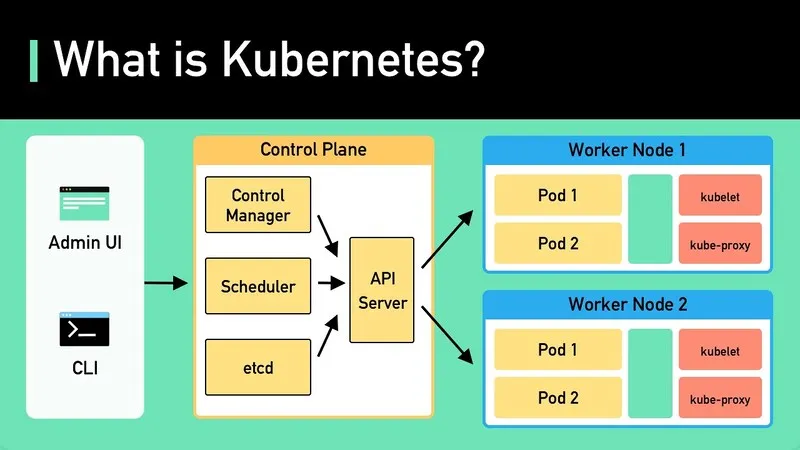

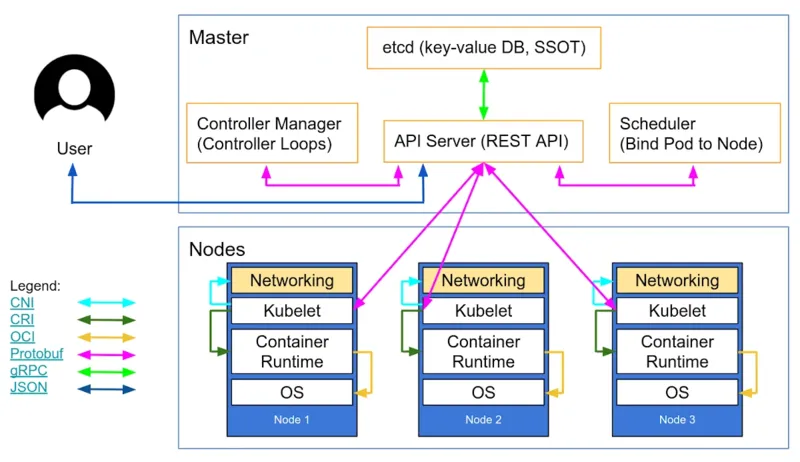

What is Kubernetes?

Kubernetes, often shortened to K8s, is an open-source container orchestration platform. It automates the deployment, scaling, and management of containerized applications. Think of it as a conductor for your application’s containers, ensuring they run smoothly and efficiently.

Kubernetes was originally designed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). This heritage, stemming from Google’s internal “Borg” system, gives Kubernetes a strong foundation in managing applications at massive scale. It’s built to handle the complexities of modern, cloud-native applications, especially those built using a microservices architecture.

Instead of manually deploying and managing individual containers, Kubernetes allows you to define the desired state of your application. For instance, you might specify that you need five instances of a web server container running at all times. Kubernetes then takes over, automatically deploying those containers, monitoring their health, and restarting them if they fail. This is known as declarative configuration, and it’s a core principle of how Kubernetes operates. This declarative approach contrasts with older, imperative methods where you had to explicitly script every step of the deployment process.
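To make the declarative idea concrete, here is a toy control loop in Python. It is not Kubernetes code. The `Cluster` class and the `reconcile` function are hypothetical names used only for illustration. The sketch shows the core pattern: compare the desired replica count with what is actually running, then act on the difference. A crashed container is therefore simply replaced on the next pass.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import count


@dataclass
class Cluster:
    """Hypothetical stand-in for observed state: the set of running container IDs."""
    running: set[str] = field(default_factory=set)
    _ids: count = field(default_factory=lambda: count(1))

    def start(self) -> str:
        cid = f"web-{next(self._ids)}"
        self.running.add(cid)
        return cid

    def stop(self, cid: str) -> None:
        self.running.discard(cid)


def reconcile(desired_replicas: int, cluster: Cluster) -> list[str]:
    """One pass of a toy control loop (not real Kubernetes code): start or stop
    containers until the observed count matches the desired count."""
    actions: list[str] = []
    gap = desired_replicas - len(cluster.running)
    for _ in range(gap):  # too few running: start replacements
        actions.append(f"start {cluster.start()}")
    for cid in sorted(cluster.running)[: max(0, -gap)]:  # too many: stop extras
        cluster.stop(cid)
        actions.append(f"stop {cid}")
    return actions


cluster = Cluster()
reconcile(5, cluster)           # starts web-1 .. web-5
cluster.stop("web-2")           # simulate a crash
print(reconcile(5, cluster))    # ['start web-6']
```

Real Kubernetes controllers run this kind of comparison continuously, driven by watches on the cluster state, rather than as a single function call.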

The power of Kubernetes comes from its ability to abstract away the underlying infrastructure. You might run your applications on a single server, on a cluster of on-premises machines, or on managed services from cloud providers such as Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), or Azure Kubernetes Service (AKS). In every case, Kubernetes provides a consistent way to manage them. This portability is a key advantage.

One key problem Kubernetes addresses is manual operations. Before container orchestration, system administrators often had to start, stop, and scale applications by hand. This was time-consuming, error-prone, and difficult to manage, especially at scale. Kubernetes automates these processes.

Consider a real-world example: an e-commerce website. During a flash sale, traffic might increase tenfold. Kubernetes can automatically scale up the number of web server containers to handle the increased load, then scale them back down when the sale is over. This scalability is crucial for modern applications that need to adapt to fluctuating demands. Without Kubernetes, this scaling would require significant manual intervention, potentially leading to slow response times or even website outages during peak periods.

Another critical benefit is resiliency. If a container or even an entire server fails, Kubernetes automatically reschedules the affected containers onto healthy nodes. This self-healing capability keeps your applications available through many kinds of hardware and software failures. Imagine the e-commerce website again: if one server goes down, Kubernetes stops sending traffic to the containers that were running on it and starts replacements on other, healthy servers. As long as enough copies are running elsewhere, customers see little or no disruption.

Kubernetes achieves this automation and resilience through a set of core components. These include Pods (the smallest deployable units, containing one or more containers), Services (which provide a stable endpoint to access Pods), Deployments (which manage the desired state of Pods), and Nodes (the physical or virtual machines that run the containers). These components, along with others like ConfigMaps and Secrets, work together to form a robust and flexible platform for managing containerized applications. These terms will be defined later. Understanding these core components is fundamental to learning Kubernetes.
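The next sketch illustrates how Pods, Deployments, and Services fit together. It builds a minimal Deployment and Service as Python dictionaries and prints them as JSON, which `kubectl apply -f` accepts alongside YAML. The application name `web`, the image `nginx:1.25`, and the port numbers are assumptions chosen for the example. The key design point is the shared label. The Deployment's selector and Pod template both carry `app: web`, and the Service uses the same selector to find those Pods.

```python
import json

# Hypothetical values chosen for illustration.
APP = "web"
IMAGE = "nginx:1.25"
LABELS = {"app": APP}  # the label that links the Deployment's Pods to the Service


def deployment(replicas: int) -> dict:
    """A Deployment declaring how many Pods should run and what they contain."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": APP},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": LABELS},  # which Pods this Deployment owns
            "template": {  # the Pod template
                "metadata": {"labels": LABELS},
                "spec": {
                    "containers": [
                        {"name": APP, "image": IMAGE, "ports": [{"containerPort": 80}]}
                    ]
                },
            },
        },
    }


def service() -> dict:
    """A Service giving the Pods one stable name and virtual IP."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP},
        "spec": {
            "selector": LABELS,  # route to any Pod carrying these labels
            "ports": [{"port": 80, "targetPort": 80}],
        },
    }


manifest = {"apiVersion": "v1", "kind": "List", "items": [deployment(5), service()]}
print(json.dumps(manifest, indent=2))
```

You could save the output to a file, for example `web.json`, and apply it with `kubectl apply -f web.json`.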

What are the Benefits of Kubernetes?

Kubernetes offers numerous advantages for managing containerized applications, primarily by automating deployment, scaling, and operations. This translates to increased efficiency, reduced costs, and improved application reliability. It simplifies complex tasks, making it a cornerstone of modern cloud-native development.

Automation and Efficiency

Kubernetes excels at automating many manual processes involved in managing applications. Tasks that used to require significant administrator intervention, such as deploying new versions of software, scaling applications up or down, and handling failures, are now handled automatically. This automation frees up valuable time for developers and operations teams, allowing them to focus on building and improving applications rather than managing infrastructure. For example, instead of manually configuring servers for each new application release, developers can define the desired state in a Kubernetes configuration file, and Kubernetes takes care of the rest.

Scalability and Resource Optimization

One of the most significant benefits of Kubernetes is its ability to scale applications on demand. Whether you need to handle a sudden surge in traffic or gradually scale up as your user base grows, Kubernetes can automatically adjust the number of running containers to meet the demand. This is achieved through features like the Horizontal Pod Autoscaler (HPA), which increases or decreases the number of Pods based on observed metrics such as CPU or memory utilization. This dynamic scaling helps maintain performance while avoiding over-provisioning, which reduces infrastructure costs. For instance, an online retailer can use Kubernetes to automatically scale up its web servers during a Black Friday sale and then scale them back down afterward. If it also uses a cluster autoscaler that adds and removes nodes, it pays mainly for the capacity it actually uses.
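The Kubernetes documentation gives the core HPA calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The Python sketch below applies that formula with a tolerance band (0.1 by default in Kubernetes) and clamps the result to a minimum and maximum replica count. It deliberately leaves out details the real controller handles, such as stabilization windows, unready Pods, and missing metrics. The function name and its min/max defaults are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,   # arbitrary example bounds, not Kubernetes defaults
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA calculation: ceil(current * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave replica count alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # respect configured bounds


# Flash-sale surge: 4 Pods averaging 90% CPU against a 50% target.
print(desired_replicas(4, current_metric=90, target_metric=50))  # 8
```

The formula works in both directions. When load drops to 20% CPU across 8 Pods, the same calculation shrinks the Deployment to 4.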

High Availability and Resilience

Kubernetes is designed for high availability and resilience. Its self-healing capabilities keep applications available through many kinds of failure. If a container crashes, Kubernetes restarts it; if a node goes down, Kubernetes reschedules the affected Pods onto healthy nodes. This is achieved through features like ReplicaSets (usually managed for you by a Deployment), which ensure that a specified number of replicas (copies) of a Pod are always running. This redundancy removes many single points of failure and minimizes downtime. For example, suppose the node running a database in a Kubernetes cluster fails. Kubernetes can start the database Pod on another node, provided its persistent storage can be attached there, and service returns after a short interruption.
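The self-healing behaviour can be sketched as a toy simulation. In real Kubernetes, Pods on a failed node are not moved. Instead, the ReplicaSet notices that fewer replicas are running than declared and creates replacement Pods, which the scheduler places on healthy nodes. The hypothetical `heal` function below mimics that process. It uses a deliberately simple "fewest Pods wins" placement rule in place of the real scheduler's filtering and scoring.

```python
from __future__ import annotations


def heal(placement: dict[str, list[str]], failed_node: str, desired: int) -> dict[str, list[str]]:
    """Toy self-healing: drop a failed node, then create replacement Pods
    until the desired replica count is met again."""
    # Pods on the failed node are gone; keep copies of the healthy nodes' Pod lists.
    healthy = {node: list(pods) for node, pods in placement.items() if node != failed_node}
    if not healthy:
        raise RuntimeError("no healthy nodes available to schedule onto")
    missing = desired - sum(len(pods) for pods in healthy.values())
    for i in range(missing):
        # Simplified placement rule: the node with the fewest Pods (ties broken by name).
        target = min(healthy, key=lambda node: (len(healthy[node]), node))
        healthy[target].append(f"replacement-{i + 1}")
    return healthy


placement = {"node-a": ["web-1"], "node-b": ["web-2"], "node-c": ["web-3"]}
print(heal(placement, failed_node="node-b", desired=3))
# {'node-a': ['web-1', 'replacement-1'], 'node-c': ['web-3']}
```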

Portability and Flexibility

Kubernetes provides a consistent platform for deploying and managing applications across a variety of environments. You might run on-premises, in the public cloud (using services like GKE, EKS, or AKS), or in a hybrid cloud environment; in each case Kubernetes provides a unified way to manage your applications. This portability reduces vendor lock-in and gives you the flexibility to choose the infrastructure that best suits your needs. Moving applications between cloud providers also becomes considerably easier. Provider-specific pieces such as load balancers, storage classes, and identity integrations still need attention.

Simplified Microservices Management

Kubernetes is particularly well-suited for managing applications built using a microservices architecture. Microservices involve breaking down a large application into smaller, independent services that communicate with each other. Kubernetes provides the tools and abstractions needed to deploy, manage, and scale these individual services independently. Features like service discovery and load balancing make it easy for microservices to find and communicate with each other, even as they scale and move around within the cluster. This simplifies the development and operation of complex, distributed applications.
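Inside a cluster, a Service named `cart` in the namespace `shop` is reachable through cluster DNS at `cart.shop.svc.cluster.local`, assuming the default `cluster.local` domain. Traffic sent to that name is spread across the Service's ready Pods. The Python sketch below illustrates both ideas. The round-robin `ToyLoadBalancer` is a simplification, since how kube-proxy actually distributes connections depends on its mode. The endpoint addresses are made up.

```python
from __future__ import annotations

from itertools import cycle


def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """DNS name a Service gets from cluster DNS (cluster.local is the common default)."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


class ToyLoadBalancer:
    """Round-robin over a Service's Pod endpoints (a simplification of kube-proxy)."""

    def __init__(self, endpoints: list[str]) -> None:
        if not endpoints:
            raise ValueError("a Service with no ready endpoints cannot route traffic")
        self._endpoints = cycle(endpoints)

    def pick(self) -> str:
        return next(self._endpoints)


print(service_dns_name("cart", namespace="shop"))  # cart.shop.svc.cluster.local
lb = ToyLoadBalancer(["10.0.1.5:8080", "10.0.2.7:8080"])  # made-up Pod IPs
print([lb.pick() for _ in range(3)])
```

The caller only ever needs the stable DNS name. Pods can come and go behind it as the service scales or moves.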

DevOps Enablement

Kubernetes plays a crucial role in enabling DevOps practices. It facilitates continuous integration and continuous delivery (CI/CD) pipelines by automating the deployment and testing of new code. Developers can use Kubernetes to quickly and easily deploy new versions of their applications, test them in a production-like environment, and roll them back if necessary. This accelerates the software development lifecycle and allows for faster iteration and innovation. Tools like Helm (a package manager for Kubernetes) further streamline the deployment process.
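Deployment rollouts are governed by two settings, `maxSurge` and `maxUnavailable`, both 25% by default. According to the Kubernetes documentation, a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down. The hypothetical helper below computes two bounds from these settings. The first is the most Pods that may exist during a rollout, and the second is the fewest that must stay available. These bounds are what let a new version roll out without taking the application offline.

```python
from __future__ import annotations


def rolling_update_bounds(replicas: int, max_surge: int | str = "25%",
                          max_unavailable: int | str = "25%") -> tuple[int, int]:
    """Return (max Pods during the rollout, min Pods that must stay available)."""

    def resolve(value: int | str, round_up: bool) -> int:
        if isinstance(value, int):
            return value  # absolute number of Pods
        percent = int(value.rstrip("%"))
        scaled = replicas * percent  # integer math avoids float rounding surprises
        return -(-scaled // 100) if round_up else scaled // 100

    surge = resolve(max_surge, round_up=True)               # maxSurge rounds up
    unavailable = resolve(max_unavailable, round_up=False)  # maxUnavailable rounds down
    return replicas + surge, replicas - unavailable


print(rolling_update_bounds(10))  # (13, 8)
```

With 10 replicas and the defaults, at most 13 Pods exist during the rollout and at least 8 stay available.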

Kubernetes vs. Docker

Kubernetes and Docker are often mentioned together, but they serve distinct purposes. Docker is a containerization platform used to package, distribute, and run applications within containers. Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of those containers. They are complementary, not competing, technologies.

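Think of it this way: Docker is like a shipping container that holds your application and its dependencies. Kubernetes is like the port authority that manages all the shipping containers, deciding where they go, how many are needed, and ensuring they arrive safely. You can use Docker without Kubernetes, but you’ll lose the automation and scaling benefits. Conversely, Kubernetes always needs a container runtime to run containers. Current versions use a CRI-compatible runtime such as containerd or CRI-O rather than Docker Engine directly; built-in Docker Engine support (dockershim) was removed in Kubernetes 1.24. Images built with Docker still run on these runtimes unchanged.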

A common point of confusion is the relationship between Kubernetes and Docker Swarm. Docker Swarm is a container orchestration tool, directly comparable to Kubernetes. It’s built by Docker and is more tightly integrated with the Docker ecosystem, but it generally has fewer features and a smaller community than Kubernetes. Kubernetes has become the de facto standard for container orchestration.

To clarify the differences and relationships, let’s look at a detailed comparison:

| Feature | Docker | Kubernetes | Docker Swarm |
|---|---|---|---|
| Primary Function | Containerization (packaging and running applications) | Container Orchestration (managing containers) | Container Orchestration (managing containers) |
| Scope | Single container or small groups of containers | Large-scale, distributed applications | Smaller-scale, less complex applications |
| Scalability | Manual scaling (running `docker run` multiple times) | Automated scaling (Horizontal Pod Autoscaler) | Declarative replica counts (`docker service scale`); no built-in autoscaler |
| High Availability | Limited (requires manual intervention) | Built-in (self-healing, replication) | Built-in (service replication) |
| Networking | Basic networking within a single host | Advanced networking (service discovery, load balancing, ingress) | Basic networking (overlay networks) |
| Storage | Basic volume management | Advanced storage management (persistent volumes, claims) | Basic volume management |
| Deployment | Manual deployment using `docker run` | Declarative deployments (YAML files) | Declarative service deployments (YAML files) |
| Rollouts/Rollbacks | Manual | Automated rollouts and rollbacks | Automated rollouts and rollbacks |
| Complexity | Simpler to learn and use | More complex, steeper learning curve | Simpler than Kubernetes, more complex than Docker |
| Community & Ecosystem | Large and active | Largest and most active | Smaller and less active |
| Industry Adoption | Widely adopted for containerization | The de facto standard for container orchestration | Less widely adopted than Kubernetes |
| Vendor Support | Supported by Docker, Inc. | Supported by CNCF and major cloud providers | Supported by Docker, Inc. |

Examples:

- Docker Only: A developer might use Docker to package a simple web application and run it locally on their laptop for testing.

- Docker + Kubernetes: An e-commerce company uses Docker to package each of its microservices (e.g., product catalog, shopping cart, payment processing) and then uses Kubernetes to deploy, scale, and manage those services across a cluster of servers in the cloud.

- Docker + Docker Swarm: A small startup might use Docker Swarm to orchestrate a handful of containers running a simple web application and database on a few servers.

What is Kubernetes Used For?

Kubernetes is primarily used for deploying, managing, and scaling containerized applications. It’s a powerful platform that simplifies the complexities of running applications in modern, distributed environments. Beyond this core function, it supports a wide range of use cases, especially within cloud-native and microservices architectures.

Managing Microservices Architectures

Kubernetes is exceptionally well-suited for managing microservices. Microservices are a software development approach where a large application is broken down into smaller, independent services that communicate with each other. Each service can be developed, deployed, and scaled independently. Kubernetes provides the infrastructure and tools to manage these numerous, interacting services, including service discovery, load balancing, and configuration management. For example, a large streaming platform might use Kubernetes to manage hundreds of microservices responsible for different aspects of the platform, such as video encoding, recommendations, and user authentication.

Continuous Integration and Continuous Delivery (CI/CD)

Kubernetes plays a vital role in enabling CI/CD pipelines. CI/CD is a software development practice that emphasizes automating the building, testing, and deployment of applications. Kubernetes provides a consistent and automated way to deploy new versions of software, test them in a production-like environment, and roll them back if necessary. This can significantly speed up the development cycle and allow for more frequent releases. Tools like Jenkins, GitLab CI, and Argo CD integrate with Kubernetes to create robust CI/CD workflows.

Cloud-Native Application Deployment

Kubernetes has become the de facto standard for deploying cloud-native applications. Cloud-native applications are designed to be run in the cloud, taking advantage of the scalability, resilience, and flexibility of cloud platforms. Kubernetes provides the necessary abstractions to deploy and manage these applications regardless of the underlying cloud provider (e.g., AWS, Google Cloud, Azure). This portability reduces vendor lock-in and allows organizations to choose the best cloud environment for their needs.

Hybrid and Multi-Cloud Deployments

Kubernetes facilitates hybrid and multi-cloud deployments. Organizations can use Kubernetes to manage applications that span on-premises data centers and multiple public cloud providers. This lets organizations run workloads where they make the most sense, whether for cost, performance, or compliance reasons. Multi-cluster management solutions provide this unified management. The original Kubernetes Federation project (KubeFed) is no longer actively developed and is now rarely used.

Scaling Web Applications and APIs

Kubernetes is commonly used to scale web applications and APIs. Its ability to automatically adjust the number of running containers based on demand ensures that applications remain responsive even under heavy load. This is particularly important for applications that experience fluctuating traffic patterns, such as e-commerce websites, social media platforms, and online gaming services.

Batch Processing and Data Science Workloads

Beyond web applications, Kubernetes is also used for batch processing and data science workloads. Jobs and CronJobs in Kubernetes allow you to run containers that perform specific tasks, such as processing large datasets, training machine learning models, or generating reports. This makes Kubernetes a versatile platform for a wide range of applications beyond just serving web traffic.
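For example, a nightly reporting task might be declared as a CronJob like the one generated below. The job name, image, arguments, and schedule (`0 2 * * *`, i.e. 02:00 every day) are illustrative assumptions. The structure follows the Kubernetes API: a `batch/v1` CronJob wraps a Job template, which in turn wraps a Pod template. The schedule validation is only a rough sanity check written for this sketch.

```python
import json

IMAGE = "registry.example.com/report-generator:1.0"  # hypothetical image


def nightly_report_cronjob(schedule: str = "0 2 * * *") -> dict:
    """Build a CronJob that runs a (hypothetical) report container on a schedule."""
    # Rough sanity check only: five cron fields, or a macro such as "@daily".
    if not schedule.startswith("@") and len(schedule.split()) != 5:
        raise ValueError(f"not a 5-field cron expression: {schedule!r}")
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": "nightly-report"},
        "spec": {
            "schedule": schedule,
            "jobTemplate": {  # each scheduled run creates a Job from this template
                "spec": {
                    "backoffLimit": 3,  # retry a failed run up to 3 times
                    "template": {  # each Job creates Pods from this template
                        "spec": {
                            "restartPolicy": "OnFailure",  # Job Pods may not use "Always"
                            "containers": [
                                # hypothetical arguments for the made-up report tool
                                {"name": "report", "image": IMAGE, "args": ["--date", "yesterday"]}
                            ],
                        }
                    },
                }
            },
        },
    }


print(json.dumps(nightly_report_cronjob(), indent=2))
```

A one-off task would use the inner Job spec on its own (`kind: Job`), without the CronJob wrapper.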

Infrastructure as Code (IaC)

Kubernetes promotes Infrastructure as Code (IaC). IaC is the practice of managing infrastructure through code, rather than manual configuration. Kubernetes’ declarative configuration model, where you define the desired state of your applications in YAML files, is a perfect example of IaC. This allows for version control, repeatability, and automation of infrastructure management.
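Because manifests are plain data under version control, it is straightforward to compare what is declared with what is running. The hypothetical `drift` function below is a toy version of that comparison, in the spirit of `kubectl diff` but far simpler. It walks the declared fields and reports any that are missing or different in the live object. It ignores extra fields, such as `status`, that the cluster adds on its own.

```python
from __future__ import annotations

from typing import Any


def drift(desired: dict[str, Any], live: dict[str, Any], path: str = "") -> list[str]:
    """Toy comparison of declared vs. live state. Only fields present in the
    declared manifest are checked; extra server-set fields (e.g. status) are ignored."""
    changes: list[str] = []
    for key in sorted(desired):
        where = f"{path}.{key}" if path else key
        if key not in live:
            changes.append(f"missing {where}")
        elif isinstance(desired[key], dict) and isinstance(live[key], dict):
            changes.extend(drift(desired[key], live[key], where))  # recurse into nested objects
        elif desired[key] != live[key]:
            changes.append(f"{where}: {live[key]!r} -> {desired[key]!r}")
    return changes


declared = {"metadata": {"name": "web"}, "spec": {"replicas": 5}}
running = {"metadata": {"name": "web", "uid": "1234"},  # made-up live object
           "spec": {"replicas": 3}, "status": {"readyReplicas": 3}}
print(drift(declared, running))  # ['spec.replicas: 3 -> 5']
```

Real tooling such as `kubectl apply` uses a more careful merge strategy, but the principle is the same: the file in version control is the source of truth, and differences from it are visible and reviewable.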